I know only Maxima (and that only to a small extent). Using what I know, I tried to approximate a known function, say $e^x$, using the Newton-Gregory forward and backward interpolation formulas. I have run into two problems that I am not sure how to address.

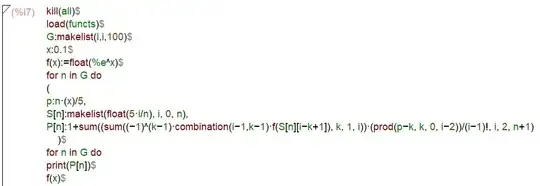

I was taught, and many texts teach the same thing without a proper rationale, that for data near the beginning of the table we use the forward interpolation formula, and for data towards the end of the table we use the backward one. I chose $f(x) = e^x$ to study what would happen otherwise, and I faced a new problem while doing this.

I chose the interval $[0,5]$ and let $n$ denote the number of equally spaced subintervals into which $[0,5]$ is divided. I applied the Newton forward interpolation formula for values of $n$ from 1 to 100 and recorded the corresponding approximate values of $f$ at $x=0.1$. My guess is that, as $n$ increases, the approximation should in theory approach the true value of $f$. However, I see a huge variation in the approximate values as $n$ increases. Of course, I know that the data I have is not sufficient to conclude that the approximate value approaches the exact value as $n\to \infty$. Yet I feel something is not right with the data. It behaves perfectly well up to $n=54$; from there up to $n=99$ something unusual happens; and finally, for $n=100$, the approximation matches the exact value. Is there anything wrong? The command I used is the following one

$\large{\text{What are possible explanations for this?}}$
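For readers who want to reproduce the experiment without Maxima, here is a minimal Python sketch of it. It is not the Maxima command referred to above; the function names `newton_forward` and `experiment` are made up for illustration, and ordinary double-precision floats are assumed.

```python
import math

def newton_forward(f, a, b, n, x):
    """Evaluate the Newton-Gregory forward interpolant of f at x,
    built on n equal subintervals of [a, b] (n + 1 nodes)."""
    h = (b - a) / n
    # Column 0 of the forward-difference table: the tabulated values.
    diffs = [f(a + i * h) for i in range(n + 1)]
    s = (x - a) / h
    total = diffs[0]
    coeff = 1.0  # running binomial coefficient C(s, k)
    for k in range(1, n + 1):
        # Replace the column by the next-order forward differences.
        diffs = [diffs[i + 1] - diffs[i] for i in range(len(diffs) - 1)]
        coeff *= (s - (k - 1)) / k
        total += coeff * diffs[0]
    return total

def experiment(ns=(1, 5, 10, 20, 40, 60, 80, 100)):
    """Absolute error at x = 0.1 for f = exp on [0, 5], for each n in ns."""
    exact = math.exp(0.1)
    return {n: abs(newton_forward(math.exp, 0.0, 5.0, n, 0.1) - exact)
            for n in ns}

if __name__ == "__main__":
    for n, err in experiment().items():
        print(f"n = {n:3d}   |error| = {err:.3e}")
```

Two things may be worth checking with such a sketch. First, each differencing step can roughly double the rounding error carried by the table, so very high-order differences computed in floating point need not be trustworthy. Second, for $n=100$ the step is $h=0.05$, so $x=0.1$ is itself a node ($s=2$) and every term beyond the second difference has a zero coefficient.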

Secondly, my main question is: why do we have two different formulas when the polynomials they represent (the forward and backward interpolation formulas) are the same? I searched for an answer and found one explanation that ascribed the use of two different formulas to finite-precision arithmetic, but I could not grasp the argument properly. It would be helpful if someone could shed some light on this too. The site I visited was the following one:

Newton's Interpolation Formula: Difference between the forward and the backward formula
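To make the second question concrete, the following Python sketch evaluates both formulas on the same table twice: once in exact rational arithmetic (`fractions.Fraction`) and once in ordinary floats. The helper names `difference_table`, `forward`, `backward` and `compare` are hypothetical, and the choice $n=20$ is only an assumption for the demonstration.

```python
from fractions import Fraction
import math

def difference_table(values):
    """Return all columns of the difference table; column k holds the k-th differences."""
    cols = [list(values)]
    while len(cols[-1]) > 1:
        prev = cols[-1]
        cols.append([prev[i + 1] - prev[i] for i in range(len(prev) - 1)])
    return cols

def forward(values, s):
    """Newton forward formula, s = (x - x_0) / h; uses the first entry of each column."""
    cols = difference_table(values)
    total, coeff = cols[0][0], 1
    for k in range(1, len(cols)):
        coeff = coeff * (s - (k - 1)) / k   # s(s-1)...(s-k+1) / k!
        total += coeff * cols[k][0]
    return total

def backward(values, t):
    """Newton backward formula, t = (x - x_n) / h; uses the last entry of each column."""
    cols = difference_table(values)
    total, coeff = cols[0][-1], 1
    for k in range(1, len(cols)):
        coeff = coeff * (t + (k - 1)) / k   # t(t+1)...(t+k-1) / k!
        total += coeff * cols[k][-1]
    return total

def compare(n=20, x=Fraction(1, 10)):
    """Compare both formulas for f = exp on [0, 5] in exact and float arithmetic."""
    h = Fraction(5, n)
    s = x / h
    data = [math.exp(float(i * h)) for i in range(n + 1)]
    exact_data = [Fraction(v) for v in data]  # the same table, held exactly
    p_fwd = forward(exact_data, s)
    p_bwd = backward(exact_data, s - n)
    f_fwd = forward(data, float(s))
    f_bwd = backward(data, float(s - n))
    # (do the exact results agree?, float forward error, float backward error)
    return p_fwd == p_bwd, abs(f_fwd - float(p_fwd)), abs(f_bwd - float(p_bwd))

if __name__ == "__main__":
    same, fwd_err, bwd_err = compare()
    print("exact results identical:", same)
    print("float forward  deviation:", fwd_err)
    print("float backward deviation:", bwd_err)
```

In exact arithmetic the two results coincide, as they must for a single interpolating polynomial. Any gap between the two float deviations comes only from rounding, since near $x_0$ the backward coefficients $t(t+1)\cdots(t+k-1)/k!$ are much larger in magnitude than the forward ones.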