I'm studying about EM-algorithm and on one point in my reference the author is taking a derivative of a function with respect to a matrix. Could someone explain how does one take the derivative of a function with respect to a matrix...I don't understand the idea. For example, lets say we have a multidimensional Gaussian function:

$$f(\textbf{x}, \Sigma, \boldsymbol \mu) = \frac{1}{\sqrt{(2\pi)^k |\Sigma|}}\exp\left( -\frac{1}{2}(\textbf{x}-\boldsymbol \mu)^T\Sigma^{-1}(\textbf{x}-\boldsymbol \mu)\right),$$

where $\textbf{x} = (x_1, ..., x_n)$, $\;\;x_i \in \mathbb R$, $\;\;\boldsymbol \mu = (\mu_1, ..., \mu_n)$, $\;\;\mu_i \in \mathbb R$ and $\Sigma$ is the $n\times n$ covariance matrix.

How would one calculate $\displaystyle \frac{\partial f}{\partial \Sigma}$? What about $\displaystyle \frac{\partial f}{\partial \boldsymbol \mu}$ or $\displaystyle \frac{\partial f}{\partial \textbf{x}}$ (Aren't these two actually just special cases of the first one)?

Thnx for any help. If you're wondering where I got this question in my mind, I got it from reading this reference: (page 14)

http://ptgmedia.pearsoncmg.com/images/0131478249/samplechapter/0131478249_ch03.pdf

UPDATE:

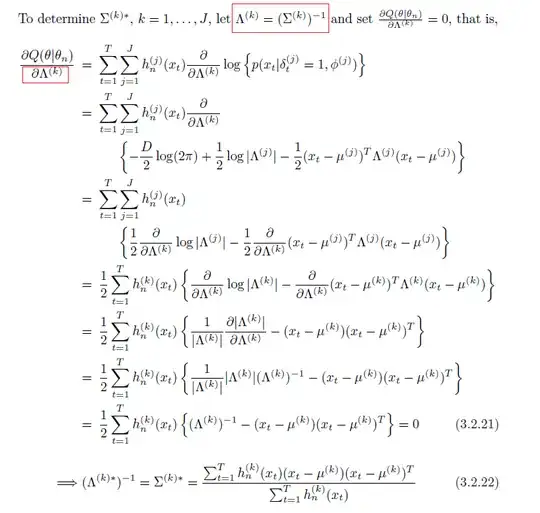

I added the particular part from my reference here if someone is interested :) I highlighted the parts where I got confused, namely the part where the author takes the derivative with respect to a matrix (the sigma in the picture is also a covariance matrix. The author is estimating the optimal parameters for Gaussian mixture model, by using the EM-algorithm):

$Q(\theta|\theta_n)\equiv E_Z\{\log p(Z,X|\theta)|X,\theta_n\}$