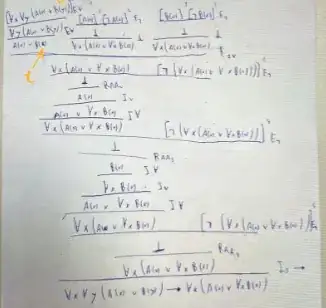

Sorry for the image, but I don't know how to write this type of thing in LaTeX.

Just use nested `\dfrac{}{}{}`, perhaps with some `\hspace` to tidy the alignment.
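As a minimal sketch of that pattern, here are the first two steps of the proof written with plain nested `\dfrac`. The third braced group is not an argument of `\dfrac`; it is simply typeset right after the fraction, which is what places the rule label beside the inference line.

```latex
$\dfrac{\dfrac{\forall x~\forall y~(\alpha(x)\lor\beta(y))}
              {\forall y~(\alpha(s)\lor\beta(y))}{\forall_\mathrm E}}
       {\alpha(s)\lor\beta(t)}{\forall_\mathrm E}$
```

Wider trees usually need a little negative `\hspace` after each label so the labels do not push neighbouring branches apart.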

In any case, your idea to start with disjunction elimination, then prove by reduction to absurdity was a good approach.

However, applying RAA three times, each discharging the same assumption, is a clear hint that you could vastly simplify things.

So, seek to avoid that repetition by pushing the universal introduction downwards and lifting the assumption, $\lnot(\alpha(s)\vee\forall x~\beta(x))$, up to somewhere earlier.

To where? Well, on the branch where you assume $\alpha(s)$, you may use disjunction introduction to contradict that assumption. Whenever a contradiction is derivable in one case of a disjunction elimination, you may use explosion to turn it into a disjunctive syllogism. So put it there and do that.
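In isolation, that disjunctive-syllogism fragment might be typeset roughly as follows. This is only a sketch without any spacing adjustments; the discharge marks $\bullet$ and $\circ$ are just one labelling choice, and the left premise $\alpha(s)\lor\beta(t)$ is assumed to come from two universal eliminations on the hypothesis.

```latex
$\dfrac{\alpha(s)\lor\beta(t)\qquad
  \dfrac{\dfrac{[\lnot(\alpha(s)\lor\forall x~\beta(x))]^\bullet\quad
                \dfrac{[\alpha(s)]^\circ}{\alpha(s)\lor\forall x~\beta(x)}{\lor_\mathrm I}}
               {\bot}{\lnot_\mathrm E}}
        {\beta(t)}{\bot_\mathrm E}
  \qquad[\beta(t)]^\circ}
 {\beta(t)}{\lor_\mathrm E^\circ}$
```

The $\alpha(s)$ case reaches $\beta(t)$ by explosion, the $\beta(t)$ case is immediate, and the disjunction elimination discharges both case assumptions while leaving the $\bullet$ assumption open for the later RAA.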

Thus, you may derive $\beta(t)$, and from there universal introduction and disjunction introduction will set things up to complete your reduction to absurdity.

Then it is a cinch to wrap things up.

$\def\D#1#2#3{\hspace{-0.4ex}\dfrac{#1}{#2}{#3 }\hspace{-3ex}}

\D{\D{\D{\D{{\lower{1.5ex}{[\lnot(\alpha(s)\lor\forall x~\beta(x))]^\bullet}\hspace{-14ex}\D{\D{\D{\D{\D{[\forall x~\forall y~(\alpha(x)\vee\beta(y))]^\star}{\forall y~(\alpha(s)\lor\beta(y))}{\forall_\mathrm E}}{\alpha(s)\lor\beta(t)}{\forall_\mathrm E}\qquad\D{\D{\lower{1.5ex}{[\lnot(\alpha(s)\lor\forall x~\beta(x))]^\bullet}\quad\D{[\alpha(s)]^\circ}{\alpha(s)\lor\forall x~\beta(x)}{\lor_\mathrm I}}{\bot}{\lnot_\mathrm E}}{\beta(t)}{\bot_\mathrm E}\qquad\lower{1.5ex}{[\beta(t)]^\circ}}{\beta(t)}{\lor_\mathrm E^\circ}}{\forall x~\beta(x)}{\forall_\mathrm I^t}}{\alpha(s)\lor\forall x~\beta(x)}{\lor_\mathrm I}}}{\bot}{\lnot_\mathrm E}}{\alpha(s)\lor\forall x~\beta(x)}{{\small\mathrm {RAA}}^\bullet\hspace{-2ex}}}{\forall x~(\alpha(x)\lor\forall x~\beta(x))}{\forall_\mathrm I^s}}{\forall x~\forall y~(\alpha(x)\lor\beta(y))\to\forall x~(\alpha(x)\lor\forall x~\beta(x))}{{\to}^\star_\mathrm I}\\\blacksquare$