Suppose you start with a uniform distribution on the interval $I_0$, where

$$ I_0 = [0,1] $$

Starting from this interval, you choose another interval $I_1$ that is a subset of $I_0$ and exactly half as long. You then repeat this step indefinitely, each time halving the length.

So, writing $m = n-1$ for the previous step, for every $n \ge 1$

$$ I_n \subset I_m $$

and the length of $I_n$ is half that of $I_m$, so $I_n$ has length $2^{-n}$.
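One way to write the construction down (a sketch, assuming that at every step the position of $I_n$ inside $I_{n-1}$ is chosen uniformly, which is the rule the note at the end of this post states for $I_1$): let $I_n = [a_n, a_n + 2^{-n}]$. Since $I_{n-1}$ has length $2^{-(n-1)}$, the left endpoint $a_n$ can move over a range of length $2^{-(n-1)} - 2^{-n} = 2^{-n}$, which gives the recurrence below. The symbols $a_n$ and $U_n$ are introduced here for illustration only.

$$
a_0 = 0, \qquad a_n = a_{n-1} + 2^{-n} U_n, \qquad U_n \overset{\text{i.i.d.}}{\sim} \mathrm{Uniform}[0,1],
\qquad\text{so}\qquad
X = \lim_{n\to\infty} a_n = \sum_{n=1}^{\infty} 2^{-n} U_n .
$$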

Each $I_n$ is a single contiguous interval, not a union of disjoint pieces.

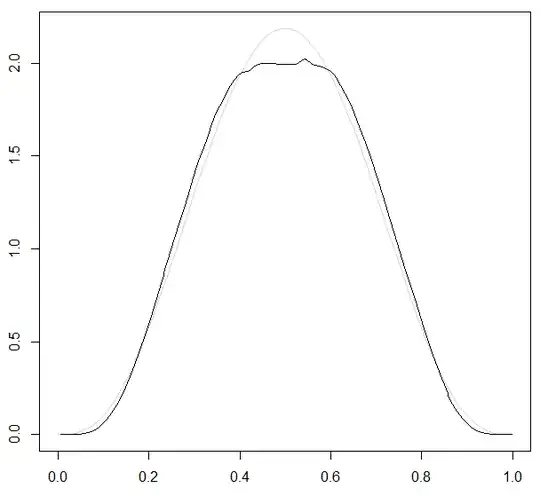

Question: As $n \to \infty$, the nested intervals shrink to a single point. If you repeat this experiment infinitely many times, you get infinitely many such points, which form a distribution. What is the standard deviation of that distribution?

Note: Since $I_0$ has length 1, $I_1$ must have length 0.5. So the left endpoint of $I_1$ cannot lie in $(0.5,1]$, as that would mean $I_1$ is not a subset of $I_0$. All admissible choices of $I_1$ are equally likely, so $[0.25,0.75]$, $[0.2,0.7]$, $[0,0.5]$, etc. are all equally likely candidates for $I_1$.
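A quick Monte Carlo check can make the question concrete. This is a sketch that assumes the note's rule applies at every step: the left endpoint of $I_n$ is drawn uniformly from $[a_{n-1}, a_{n-1} + 2^{-n}]$. Under that assumption the limit point is $X = \sum_{n \ge 1} 2^{-n} U_n$ with $U_n$ i.i.d. uniform on $[0,1]$, so $\operatorname{Var}(X) = \tfrac{1}{12}\sum_{n \ge 1} 4^{-n} = \tfrac{1}{12}\cdot\tfrac{1}{3} = \tfrac{1}{36}$, and the standard deviation would be $1/6 \approx 0.1667$. The function names `limit_point` and `simulate` and the cutoff `depth=40` are hypothetical choices made for illustration.

```python
import random
import statistics


def limit_point(rng: random.Random, depth: int = 40) -> float:
    """Run `depth` halvings and return the left endpoint of I_depth.

    Assumption: at step n the left endpoint a_n is drawn uniformly from
    [a_{n-1}, a_{n-1} + 2**-n], i.e. every placement of I_n inside I_{n-1}
    is equally likely (the note's rule for I_1, applied at every step).
    """
    left = 0.0  # a_0 = 0 because I_0 = [0, 1]
    for n in range(1, depth + 1):
        # Shift by U_n * 2**-n with U_n ~ Uniform[0, 1).
        left += rng.random() * 2.0 ** -n
    # I_depth has length 2**-depth, so `left` is within 2**-depth of the limit point.
    return left


def simulate(num_trials: int = 100_000, seed: int = 0) -> list[float]:
    """Repeat the experiment `num_trials` times and collect the limit points."""
    rng = random.Random(seed)
    return [limit_point(rng) for _ in range(num_trials)]


points = simulate()
# Expect a mean near 0.5 and a standard deviation near 1/6, up to sampling noise.
print(statistics.mean(points), statistics.pstdev(points))
```

Truncating at 40 halvings is a pragmatic cutoff: the remaining tail $\sum_{n > 40} 2^{-n} U_n$ is below $2^{-40}$, far smaller than the sampling noise of the estimate.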