I came across a problem in a quant interview which read:

Suppose you continually randomly sample nested intervals from

$[0,1]$, halving the size each time. That is, the next interval is

$[x,x+0.5]$, where

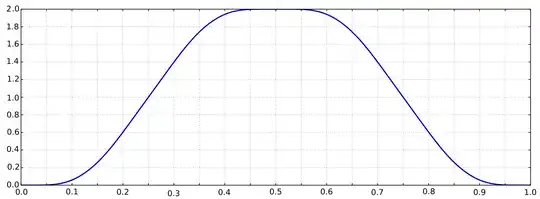

$x∼U(0,0.5),$ and so on. What is the pdf of the point this converges to?

It can easily be calculated that the expectation of $X$ is $1/2$ and the variance is $1/36$. There is already a question about the variance, and my approach is exactly the same as the one below.

My Approach:

We model the problem as a sum of independent uniform random variables $U_1 \sim U(0,1/2)$, $U_2 \sim U(0,1/4)$, $U_3 \sim U(0,1/8)$, ..., $U_n \sim U(0,1/2^n)$. The point of convergence is then $X = \sum_{i=1}^{\infty} U_i$.
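As a sanity check on the moments quoted above, and assuming the $U_n$ are independent (which is how I read the sampling procedure): a $U(0,a)$ variable has mean $a/2$ and variance $a^2/12$, so with $a = 1/2^n$

$$E[X] = \sum_{n=1}^{\infty} \frac{1}{2}\cdot\frac{1}{2^n} = \frac{1}{2}, \qquad \operatorname{Var}(X) = \sum_{n=1}^{\infty} \frac{1}{12}\cdot\frac{1}{4^n} = \frac{1}{12}\cdot\frac{1}{3} = \frac{1}{36}.$$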

My attempts:

I want to compute the pdf directly through integration and evaluate its limiting behavior, but the bounds are tough to handle because the $U_i$ have different ranges. For the partial sum $L_N = \sum_{n=1}^N U_n$, the pdf is $f_{L_N}(x) = \int_0^{\frac{1}{2^1}} \int_0^{\frac{1}{2^2}} \cdots \int_0^{\frac{1}{2^N}} \prod_{n=1}^N 2^n \, \delta\left( x - \sum_{n=1}^N y_n \right) \, dy_1 \, dy_2 \, \cdots \, dy_N.$

From the initial description we can also see that the distribution is symmetric about $1/2$.

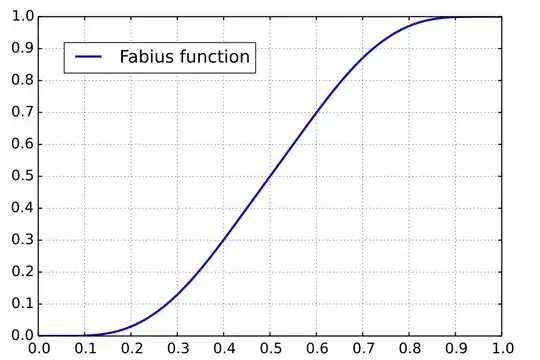
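To get a feel for the shape numerically, here is a minimal Monte Carlo sketch of the model above. It uses only the Python standard library; the function names `sample_limit_point` and `simulate`, the truncation depth of 30 terms, and the sample size are my own illustrative choices, not part of the original problem.

```python
import random
import statistics


def sample_limit_point(rng, depth=30):
    """Approximate one draw of X = U_1 + U_2 + ..., with U_n ~ U(0, 2**-n).

    The sum is truncated after `depth` terms, so the neglected tail
    is at most 2**-depth.
    """
    return sum(rng.uniform(0.0, 2.0 ** -n) for n in range(1, depth + 1))


def simulate(num_samples=50_000, seed=0):
    """Return a list of independent approximate draws of X."""
    rng = random.Random(seed)
    return [sample_limit_point(rng) for _ in range(num_samples)]


samples = simulate()
mean = statistics.fmean(samples)
variance = statistics.pvariance(samples)
# Fraction of samples below 1/2; symmetry about 1/2 suggests about 0.5.
below_half = sum(x < 0.5 for x in samples) / len(samples)

print(f"mean      ~ {mean:.4f}  (claimed 1/2)")
print(f"variance  ~ {variance:.5f} (claimed 1/36 = {1 / 36:.5f})")
print(f"P(X<1/2)  ~ {below_half:.4f}")

# Crude 10-bin histogram on [0, 1] to get a feel for the shape of the pdf.
bins = [0] * 10
for x in samples:
    bins[min(int(x * 10), 9)] += 1
for k, count in enumerate(bins):
    bar = "#" * (count * 200 // len(samples))
    print(f"[{k / 10:.1f}, {(k + 1) / 10:.1f}): {bar}")
```

This only estimates the distribution empirically; it does not give the closed form I am after.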

There may be some correspondence between this question and another?

I have tried the moment generating function, the characteristic function, and so on, without progress. The characteristic function of $U_n$ is $\phi_{U_n}(t) = 2^n \cdot \frac{1}{it} \left(e^{it / 2^n} - 1\right)$, so to get the pdf of the limit we need to compute $f_L(x) = \frac{1}{2\pi} \int_{-\infty}^\infty e^{-itx} \prod_{n=1}^\infty \frac{2^n}{t} \left[\sin\left(\frac{t}{2^n}\right) + i\left(1 - \cos\left(\frac{t}{2^n}\right)\right)\right] \, dt. $
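One rewriting that may help: using $e^{i\theta} - 1 = 2i\, e^{i\theta/2} \sin(\theta/2)$ with $\theta = t/2^n$, each factor splits into a phase times a sinc-type term. Writing $\phi_n$ for the characteristic function of $U_n$ given above, this suggests (a sketch only; I have not carefully justified convergence of the infinite product)

$$\phi_n(t) = e^{it/2^{n+1}} \, \frac{\sin\left(t/2^{n+1}\right)}{t/2^{n+1}}, \qquad \prod_{n=1}^{\infty} \phi_n(t) = e^{it/2} \prod_{n=1}^{\infty} \frac{\sin\left(t/2^{n+1}\right)}{t/2^{n+1}}.$$

The overall phase $e^{it/2}$ is at least consistent with the symmetry about $1/2$, since the remaining product is real and even in $t$.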

But how can I get the concrete distribution of $X$? I have searched the Internet and only found that the sum of $n$ i.i.d. $U(0,1)$ variables follows the Irwin-Hall distribution.