This is not a complete answer, but an attempt. Any comments/suggestions are welcome.

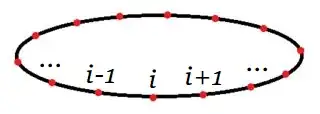

For simplicity, let's drop the $i$ dependence and redefine $Y_j\equiv Y_{|j-i|}$ so that

$$
Z = X_{j^*} + Y_{j^*}
$$

where $j^*$ is the index that minimizes $X_j + Y_j$ over all $j = 1,...,n$.
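Before doing any analysis, it may help to have a numerical reference value for $E[Z]$. The following is a minimal Monte Carlo sketch, not part of the original problem: the function name `estimate_expected_min` is hypothetical, and it assumes purely for illustration that $X_j$ and $Y_j$ are mutually independent exponential variables with given rates.

```python
import random


def estimate_expected_min(rates_x, rates_y, n_samples=100_000, seed=0):
    """Monte Carlo estimate of E[min_j (X_j + Y_j)].

    Assumption (not from the original problem): X_j ~ Exp(rates_x[j]) and
    Y_j ~ Exp(rates_y[j]), all mutually independent.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # Z is the smallest of the n sums X_j + Y_j in this draw.
        total += min(
            rng.expovariate(rx) + rng.expovariate(ry)
            for rx, ry in zip(rates_x, rates_y)
        )
    return total / n_samples


if __name__ == "__main__":
    # Example: n = 5 pairs, all with rate 1.
    print(estimate_expected_min([1.0] * 5, [1.0] * 5))
```

Any closed-form candidate for $E[Z]$ can then be compared against this estimate under the same assumed distributions.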

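To find the expected value of $Z$, we need the distribution of $X_{j^*} + Y_{j^*}$. For each fixed $j$, $X_j$ and $Y_j$ are independent; as a working assumption, suppose that $X_{j^*}$ and $Y_{j^*}$ are independent as well. (This is not guaranteed, because $j^*$ is selected using both $X_j$ and $Y_j$.) Under that assumption, the pdf of $X_{j^*} + Y_{j^*}$ is given by the convolution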

$$
f_{X_{j^*}+Y_{j^*}}(z) = \int_{0}^{z} f_{X_{j^*}}(z-y) \cdot f_{Y_{j^*}}(y) \, dy
$$

where $j^*$ is the index that minimizes $X_j + Y_j$.
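Whether $X_{j^*}$ and $Y_{j^*}$ can really be treated as independent is something we can probe by simulation. The sketch below is only an illustration under an assumed setting ($X_j$, $Y_j$ i.i.d. $\mathrm{Exp}(1)$, with a hypothetical helper `selected_pair_correlation`); it estimates the sample correlation of the selected pair.

```python
import random


def selected_pair_correlation(n=20, n_samples=50_000, seed=0):
    """Sample correlation between X_{j*} and Y_{j*}, j* = argmin_j (X_j + Y_j).

    Assumption: X_j, Y_j are i.i.d. Exp(1), chosen only for illustration.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n_samples):
        pairs = [(rng.expovariate(1.0), rng.expovariate(1.0)) for _ in range(n)]
        # Keep the pair whose sum is smallest.
        x, y = min(pairs, key=lambda p: p[0] + p[1])
        xs.append(x)
        ys.append(y)
    mx = sum(xs) / n_samples
    my = sum(ys) / n_samples
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys)) / n_samples
    var_x = sum((a - mx) ** 2 for a in xs) / n_samples
    var_y = sum((b - my) ** 2 for b in ys) / n_samples
    return cov / (var_x * var_y) ** 0.5
```

With `n=1` there is no selection and the correlation should be close to zero. For larger `n` it tends to come out negative in this setting, which would mean the plain convolution is at best an approximation there.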

Let's assume that we can find the index $j^*$ and that it is unique (if several indices attain the minimum, they all give the same value of $X_j + Y_j$, so we can pick any one of them without changing $Z$). Then, the expected value of $Z$ is

$$
E[Z] = E[X_{j^*} + Y_{j^*}] = \int_{0}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz
$$

We can evaluate this integral by splitting it into two parts, one from $0$ to $x^*$ and another from $x^*$ to infinity, where $x^*$ is the minimum value of $X_j + Y_j$. To find $x^*$ we take the minimum over the sums $X_j + Y_j$, each of which is a continuous random variable with pdf given by the convolution

$$
f_{X_j+Y_j}(z) = \int_{0}^{z} f_{X_j}(z-y) \cdot f_{Y_j}(y) \, dy
$$

for $j = 1,...,n$.
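The convolution for a single sum $X_j + Y_j$ can be approximated numerically. This is a rough sketch with a trapezoidal rule; the helper names `exp_pdf` and `convolution_pdf` and the choice of $\mathrm{Exp}(1)$ densities are assumptions made for illustration only.

```python
import math


def exp_pdf(t, rate=1.0):
    """pdf of Exp(rate); zero for negative arguments."""
    return rate * math.exp(-rate * t) if t >= 0 else 0.0


def convolution_pdf(z, f_x=exp_pdf, f_y=exp_pdf, steps=2000):
    """Approximate f_{X+Y}(z) = int_0^z f_x(z - y) f_y(y) dy (trapezoidal rule)."""
    if z <= 0:
        return 0.0
    h = z / steps
    # Endpoint terms y = 0 and y = z get weight 1/2.
    total = 0.5 * (f_x(z) * f_y(0.0) + f_x(0.0) * f_y(z))
    for k in range(1, steps):
        y = k * h
        total += f_x(z - y) * f_y(y)
    return total * h
```

For two $\mathrm{Exp}(1)$ variables the exact answer is $z e^{-z}$, so `convolution_pdf(1.0)` can be compared with `math.exp(-1)` as a sanity check.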

Then, the expected value of $Z$ is

$$
E[Z] = \int_{0}^{x^*} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz + \int_{x^*}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz
$$

We can evaluate the first integral using the pdf of $X_{j^*} + Y_{j^*}$ given above. To evaluate the second integral, note that

$$
\int_{x^*}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz = \int_{0}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz - \int_{0}^{x^*} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz
$$

The first integral on the right-hand side is the expected value of $X_{j^*} + Y_{j^*}$, which we can calculate using the convolution formula above. The integral on the left-hand side is the probability that $X_{j^*} + Y_{j^*}$ is greater than or equal to $x^*$, multiplied by the expected value of $X_{j^*} + Y_{j^*}$ conditioned on $X_{j^*} + Y_{j^*} \geq x^*$. That is,

$$
\int_{x^*}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz = P(X_{j^*} + Y_{j^*} \geq x^*) \cdot E[X_{j^*}+Y_{j^*} \,|\, X_{j^*}+Y_{j^*} \geq x^*]
$$

The probability that $X_{j^*} + Y_{j^*}$ is greater than or equal to $x^*$ is given by

$$
P(X_{j^*} + Y_{j^*} \geq x^*) = 1 - P(X_{j^*} + Y_{j^*} < x^*) = 1 - \int_{0}^{x^*} f_{X_{j^*}+Y_{j^*}}(z) \, dz
$$

We can evaluate this integral using the convolution formula for the pdf of $X_j + Y_j$.

The conditional expected value of $X_{j^*} + Y_{j^*}$, given that $X_{j^*} + Y_{j^*} \geq x^*$, is given by

$$
E[X_{j^*}+Y_{j^*} \,|\, X_{j^*}+Y_{j^*} \geq x^*] = \frac{\int_{x^*}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz}{P(X_{j^*} + Y_{j^*} \geq x^*)}
$$

Substituting these expressions into the equation for $E[Z]$, we get

$$
E[Z] = \int_{0}^{x^*} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz + \left(1 - \int_{0}^{x^*} f_{X_{j^*}+Y_{j^*}}(z) \, dz\right) \cdot \frac{\int_{x^*}^{\infty} z \cdot f_{X_{j^*}+Y_{j^*}}(z) \, dz}{\int_{x^*}^{\infty} f_{X_{j^*}+Y_{j^*}}(z) \, dz}
$$
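As a sanity check on the split at $x^*$, we can evaluate the pieces numerically for a fixed threshold. The sketch below assumes, for illustration only, that the pdf of the sum is $z e^{-z}$ (two independent $\mathrm{Exp}(1)$ variables) and truncates the infinite tail at a finite `upper`; the names `gamma2_pdf`, `integrate` and `split_expectation` are hypothetical.

```python
import math


def gamma2_pdf(z):
    """pdf of the sum of two independent Exp(1) variables (assumed example)."""
    return z * math.exp(-z) if z >= 0 else 0.0


def integrate(g, a, b, steps=20_000):
    """Composite trapezoidal rule for int_a^b g(z) dz."""
    h = (b - a) / steps
    total = 0.5 * (g(a) + g(b))
    for k in range(1, steps):
        total += g(a + k * h)
    return total * h


def split_expectation(c, upper=60.0):
    """int_0^c z f dz + P(S >= c) * E[S | S >= c], tail truncated at `upper`."""
    low = integrate(lambda z: z * gamma2_pdf(z), 0.0, c)
    tail_prob = 1.0 - integrate(gamma2_pdf, 0.0, c)
    cond_mean = integrate(lambda z: z * gamma2_pdf(z), c, upper) / tail_prob
    return low + tail_prob * cond_mean
```

For this density the unsplit expectation is $2$, and `split_expectation(c)` should return approximately that value for any threshold `c`. This suggests that the split is an identity that holds for every threshold, so it does not by itself carry information about the minimisation.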

To evaluate this expression, we need to compute the convolution formula for the pdf of $X_j + Y_j$ and find the minimum value $x^*$ of $X_j + Y_j$. Once we know $x^*$, we can compute the integrals above and find the expected value of the minimum of the sum $X_j + Y_j$.
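One possible shortcut, offered as a sketch rather than a verified solution: write $S_j = X_j + Y_j$ and let $F_{S_j}$ be its cdf (notation introduced here). If we additionally assume that the sums $S_1, \dots, S_n$ are nonnegative and mutually independent, the minimum can be handled through its survival function:

$$
P(Z > z) = \prod_{j=1}^{n} \bigl(1 - F_{S_j}(z)\bigr), \qquad E[Z] = \int_{0}^{\infty} \prod_{j=1}^{n} \bigl(1 - F_{S_j}(z)\bigr) \, dz
$$

If the same $Y$ variable enters more than one sum, the independence assumption fails and this product form no longer applies.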

I wonder whether this is correct, or if there is a clever way to deal with the minimisation problem. Any ideas?