Let's say we have a countable collection of random variables $X_1, X_2, \dots$ on $(\Omega, \mathscr{F}, \mathbb{P})$.

Can we define a joint distribution function for all of them, i.e.

$$F_{X_1,X_2,\dots}(x_1, x_2, \dots)?$$

If not, why?

If so, then if the random variables are independent, do we have

$$F_{X_1,X_2,\dots}(x_1, x_2, \dots) = \prod_{i=1}^{\infty} F_{X_i}(x_i)?$$
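
For comparison, this is the finite case I am taking for granted (background only, and assuming the $X_i$ are real-valued): for each fixed $n$, the variables $X_1, \dots, X_n$ are independent if and only if

$$F_{X_1,\dots,X_n}(x_1, \dots, x_n) = \prod_{i=1}^{n} F_{X_i}(x_i) \quad \text{for all } (x_1, \dots, x_n) \in \mathbb{R}^n,$$

and the whole sequence is independent, by definition, when this holds for every finite subcollection. What I am asking is whether the infinite product version is also meaningful.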

If the random variables have pdfs or pmfs, do we have

$$f_{X_1,X_2,\dots}(x_1, x_2, \dots) = \prod_{i=1}^{\infty} f_{X_i}(x_i)?$$

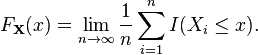

Edit: Is the empirical distribution function here an example?

How about an uncountable collection of random variables $(X_j)_{j \in [0,1]}$?

Can we define $F_{X_j, j \in [0,1]}$?

If the random variables are independent, would the infinite product over $i$ be replaced by a product integral over $j \in [0,1]$?