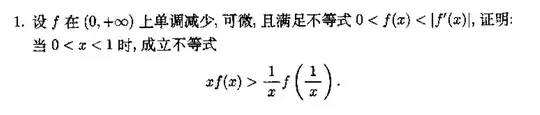

Let $f(x)$ be monotone decreasing on $(0,+\infty)$, such that $$0<f(x)<\lvert f'(x) \rvert,\qquad\forall x\in (0,+\infty).$$

Show that $$xf(x)>\dfrac{1}{x}f\left(\dfrac{1}{x}\right),\qquad\forall x\in(0,1).$$

My ideas:

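Since $f(x)$ is monotone decreasing, $f'(x)\le 0$, and $\lvert f'(x)\rvert>f(x)>0$ rules out $f'(x)=0$, so $f'(x)<0$ and $\lvert f'(x)\rvert=-f'(x)$. Hence $$f(x)+f'(x)<0.$$ Let $$F(x)=e^xf(x)\Longrightarrow F'(x)=e^x(f(x)+f'(x))<0,$$ so $F(x)$ is also monotone decreasing. For $0<x<1$ we have $x<\dfrac1x$, so $$F(x)>F\left(\dfrac{1}{x}\right),$$ that is, $$e^xf(x)>e^{\frac{1}{x}}f\left(\frac1x\right).$$

Since $f\left(\frac1x\right)>0$, it would suffice to prove $$e^{\frac{1}{x}-x}>\dfrac{1}{x^2},\qquad0<x<1,$$ which, after taking logarithms, is equivalent to $$\ln{x}-x>\ln{\dfrac{1}{x}}-\dfrac{1}{x},\qquad0<x<1.$$

This is where I am stuck: since $0<x<1<\dfrac{1}{x}$, the two sides evaluate $t\mapsto\ln t-t$ at points on opposite sides of $t=1$, so I can't compare them by monotonicity. I don't know whether this inequality is true. I tried Wolfram Alpha, but it didn't tell me anything definitive.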
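A quick numerical sanity check of the last inequality might look like the sketch below. It is not a proof: it only samples the difference between the two sides on a grid in $(0,1)$. The function name `gap` and the grid spacing are choices made here for illustration.

```python
import math


def gap(x: float) -> float:
    """Left side minus right side of ln(x) - x > ln(1/x) - 1/x."""
    return (math.log(x) - x) - (math.log(1.0 / x) - 1.0 / x)


# Sample points x = 0.001, 0.002, ..., 0.999 inside (0, 1).
xs = [k / 1000 for k in range(1, 1000)]
values = [gap(x) for x in xs]

# If the inequality holds on the grid, every sampled gap is positive.
smallest = min(values)
all_positive = smallest > 0
print("all sampled gaps positive:", all_positive)
print("smallest sampled gap:", smallest)
```

On this grid the gap stays positive and shrinks toward $0$ as $x\to1^-$, which is consistent with both sides being equal at $x=1$. That suggests the inequality is plausible, but it still needs an analytic argument.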

PS: This problem is from a Chinese analysis problem book by Huimin Xie.