> The first has an accuracy of 100% on training set and 84% on test set. Clearly over-fitted.

Maybe not. It's true that 100% training accuracy is usually a strong indicator of overfitting, but it's also true that an overfit model should perform worse on the test set than a model that isn't overfit. So if you're seeing these numbers, something unusual is going on.

If both model #1 and model #2 used the same method for the same amount of time, then I would be rather reluctant to trust model #1. (And if the difference in test error is only 1%, it wouldn't be worth the risk in any case; 1% is noise.)
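To put a rough number on "1% is noise": a measured test accuracy is a proportion estimated from a finite test set, so it carries a binomial standard error of sqrt(p(1-p)/n). The sketch below evaluates it for an 84% accuracy. The test set sizes are hypothetical, since the question doesn't say how large the test set is, and the function name is only illustrative.

```python
import math

def accuracy_standard_error(accuracy, n_test):
    """Binomial standard error of an accuracy measured on n_test examples."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_test)

# Hypothetical test set sizes; the original question does not give one.
for n_test in (100, 1000, 10000):
    se = accuracy_standard_error(0.84, n_test)
    print(f"n_test={n_test:>6}: 84% +/- {100 * se:.1f} points (one standard error)")
```

With a test set of about 1,000 examples, the standard error is a bit over one percentage point, so a one-point gap between two models is within one standard error.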

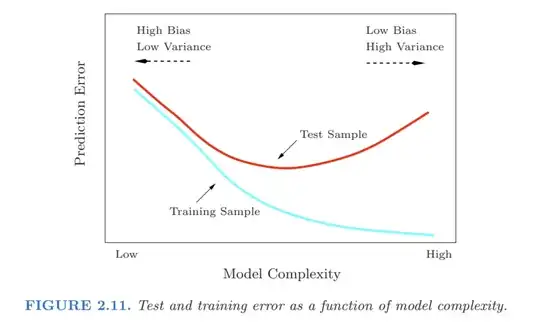

But different methods have different characteristics with regard to overfitting. When using AdaBoost, for example, test error has often been observed not merely to stop increasing but to keep decreasing even after the training error has gone to 0 (an explanation of which can be found in Schapire et al. 1997). So if model #1 used boosting, I would be much less worried about overfitting, whereas if it used linear regression, I'd be extremely worried.

The solution in practice would be to not make the decision based only on those numbers. Instead, retrain on a different training/test split and see if you get similar results (time permitting). If you see approximately 100%/83% training/test accuracy consistently across several different training/test splits, you can probably trust that model. If you get 100%/83% one time, 100%/52% the next, and 100%/90% a third time, you obviously shouldn't trust the model's ability to generalize. You might also keep training for a few more epochs and see what happens to the test error. If it is overfitting, the test error will probably (but not necessarily) continue increasing.
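The repeated-split check described above can be sketched as follows. This is only an illustration under stated assumptions: the data is a made-up pair of overlapping 2-D Gaussian blobs, and the model is a 1-nearest-neighbour classifier, chosen because it memorises its training set and so always scores 100% on it. All function names are hypothetical; substitute your own model and data.

```python
import random
import statistics

def make_data(n, rng):
    """Hypothetical toy data: two overlapping 2-D Gaussian blobs."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 1.0 if label else -1.0
        data.append(((rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label))
    return data

def predict_1nn(train, x):
    """Return the label of the training point closest to x."""
    nearest = min(
        train,
        key=lambda p: (p[0][0] - x[0]) ** 2 + (p[0][1] - x[1]) ** 2,
    )
    return nearest[1]

def accuracy(train, points):
    """Fraction of points whose 1-NN prediction matches the true label."""
    correct = sum(predict_1nn(train, x) == y for x, y in points)
    return correct / len(points)

def repeated_split_scores(data, n_splits=5, test_fraction=0.3, seed=0):
    """Reshuffle, re-split and re-fit n_splits times.

    Returns a list of (training accuracy, test accuracy) pairs.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(n_splits):
        shuffled = data[:]
        rng.shuffle(shuffled)
        n_test = int(len(shuffled) * test_fraction)
        test, train = shuffled[:n_test], shuffled[n_test:]
        scores.append((accuracy(train, train), accuracy(train, test)))
    return scores

data = make_data(300, random.Random(42))
scores = repeated_split_scores(data)
test_accs = [test_acc for _, test_acc in scores]

for train_acc, test_acc in scores:
    print(f"train={train_acc:.2f}  test={test_acc:.2f}")
print(f"test mean={statistics.mean(test_accs):.3f}  "
      f"stdev={statistics.stdev(test_accs):.3f}")
```

What matters is the spread of the test column: a small standard deviation across splits suggests the gap between training and test accuracy is stable, while large swings suggest the model's test performance depends heavily on which examples it happened to see.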