I'm working on a relation classification task for natural language processing and I have some questions about the learning process. I implemented a convolutional neural network using PyTorch, and I'm trying to select the best hyper-parameters.

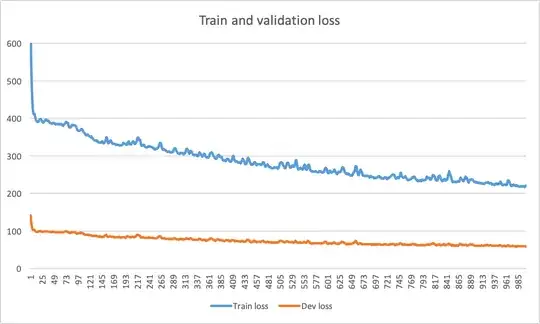

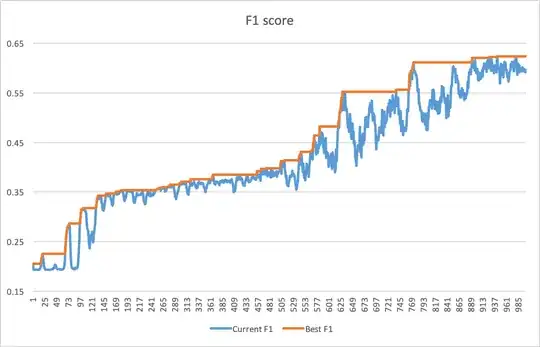

The common behaviour I noticed is that even after 1000 epochs, my validation loss is still slowly decreasing and my metric (macro F1 score) is still slowly increasing. Both curves oscillate, perhaps because of the imbalance among the 4 classes to classify (5%, 3%, 30%, 62%). I'm using Adam, so I did not tune the learning rate myself (the step sizes are adaptive). I'm using dropout of 0.5 after max pooling, and mini-batches of size 50.
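Because macro F1 is the unweighted mean of the per-class F1 scores, each rare class counts as much as the frequent ones, so a few flipped predictions on a rare class can move the score noticeably. A minimal sketch of the computation in plain Python, assuming integer class labels; the function name `macro_f1` and the toy labels are made up for illustration and are not taken from my data:

```python
def macro_f1(y_true, y_pred, n_classes):
    """Unweighted mean of the per-class F1 scores."""
    f1_scores = []
    for c in range(n_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # Assumed convention: F1 is 0 for a class with no true or predicted items
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / n_classes


# Toy labels (hypothetical): class 0 is rare (2 of 20 examples)
y_true = [0] * 2 + [1] * 18
all_right = list(y_true)
one_rare_wrong = [1] + y_true[1:]  # a single rare-class example misclassified

print(macro_f1(y_true, all_right, 2))
print(macro_f1(y_true, one_rare_wrong, 2))
```

In this toy case a single error on the rare class leaves accuracy at 95% but lowers macro F1 from 1.0 to about 0.82, which is the kind of sensitivity that could produce an oscillating curve.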

In books and online tutorials I have seen plots that make it very clear when to stop training: for instance, when the training loss becomes lower than the validation loss, or when the validation loss reaches a plateau. That does not seem to be the case here, so I'm asking more experienced neural network users for advice:

- Why is the network still learning after so many epochs (and so slowly)? Is this reasonable behaviour? Do I need to run the model for 2000, or even 3000, epochs to get the best macro F1 score? Doesn't that risk overfitting?

- My network is fed word embeddings and position embeddings only, so I'm wondering whether this behaviour can be explained by (i) a network architecture that is too complex for the task, (ii) a network architecture that is too simple to model the complexity of the task, or (iii) inputs that are not informative enough to discriminate the classes, so that the network learns with difficulty (and thus slowly). My network architecture is not deep: it has an embedding layer + conv layer + max-pool layer + softmax.

- A side question: suppose 1000 epochs are enough. When I need to compare the performance of many models (or of different hyper-parameter combinations), which score should I pick and compare? The "last best" F1 score (say, at epoch 980) or the last reported score (i.e., the one at epoch 1000)?
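To make the two candidates in the side question concrete, both can be recorded during a single run. A minimal sketch, assuming one validation macro F1 value per epoch; `summarize_run`, `patience`, and the toy scores are hypothetical, and the patience rule is one common stopping heuristic rather than something already in my code:

```python
def summarize_run(val_scores, patience=None):
    """Return the best and the last validation score of a run.

    If `patience` is given, stop once the score has not improved
    for `patience` consecutive epochs (simple early stopping).
    """
    best_score, best_epoch = float("-inf"), 0
    last_score, last_epoch = None, 0
    for epoch, score in enumerate(val_scores, start=1):
        last_score, last_epoch = score, epoch
        if score > best_score:
            best_score, best_epoch = score, epoch
        elif patience is not None and epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs
    return {"best": (best_epoch, best_score), "last": (last_epoch, last_score)}


# Hypothetical validation macro F1 values, one per epoch
scores = [0.40, 0.52, 0.58, 0.57, 0.61, 0.60, 0.59, 0.60]
print(summarize_run(scores))
print(summarize_run(scores, patience=2))
```

Whichever value is used, it should be the same one for every configuration being compared. Note also that a "best" epoch chosen on the validation set is an optimistic estimate, so a separate held-out test set is the safer place to report the final number.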

Do you have any suggestions? Let me know if something is unclear!

=====

Update

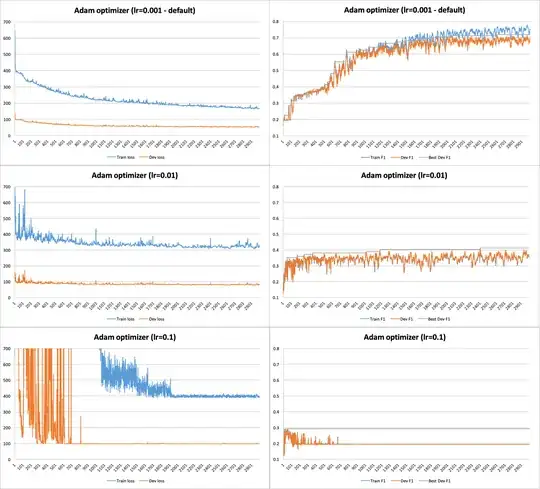

Following the advice of Djib2011, I trained the network with different learning rates, in particular lr=0.001 (the default), lr=0.01, and lr=0.1. I also trained the network for even more epochs (i.e., 3000) to identify the point at which I could stop training. What I noticed is that increasing the learning rate doesn't help, and the best results are given by the default lr=0.001. However, it is still unclear when to stop.

Do you have any other advice for tackling this problem? Besides the learning rate, could there be issues unrelated to the optimizer (e.g., a network with too little capacity)? Thank you in advance!

=====

Update #2

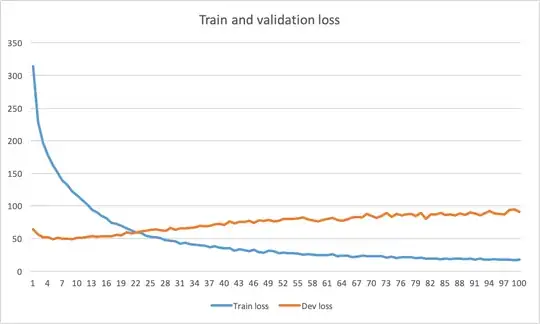

After experimenting with different optimization algorithms (i.e., RMSProp, AdaDelta, Adamax, SGD) with different learning rates and 3000 epochs, I noticed that the behaviour across these settings was still the same (no convergence, and many epochs with only slightly decreasing training and validation losses).

I then modified the PyTorch implementation by moving the three lines `optimizer.zero_grad()`, `loss.backward()`, and `optimizer.step()` inside the (training) batch loop instead of running them after the loop. As a result, this is the edited code that performs training and testing at each epoch:

```python
# Iterate over epochs
for epoch in range(1, n_epochs+1):
    train_loss = 0
    model.train()
    train_predictions = []
    train_true_labels = []

    # Iterate over training batches
    for i, (inputs, labels) in enumerate(train_loader):
        inputs, labels = Variable(inputs).to(device), Variable(labels).to(device)
        optimizer.zero_grad()  # set gradients to zero
        preds = model(inputs)
        preds.to(device)

        # Compute the loss and accumulate it to print it afterwards
        loss = loss_criterion(preds, labels)
        train_loss += loss.detach()
        pred_values, pred_encoded_labels = torch.max(preds.data, 1)
        pred_encoded_labels = pred_encoded_labels.cpu().numpy()
        train_predictions.extend(pred_encoded_labels)
        train_true_labels.extend(labels)

        loss.backward()  # backpropagate and compute gradients
        optimizer.step()  # perform a parameter update

    # Evaluate on development test
    predictions = []
    true_labels = []
    dev_loss = 0
    model.eval()
    for i, (inputs, labels) in enumerate(dev_loader):
        inputs, labels = Variable(inputs).to(device), Variable(labels).to(device)
        preds = model(inputs)
        preds.to(device)
        loss = loss_criterion(preds, labels)
        dev_loss += loss.detach()
        pred_values, pred_encoded_labels = torch.max(preds.data, 1)
        pred_encoded_labels = pred_encoded_labels.cpu().numpy()
        predictions.extend(pred_encoded_labels)
        true_labels.extend(labels)
```
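One possible reading of why the placement of the three calls matters: if they run after the batch loop, `loss` refers only to the last mini-batch, so each epoch performs a single parameter update instead of one per mini-batch. The following toy sketch in plain Python (a one-weight regression, not the CNN) illustrates the difference under that assumption; all names, data, and the learning rate are made up for illustration:

```python
def batch_grad(w, batch):
    """Gradient of the mean squared error of y ~ w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def train(batches, n_epochs, lr, step_inside_loop):
    """Fit a single weight w; return (w, number of parameter updates)."""
    w, n_updates = 0.0, 0
    for _ in range(n_epochs):
        grad = 0.0
        for batch in batches:
            grad = batch_grad(w, batch)   # gradient of this batch's loss only
            if step_inside_loop:
                w -= lr * grad            # update once per mini-batch
                n_updates += 1
        if not step_inside_loop:
            w -= lr * grad                # single update, from the last batch
            n_updates += 1
    return w, n_updates


# Toy data: y = 2x, split into 4 mini-batches of 5 examples
data = [(0.1 * i, 0.2 * i) for i in range(1, 21)]
batches = [data[i:i + 5] for i in range(0, len(data), 5)]

w_inside, updates_inside = train(batches, n_epochs=5, lr=0.1, step_inside_loop=True)
w_outside, updates_outside = train(batches, n_epochs=5, lr=0.1, step_inside_loop=False)
print(updates_inside, updates_outside)   # 20 updates versus 5
print(abs(w_inside - 2.0), abs(w_outside - 2.0))
```

Under this reading, 1000 epochs of the earlier version would amount to only 1000 parameter updates, each based on a single mini-batch of 50 examples, which would be consistent with the very slow decrease of both losses.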

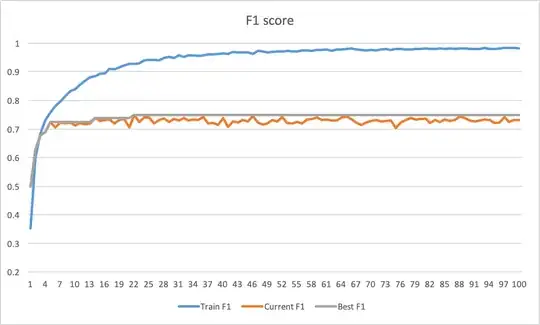

I then trained the network again using my default configuration (Adam, lr=0.001) and, surprisingly, it converged at epoch 22 (see the plot above). I think that was the issue; do you agree? Do you have any additional advice? Thanks again!