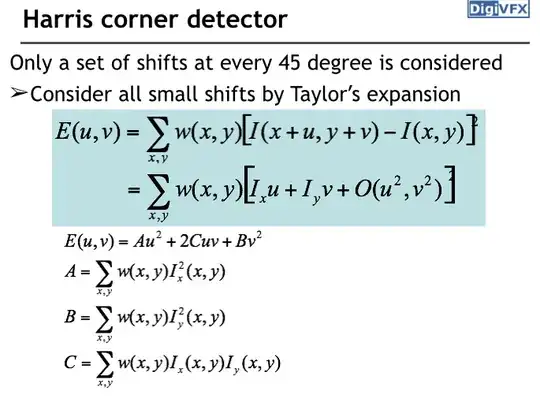

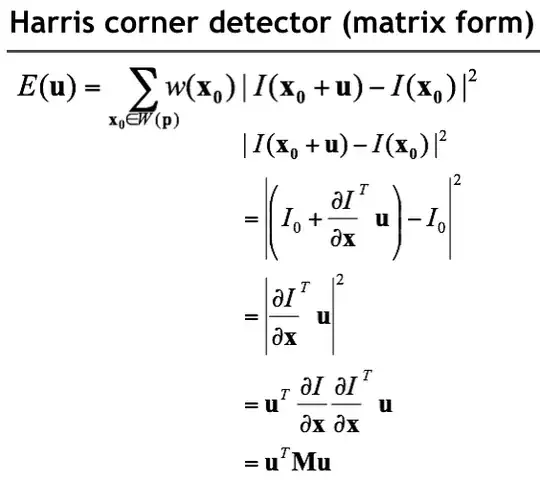

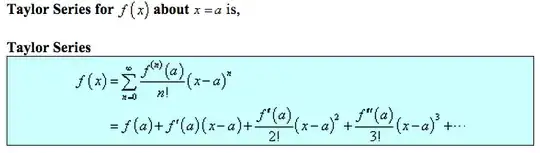

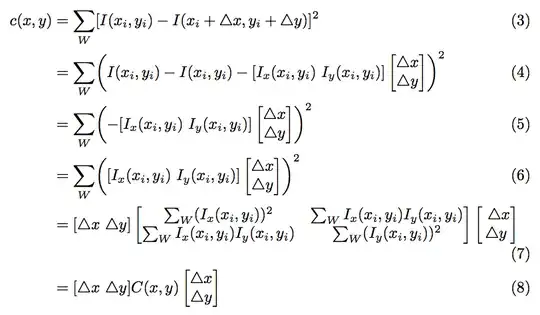

This description of the Harris corner detector (used in computer vision) is as thorough as the original paper, and yet I don't see how the step it attributes to a Taylor series expansion actually relates to one. It looks more like an assumption that the function is locally linear when the shifts $u$ and $v$ are small. Could someone connect the two? In the images above, $I_x$ denotes the partial derivative of $I$ with respect to $x$.
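To make the question concrete, the step can be checked numerically. The following is a minimal sketch under stated assumptions. It uses a hypothetical smooth test image `image(x, y)` with exact derivatives, a 5x5 window, and a uniform window weight $w(x, y) = 1$. It compares the exact shifted-window sum of squared differences $E(u, v)$ with the quadratic form $[u\ v]\, M\, [u\ v]^T$. That quadratic form comes from replacing $I(x+u, y+v)$ with its first-order Taylor expansion $I(x, y) + I_x u + I_y v$.

```python
import math

# Hypothetical smooth test image, chosen so its derivatives are known exactly.
def image(x, y):
    return math.sin(0.3 * x) + math.cos(0.2 * y) + 0.05 * x * y

def grad(x, y):
    """Exact partial derivatives (I_x, I_y) of `image`."""
    ix = 0.3 * math.cos(0.3 * x) + 0.05 * y
    iy = -0.2 * math.sin(0.2 * y) + 0.05 * x
    return ix, iy

# Assumed window: a 5x5 block of pixel positions with uniform weight w(x, y) = 1.
WINDOW = [(x, y) for x in range(10, 15) for y in range(20, 25)]

def exact_energy(u, v):
    """E(u, v) = sum over window of [I(x+u, y+v) - I(x, y)]^2."""
    return sum((image(x + u, y + v) - image(x, y)) ** 2 for x, y in WINDOW)

def structure_tensor():
    """Entries (a, b, c) of M = sum over window of [[I_x^2, I_x I_y], [I_x I_y, I_y^2]]."""
    a = b = c = 0.0
    for x, y in WINDOW:
        ix, iy = grad(x, y)
        a += ix * ix
        b += ix * iy
        c += iy * iy
    return a, b, c

def approx_energy(u, v):
    """[u v] M [u v]^T, i.e. E(u, v) with I(x+u, y+v) replaced by I + I_x u + I_y v."""
    a, b, c = structure_tensor()
    return a * u * u + 2 * b * u * v + c * v * v

def relative_error(u, v):
    """Relative gap between the exact energy and its Taylor-based approximation."""
    exact = exact_energy(u, v)
    return abs(exact - approx_energy(u, v)) / exact

if __name__ == "__main__":
    for s in (0.1, 0.01, 0.001):
        print(s, relative_error(s, 0.5 * s))
```

Because the neglected terms are second order in the shift, the relative error should shrink roughly in proportion to the shift size as $(u, v)$ gets smaller.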