I have two questions related to principal component analysis (PCA):

How do you prove that the principal components form an orthonormal basis? Are the eigenvectors always orthogonal?
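For context, here is a standard argument, sketched here rather than taken from the assignment. The sample covariance matrix $C = \frac{1}{n-1} X_c^\top X_c$ of mean-centered data $X_c$ is symmetric. If $C v_1 = \lambda_1 v_1$ and $C v_2 = \lambda_2 v_2$ with $\lambda_1 \neq \lambda_2$, then $\lambda_1 v_1^\top v_2 = (C v_1)^\top v_2 = v_1^\top C v_2 = \lambda_2 v_1^\top v_2$, so $v_1^\top v_2 = 0$. For a repeated eigenvalue, the spectral theorem guarantees that an orthonormal basis of its eigenspace can be chosen. So after normalizing, the principal components can always be taken to be orthonormal. Because $C$ is also positive semidefinite, its eigenvalues are nonnegative. The NumPy sketch below checks this numerically. The random data and its $24 \times 6$ shape are hypothetical and only for illustration. `np.linalg.eigh` is NumPy's eigensolver for symmetric matrices.

```python
import numpy as np

# Hypothetical data: 24 samples with 6 features each (random, for illustration only)
rng = np.random.default_rng(0)
X = rng.standard_normal((24, 6))

# Center the data and form the (symmetric) sample covariance matrix
Xc = X - X.mean(axis=0)
C = Xc.T @ Xc / (X.shape[0] - 1)

# eigh assumes a symmetric matrix; the columns of V are unit-length eigenvectors
eigvals, V = np.linalg.eigh(C)

# Orthonormal basis: V^T V should equal the identity up to round-off
print(np.allclose(V.T @ V, np.eye(V.shape[1])))  # True
```

Swapping in your own data matrix (rows as samples) should give the same result, since the argument only relies on $C$ being symmetric.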

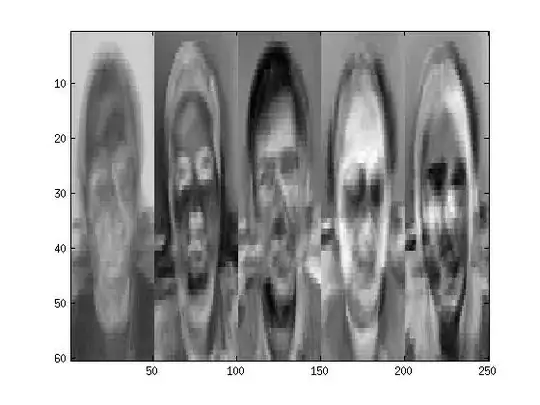

On the meaning of PCA: for my assignment, I have to compute the first 5 principal components of twenty-four $60 \times 50$ images, and I've done that. But the assignment then asks me to display those $5$ principal components as images and comment on what I see. I can see some structure, but I don't understand what is important about it. Assuming I am doing everything correctly, what should I be seeing and commenting on?
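A minimal sketch of the computation described above, assuming each $60 \times 50$ image is flattened into a 3000-pixel row so the 24 images form a $24 \times 3000$ data matrix. The random `images` array is a hypothetical stand-in for the real data. Rather than building the $3000 \times 3000$ covariance matrix, this uses the singular value decomposition of the centered data. Its right singular vectors are the eigenvectors of the covariance matrix, and squared singular values divided by $n-1$ are the corresponding eigenvalues. Each component is then reshaped back to $60 \times 50$ so it can be viewed as an "eigenimage".

```python
import numpy as np

# Hypothetical stand-in for the assignment data: 24 grayscale images of size 60x50.
# Replace `images` with the real array of shape (24, 60, 50).
rng = np.random.default_rng(1)
images = rng.random((24, 60, 50))

n, h, w = images.shape
X = images.reshape(n, h * w)        # each row is one flattened image (3000 pixels)
mean_image = X.mean(axis=0)
Xc = X - mean_image                 # PCA operates on mean-centered data

# Rows of Vt are right singular vectors of Xc, i.e. eigenvectors of the
# covariance matrix Xc.T @ Xc / (n - 1), ordered by decreasing singular value.
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)

k = 5
components = Vt[:k]                          # shape (5, 3000), orthonormal rows
explained_var = s[:k] ** 2 / (n - 1)         # corresponding covariance eigenvalues
eigenimages = components.reshape(k, h, w)    # each component viewed as a 60x50 image

# Scores: coordinates of each centered image in the 5-dimensional PC basis
scores = Xc @ components.T                   # shape (24, 5)

# Rank-5 reconstruction: mean image plus a weighted sum of the eigenimages
recon = (mean_image + scores @ components).reshape(n, h, w)
```

Each `eigenimages[j]` can be displayed with an image viewer such as matplotlib's `imshow`. Under this setup, bright and dark regions mark pixels that tend to vary together (or in opposition) across the 24 images, and `explained_var[j]` says how much of the total variation that pattern accounts for. The overall sign of each component is arbitrary, so an eigenimage and its negative carry the same information. Viewing `mean_image + c * eigenimages[j]` for a few positive and negative values of `c` is one way to see what kind of change that component describes.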