So I was trying to come up with an inverse normal CDF (because reasons*), though I may be quite over my head. Anyway, starting from some not-quite-working samples that GPT gave me, I went to GeoGebra and played with formulas until I got to "looks close enough to what I think it should look like". I got this:

$$ b(x) = \frac{(x - 0.5) \cdot \frac{1}{s}}{\sqrt{\frac{1}{4s} - (x - 0.5)^2 \cdot \frac{1}{s}}} $$
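
Writing $d = x - \tfrac12$ and using $\tfrac14 - d^2 = x(1-x)$, the same formula simplifies as follows (pure algebra, no change to the approximation):

$$ b(x) = \frac{d/s}{\sqrt{\left(\tfrac14 - d^2\right)/s}} = \frac{d}{\sqrt{s}\,\sqrt{\tfrac14 - d^2}} = \frac{x - \tfrac12}{\sqrt{s\,x(1-x)}} $$

In this form it is easy to see that $b(\tfrac12) = 0$, that $b$ is antisymmetric about $x = \tfrac12$ (i.e. $b(1-x) = -b(x)$), and that it blows up at the ends, behaving like $-\frac{1}{2\sqrt{s\,x}}$ as $x \to 0$ (and symmetrically as $x \to 1$).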

Next I wanted to convert $\sigma$ to $s$, and with the help of a "reasoning" GPT I got to this (not great, but something):

$$ s = \frac{2}{\pi \sigma^2} $$
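
One derivation that reproduces this mapping (possibly not the route GPT took) is matching the peak height of the density. With $x$ uniform on $(0,1)$, the density of $b$ at $b(x)$ is $1/b'(x)$. From the formula for $b(x)$ above, $b(\tfrac12) = 0$ and $b'(\tfrac12) = \frac{1/s}{\sqrt{1/(4s)}} = \frac{2}{\sqrt{s}}$. Equating the resulting peak with that of a normal distribution:

$$ f_b(0) = \frac{\sqrt{s}}{2} = \frac{1}{\sigma\sqrt{2\pi}} \quad\Longrightarrow\quad s = \frac{4}{2\pi\sigma^2} = \frac{2}{\pi\sigma^2} $$

So this choice of $s$ only fixes the height at the center; nothing in it forces the tails to match.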

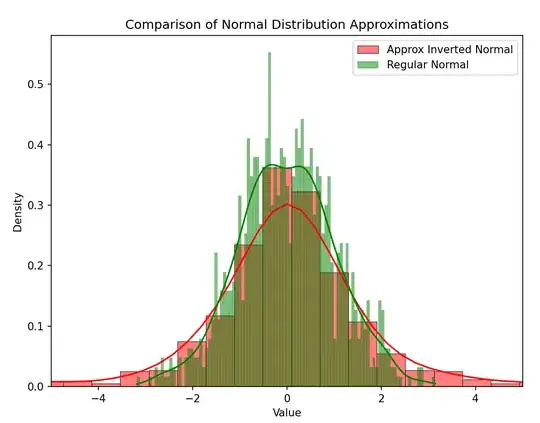

Then I went to plot it (well, I used it to map uniform random values to normal random values, which is what started all this) against NumPy's built-in normal distribution, and there is a peculiarity... The approximation seems to "cluster" significantly. Both plots had 1k data points and I requested 100 bins, but my approximation seems to hit only certain values from the expected range, even though the overall shape of the distribution looks OK (it is also visible that the $\sigma \to s$ mapping is so-so, but whatever).
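
One possible explanation worth checking (a hypothesis, not a confirmed diagnosis): with `bins=100`, seaborn's `histplot` spreads the 100 bins over the full min/max range of each sample by default (unless `binrange` is given), and `plt.xlim` only crops the view afterwards. Because $b(x)$ blows up as $x \to 0$ or $x \to 1$ (its denominator goes to zero there), a few samples can land very far from the mean, stretching every bin. The NumPy-only sketch below, with a hypothetical helper `approx_b` and a fixed seed, compares the bin width and how many bins fit entirely inside the plotted window $[-5, 5]$ for both samples:

```python
import numpy as np


def approx_b(u, s):
    """Hypothetical helper: b(x) from the question, applied to uniforms u."""
    return ((u - 0.5) / s) / np.sqrt(1.0 / (4.0 * s) - (u - 0.5) ** 2 / s)


rng = np.random.default_rng(0)  # fixed seed so the run is repeatable
n, bins, sigma = 1000, 100, 1.0
s = 2.0 / (np.pi * sigma**2)  # s = 2 / (pi * sigma^2)

data_approx = approx_b(rng.random(n), s)
data_normal = rng.normal(0.0, sigma, n)

stats = {}
for name, data in [("approx", data_approx), ("normal", data_normal)]:
    # Edges a 100-bin histogram gets when it spans the full data range
    edges = np.histogram_bin_edges(data, bins=bins)
    width = edges[1] - edges[0]
    # How many of those bins lie entirely inside the plotted window [-5, 5]
    visible = int(np.count_nonzero((edges[:-1] >= -5) & (edges[1:] <= 5)))
    stats[name] = (width, visible)
    print(f"{name}: range [{data.min():.1f}, {data.max():.1f}], "
          f"bin width {width:.3f}, bins inside [-5, 5]: {visible}")
```

If the approximate sample ends up with only a handful of bins inside the window, the "clustering" would be a histogram artifact rather than the function mapping many inputs to one output.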

I like the shape, and I like that the approximating equation is relatively simple. But I cannot understand why it clusters like that. I thought it might be some floating-point issue or whatnot. GPT says it shouldn't be, but if that's right, what the hell could it be? I believe the function should be smooth and monotonic, so how come it seems to map multiple inputs to the same output?!
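
A quick numerical sanity check of the smooth-and-monotonic belief (a sketch; `b` is a hypothetical helper implementing the formula exactly as written above, with $\sigma = 1$): on an evenly spaced grid strictly inside $(0, 1)$, $b$ should be strictly increasing and every input should give a distinct output.

```python
import numpy as np


def b(x, s):
    """Hypothetical helper: b(x) exactly as written in the question."""
    return ((x - 0.5) / s) / np.sqrt(1.0 / (4.0 * s) - (x - 0.5) ** 2 / s)


s = 2.0 / np.pi  # s for sigma = 1, from s = 2 / (pi * sigma^2)

# 999 evenly spaced inputs strictly inside (0, 1), step 0.001
x = np.linspace(0.001, 0.999, 999)
y = b(x, s)

strictly_increasing = bool(np.all(np.diff(y) > 0))  # monotonic on the grid?
distinct_outputs = int(np.unique(y).size)           # how many different outputs
print(strictly_increasing, distinct_outputs)
```

This only checks the function itself on a grid; it cannot rule out effects elsewhere in the pipeline, such as how the samples are binned for plotting.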

Here is the GeoGebra graph I used to come up with the approximation: https://www.geogebra.org/graphing/atn3axmb

\* I'm working on a game and doing some shader stuff where I want all kinds of random numbers, so I wanted to write a function that maps the output of the (hopefully) uniform PRNG I have to normally distributed values. I'm not a mathematician (so I'm not even 100% sure that the name "inverse normal CDF" applies to what I'm doing, but it seems so, and the AI agreed too, so I hope I'm not lying in my title :) ), so I was kinda surprised that the inverse normal CDF turned out to be so complicated, with integrals and whatnot... Unable to find something simple, I tried to come up with something myself with some AI help.
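
On the naming question: feeding uniform samples through a function is inverse transform sampling, and that function is then the inverse CDF of whatever distribution comes out. Solving $b(x) = y$ for $x$ (with $d = x - \tfrac12$, squaring gives $d^2(1 + s y^2) = s y^2/4$) yields that distribution's CDF in closed form:

$$ F(y) = \frac12 + \frac{\sqrt{s}\,y}{2\sqrt{1 + s\,y^2}} $$

This is the CDF of Student's $t$ distribution with 2 degrees of freedom, $F_{t_2}(t) = \tfrac12 + \tfrac{t}{2\sqrt{2 + t^2}}$, evaluated at $t = \sqrt{2s}\,y$. So, as far as this algebra goes, $b$ is an exact inverse CDF, just of a rescaled $t_2$ rather than of the normal, with tails $1 - F(y) \approx \frac{1}{4 s y^2}$ that decay far more slowly than a normal's (a $t_2$ does not even have a finite variance). A small NumPy sketch checking both claims numerically (the helper names `b`, `cdf_b` and `cdf_t2` are hypothetical):

```python
import numpy as np


def b(x, s):
    """Hypothetical helper: the question's b(x), uniform x in (0, 1) -> sample."""
    return ((x - 0.5) / s) / np.sqrt(1.0 / (4.0 * s) - (x - 0.5) ** 2 / s)


def cdf_b(y, s):
    """Closed-form CDF of b(U) for U ~ Uniform(0, 1), from solving b(x) = y."""
    return 0.5 + np.sqrt(s) * y / (2.0 * np.sqrt(1.0 + s * y**2))


def cdf_t2(t):
    """CDF of Student's t distribution with 2 degrees of freedom."""
    return 0.5 + t / (2.0 * np.sqrt(2.0 + t**2))


s = 2.0 / np.pi  # sigma = 1
x = np.linspace(0.01, 0.99, 99)
y = b(x, s)

roundtrip_ok = bool(np.allclose(cdf_b(y, s), x))  # F(b(x)) recovers x
t2_ok = bool(np.allclose(cdf_b(y, s), cdf_t2(np.sqrt(2.0 * s) * y)))  # scaled t_2
print(roundtrip_ok, t2_ok)
```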

Python used for plotting:

    import math

    import matplotlib.pyplot as plt
    import numpy as np
    import seaborn as sns


    def approx_inverted_normal(mean, std, size=10000):
        # Convert std to steepness parameter s using s = 2 / (pi * std^2)
        s = 2. / (math.pi * std**2.)

        u = np.random.rand(size)  # Generate uniform random numbers in [0, 1)

        numerator = (u - 0.5) * (1. / s)
        denominator = np.sqrt((1. / (4. * s)) - (u - 0.5)**2. * (1. / s))
        b = numerator / denominator

        # b's spread already depends on std through s, so std is applied twice
        # here (this makes no difference for std = 1)
        return mean + std * b


    size = 1000
    mean = 0
    std = 1

    data_approx = approx_inverted_normal(mean, std, size)
    data_normal = np.random.normal(mean, std, size)

    plt.figure(figsize=(8, 6))
    sns.histplot(data_approx, bins=100, color='red', label='Approx Inverted Normal',
                 stat='density', kde=True, alpha=0.5)
    sns.histplot(data_normal, bins=100, color='green', label='Regular Normal',
                 stat='density', kde=True, alpha=0.5)
    plt.title("Comparison of Normal Distribution Approximation")
    plt.xlim(mean - 5*std, mean + 5*std)
    plt.xlabel("Value")
    plt.ylabel("Density")
    plt.legend()
    plt.show()
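
If the goal is only to compare the shapes inside the plotted window, one option (a sketch, not the only fix) is to pin the bin edges to that window instead of letting them follow each sample's range. seaborn's `histplot` accepts `binrange=(low, high)` for this; the NumPy-only sketch below does the equivalent with `np.histogram(..., range=...)`, so both samples share identical edges (the seed and variable names are illustrative assumptions):

```python
import math

import numpy as np

rng = np.random.default_rng(1)
size, mean, std = 1000, 0.0, 1.0
window = (mean - 5 * std, mean + 5 * std)

# Approximate sampler from the question, with s = 2 / (pi * std^2)
s = 2.0 / (math.pi * std**2)
u = rng.random(size)
data_approx = mean + std * ((u - 0.5) / s) / np.sqrt(1.0 / (4.0 * s) - (u - 0.5) ** 2 / s)
data_normal = rng.normal(mean, std, size)

# Both histograms use the same 100 edges spanning only the plotted window;
# samples outside the window are simply not counted.
counts_approx, edges = np.histogram(data_approx, bins=100, range=window)
counts_normal, _ = np.histogram(data_normal, bins=100, range=window)

print("bin width:", edges[1] - edges[0])
print("approx samples inside window:", counts_approx.sum())
print("normal samples inside window:", counts_normal.sum())
```

With fixed edges every bar has the same width (0.1 here) for both samples; the approximate sample simply loses the points that fall outside the window, which for a heavy-tailed distribution is a noticeable fraction.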

`qnorm()` in R. The source code for that illustrates the formulae used (essentially polynomials of degree $6$) and can be found at https://github.com/SurajGupta/r-source/blob/master/src/nmath/qnorm.c – Henry Mar 29 '25 at 22:22