I'm trying to solve a problem that asks me to find the p.d.f. of $Y = X_1 + X_2$, where $X_1$ and $X_2$ are both uniformly distributed on $[0,1]$. To do that, I need to evaluate the convolution integral

$$ f_Y(y) = \int_{-\infty}^{\infty} f_{X_1}(x) \, f_{X_2}(y-x) \, dx $$

but I have trouble understanding how to find the limits of integration.

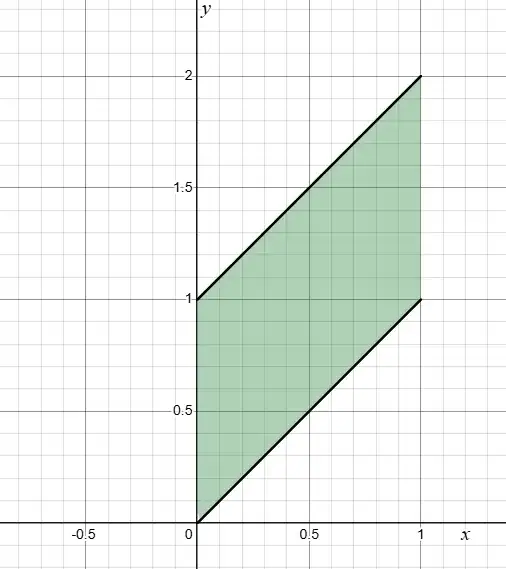

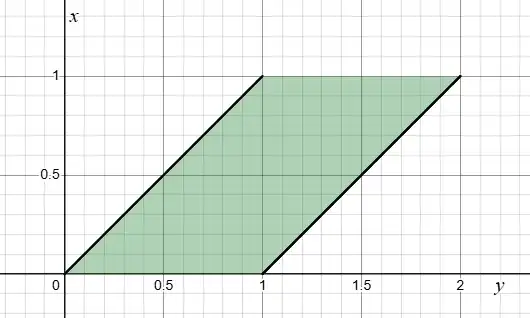

I know that $Y \in [0,2]$, and that if I let $X_1 = x$, then $X_2 = y-x$. I think I then have to treat two cases separately (but I'm not sure why).

Case I: $0 \leq y \leq 1$. I think I have to solve $0 \leq x \leq 1$ and $0 \leq y-x \leq 1$ simultaneously.

Case II: $1 \leq y \leq 2$.
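
For reference, here is a sketch of how the two constraints might combine. It assumes $X_1$ and $X_2$ are independent, so each density is $1$ on $[0,1]$ and $0$ elsewhere. The integrand is then $1$ exactly when both $0 \leq x \leq 1$ and $0 \leq y-x \leq 1$ hold, and the second condition can be rewritten as $y-1 \leq x \leq y$. Intersecting the two intervals for $x$ gives

$$ \max(0,\, y-1) \leq x \leq \min(1,\, y) $$

so that

$$ f_Y(y) = \int_{\max(0,\, y-1)}^{\min(1,\, y)} 1 \, dx = \begin{cases} y, & 0 \leq y \leq 1 \\ 2-y, & 1 < y \leq 2 \\ 0, & \text{otherwise} \end{cases} $$

If this is right, the two cases would arise because the active bounds switch at $y = 1$: for $y \leq 1$ the limits are $0$ and $y$, and for $y \geq 1$ they are $y-1$ and $1$.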

It feels like there should be an easy way to do this, but I'm missing something essential that I need to proceed. I really don't understand how to approach finding the limits. I should note that I'm not great at working with inequalities, so that might be the reason why I am stuck.

Thankful for any advice.

EDIT

$X_1$ and $X_2$ are i.i.d.
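
As a sanity check on whatever limits come out, the convolution integral can be approximated numerically and compared with the triangular density ($y$ on $[0,1]$, $2-y$ on $[1,2]$), which is the standard result for the sum of two independent Uniform$[0,1]$ variables. This is only a minimal sketch, assuming NumPy is available. The function names `uniform_pdf`, `convolution_pdf` and `triangular_pdf` are hypothetical and chosen purely for illustration.

```python
import numpy as np

def uniform_pdf(t):
    """Density of Uniform[0, 1]: 1 inside the interval, 0 outside."""
    t = np.asarray(t, dtype=float)
    return np.where((t >= 0.0) & (t <= 1.0), 1.0, 0.0)

def convolution_pdf(y, n=100_000):
    """Midpoint-rule approximation of the integral of f(x) * f(y - x) dx.

    f_{X_1}(x) vanishes outside [0, 1], so it is enough to integrate
    over x in [0, 1] instead of the whole real line.
    """
    x = (np.arange(n) + 0.5) / n          # midpoints of n equal cells in [0, 1]
    integrand = uniform_pdf(x) * uniform_pdf(y - x)
    return float(integrand.mean())        # interval length is 1, so mean = integral

def triangular_pdf(y):
    """Candidate closed form: y on [0, 1], 2 - y on (1, 2], 0 elsewhere."""
    if 0.0 <= y <= 1.0:
        return y
    if 1.0 < y <= 2.0:
        return 2.0 - y
    return 0.0

if __name__ == "__main__":
    for y in (0.25, 0.5, 1.0, 1.5, 1.75):
        print(y, convolution_pdf(y), triangular_pdf(y))
```

The comparison never uses the integration limits explicitly: the indicator functions inside `uniform_pdf` zero out the integrand wherever either constraint fails, which is the same job the limits do in the hand calculation.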