We start with $i=6$ original balls. At time $t=1,2,3,\ldots$ the probability of selecting one of the original balls is $i/(i-1+t)$; the number of balls in the urn (original plus added) is $i-1+t$ before the $t$-th selection and $i+t$ after the $t$-th selection. If the probability of having seen $k\ge 0$ of the original balls (repeats counted) after pick $t$ is denoted by $P_{t,k}$, the branching in the tree of all possible experiments is

$$
P_{t,k} = P_{t-1,k-1}\frac{i}{i-1+t}+P_{t-1,k}\left(1-\frac{i}{i-1+t}\right).
$$

It's easy to build a full table with this recurrence numerically. The initial values are $P_{0,0}=1$, $P_{0,k\neq 0}=0$.
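As a rough illustration of how such a table can be built, here is a minimal Python sketch of this first recurrence with exact fractions. The function name `pick_count_table` and the cutoff `t_max` are made up for this example; it is not the Maple program used for the numbers in this answer.

```python
from fractions import Fraction

def pick_count_table(i, t_max):
    """Rows P[t][k], k = 0..t_max, for the first recurrence (repeats counted)."""
    row = [Fraction(0)] * (t_max + 1)
    row[0] = Fraction(1)              # P_{0,0} = 1, P_{0,k != 0} = 0
    table = [row]
    for t in range(1, t_max + 1):
        p = Fraction(i, i - 1 + t)    # chance that pick t hits an original ball
        prev = table[-1]
        # either an original ball is picked (k-1 -> k) or not (k stays)
        row = [prev[k] * (1 - p) + (prev[k - 1] * p if k > 0 else 0)
               for k in range(t_max + 1)]
        table.append(row)
    return table

table = pick_count_table(6, 3)
for t, row in enumerate(table):
    print(t, [str(x) for x in row])
```

Each row sums to 1, which is a quick sanity check on the branching ratios.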

In the spirit of the coupon collector problem, the question targets having seen $k$ distinct of the original balls, $1\le k\le i$. [One could perhaps run an inclusion-exclusion filter across the aforementioned $P$.] Repeated picks of an original ball that has already been seen are regarded as uninteresting. So the recurrence is modified to a less favorable branching ratio: if $k-1$ distinct original balls have already been picked, one more is selected not out of $i$ but out of the remaining $i-(k-1)$:

$$
P_{t,k} = \left\{
\begin{array}{ll}
P_{t-1,k-1}\frac{i-(k-1)}{i-1+t}+P_{t-1,k}\left(1-\frac{i-k}{i-1+t}\right),& i-(k-1)\ge 0; \\
P_{t-1,k},& i-(k-1)< 0.\\
\end{array}
\right .
$$
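The modified recurrence can be sketched in Python with exact fractions as follows. This is only an illustrative port, assuming the same initial values $P_{0,0}=1$; the name `distinct_table` is hypothetical, and the second branch of the case distinction is left out because it never occurs for $0\le k\le i$.

```python
from fractions import Fraction

def distinct_table(i, t_max):
    """Rows P[t][k], k = 0..i, for the modified (distinct-ball) recurrence."""
    prev = [Fraction(0)] * (i + 1)
    prev[0] = Fraction(1)                      # P_{0,0} = 1
    table = [prev]
    for t in range(1, t_max + 1):
        n_balls = i - 1 + t                    # urn size before pick t
        row = []
        for k in range(i + 1):
            # no new distinct original ball: i-k unseen ones were all missed
            stay = prev[k] * (1 - Fraction(i - k, n_balls))
            # one of the i-(k-1) unseen original balls is picked
            new = prev[k - 1] * Fraction(i - (k - 1), n_balls) if k > 0 else 0
            row.append(stay + new)
        table.append(row)
        prev = row
    return table

table = distinct_table(6, 4)
print([str(x) for x in table[4][1:5]])   # ['1/21', '5/14', '10/21', '5/42']
```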

For $i=6$ the time development looks like this:

    t=1 k=1 P=1 = 1.00000
    t=1 k=2 P=0 = 0.00000
    t=1 k=3 P=0 = 0.00000
    t=1 k=4 P=0 = 0.00000
    t=1 k=5 P=0 = 0.00000
    t=1 k=6 P=0 = 0.00000
    t=2 k=1 P=2/7 = 0.28571
    t=2 k=2 P=5/7 = 0.71429
    t=2 k=3 P=0 = 0.00000
    t=2 k=4 P=0 = 0.00000
    t=2 k=5 P=0 = 0.00000
    t=2 k=6 P=0 = 0.00000
    t=3 k=1 P=3/28 = 0.10714
    t=3 k=2 P=15/28 = 0.53571
    t=3 k=3 P=5/14 = 0.35714
    t=3 k=4 P=0 = 0.00000
    t=3 k=5 P=0 = 0.00000
    t=3 k=6 P=0 = 0.00000
    t=4 k=1 P=1/21 = 0.04762
    t=4 k=2 P=5/14 = 0.35714
    t=4 k=3 P=10/21 = 0.47619
    t=4 k=4 P=5/42 = 0.11905
    t=4 k=5 P=0 = 0.00000
    t=4 k=6 P=0 = 0.00000
    t=3800 k=1 P=2/2209677920472337 = 0.00000
    t=3800 k=2 P=18995/2209677920472337 = 0.00000
    t=3800 k=3 P=48095340/2209677920472337 = 0.00000
    t=3800 k=4 P=45654501495/2209677920472337 = 0.00002
    t=3800 k=5 P=17330448767502/2209677920472337 = 0.00784
    t=3800 k=6 P=2192301769089003/2209677920472337 = 0.99214
    t=5000 k=1 P=2/8706626751739917 = 0.00000
    t=5000 k=2 P=24995/8706626751739917 = 0.00000
    t=5000 k=3 P=11897620/1243803821677131 = 0.00000
    t=5000 k=4 P=14863101785/1243803821677131 = 0.00001
    t=5000 k=5 P=7425605651786/1243803821677131 = 0.00597
    t=5000 k=6 P=412121113674123/414601273892377 = 0.99402

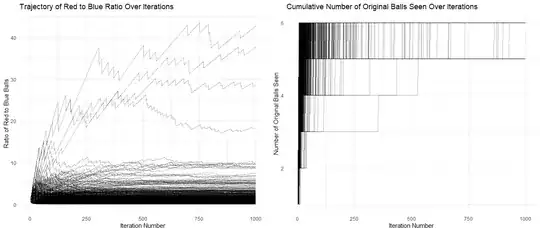

The expectation values for the times of having picked $k$ distinct original balls are $E_k=\sum_t tP_{t,k}$.

Summing over the first $t\le 80$, these partial sums are:

    k= 1 E=2.49960
    k= 2 E=12.37798
    k= 3 E=61.62654
    k= 4 E=312.69547
    k= 5 E=1116.35886
    k= 6 E=1734.44155

Summing over $t\le 160$, the partial sums are:

    k= 1 E=2.49995
    k= 2 E=12.46728
    k= 3 E=67.92520
    k= 4 E=484.55048
    k= 5 E=3054.32631
    k= 6 E=9258.23077

Summing over $t\le 1000$, the partial sums are:

    k= 1 E=2.50000
    k= 2 E=12.49911
    k= 3 E=73.81131
    k= 4 E=1001.90220
    k= 5 E=26931.19683
    k= 6 E=472478.09050
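The partial sums $\sum_{t\le T} tP_{t,k}$ can also be accumulated with a short Python sketch. It uses floating point rather than exact rationals, and the name `partial_sums` is made up for this example. If the recurrence is transcribed correctly, the printed values should agree with the listings above.

```python
def partial_sums(i, t_max):
    """E_k = sum_{t<=t_max} t * P_{t,k} for k = 1..i (index 0 is unused)."""
    prev = [0.0] * (i + 1)
    prev[0] = 1.0                              # P_{0,0} = 1
    sums = [0.0] * (i + 1)
    for t in range(1, t_max + 1):
        n_balls = i - 1 + t                    # urn size before pick t
        row = [prev[k] * (n_balls - (i - k)) / n_balls
               + (prev[k - 1] * (i - k + 1) / n_balls if k > 0 else 0.0)
               for k in range(i + 1)]
        for k in range(1, i + 1):
            sums[k] += t * row[k]              # add time times probability
        prev = row
    return sums

for t_max in (80, 160, 1000):
    print(t_max, ["%.5f" % e for e in partial_sums(6, t_max)[1:]])
```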

Numerically this suggests expectation times of $2.5$, $12.5$, $75$(?), ... for $k=1,2,3,\ldots$ (I have no formal proof of that, only lower bounds from the partial sums. I did not try to speed up convergence with any type of Levin/Wynn algorithm.)
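For $k=1$, at least, the value $2.5$ can be checked in closed form. With $i=6$ the recurrence reduces to $P_{t,1}=P_{t-1,1}\,t/(t+5)$ with $P_{1,1}=1$, so that

$$
P_{t,1}=\frac{720}{(t+1)(t+2)(t+3)(t+4)(t+5)},\qquad
E_1=720\sum_{t\ge 1}\left[\frac{1}{(t+1)\cdots(t+4)}-\frac{5}{(t+1)\cdots(t+5)}\right]
=720\left(\frac{1}{72}-\frac{5}{480}\right)=\frac{5}{2}.
$$

Here $t=(t+5)-5$ was used in the numerator, and both sums telescope via $\sum_{t\ge 1}1/[(t+1)\cdots(t+m)]=1/[(m-1)\,m!]$. This covers only $k=1$; the larger $k$ are not settled by it.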

Question 1, "how many more iterations are needed..", sounds as if this is what was asked (??). Of course, infinitely many more iterations are needed to ensure (!) that each of the remaining $i-k$ balls is also picked at least once.

Maple program:

    # probability of having seen k distinct original balls
    # starting from i original balls after step t.
    P := proc(i,k,t)
        option remember;
        if t=0 then
            if k=0 then
                1;
            else
                0 ;
            end if
        else
            if i-(k-1) >= 0 then
                # a new distinct ball is picked (first term) or not (second term)
                (procname(i,k-1,t-1)*(i-k+1)+procname(i,k,t-1)*(t+k-1))/(i-1+t) ;
            else
                procname(i,k,t-1) ;
            end if;
        end if;
    end proc:

    i := 6 ;
    # cp holds the running sums of t*P (partial sums of the expectation values)
    cp := [seq(0,k=1..i)] ;
    # loop over the time steps (no upper bound: interrupt manually)
    for t from 1 do
        for k from 1 to i do
            p := P(i,k,t) ;
            if t < 30 or modp(t,200)=0 then
                printf("t=%d k=%d P=%a = %.5f\n",t,k,p,evalf(p)) ;
            end if;
            pold := op(k,cp) ;
            # compute expectation value by adding time times probability
            cp := subsop(k=pold+t*p,cp) ;
        end do:
        if t < 30 or modp(t,200)=0 then
            for k from 1 to i do
                printf("k= %d E=%.5f\n",k,evalf(op(k,cp))) ;
            end do:
        end if;
    end do:

About Question 2: If already $\hat k$ of the original balls have been selected (coupon collector, each at least once) after $n$ picks, what is the probability of collecting the other $i-\hat k$ at some time in the future? The answer to this is given by restarting the experiment with $i+n$ balls in total and calculating the requested $P^{(2)}_{j,i}$ with the equivalent algorithm:

$$
P^{(2)}_{j,k} = \left\{
\begin{array}{ll}
P^{(2)}_{j-1,k-1}\frac{i-(k-1)}{n+i-1+j}+P^{(2)}_{j-1,k}\left(1-\frac{i-k}{n+i-1+j}\right),& i-(k-1)\ge 0; \\
P^{(2)}_{j-1,k},& i-(k-1)< 0.\\
\end{array}
\right .
$$

starting at $P^{(2)}_{0,\hat k}=1$, $P^{(2)}_{0,k\neq \hat k}=0$.
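A minimal Python sketch of this restarted recurrence, assuming the same branching ratios as in the formula above; the name `restart_table` and its argument order are hypothetical. As a consistency check, one pick ($n=1$) always gives $\hat k=1$, so three further picks should reproduce the $t=4$ row of the original experiment.

```python
from fractions import Fraction

def restart_table(i, n, k_hat, j_max):
    """Rows P2[j][k], k = 0..i, after j further picks."""
    prev = [Fraction(0)] * (i + 1)
    prev[k_hat] = Fraction(1)                  # P2_{0,k_hat} = 1, else 0
    table = [prev]
    for j in range(1, j_max + 1):
        n_balls = n + i - 1 + j                # urn size before further pick j
        row = []
        for k in range(i + 1):
            # no new distinct original ball in this pick
            stay = prev[k] * (1 - Fraction(i - k, n_balls))
            # one of the i-(k-1) unseen original balls is picked
            new = prev[k - 1] * Fraction(i - (k - 1), n_balls) if k > 0 else 0
            row.append(stay + new)
        table.append(row)
        prev = row
    return table

row = restart_table(6, 1, 1, 3)[-1]
print([str(x) for x in row[1:5]])   # ['1/21', '5/14', '10/21', '5/42']
```

The entry with $k=i$ in the last row is then the probability of having collected all original balls after $j$ further picks.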