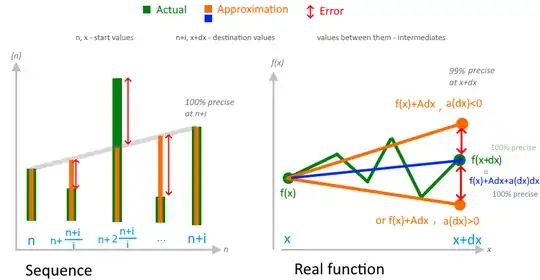

Sequence

If we consider a sequence, we can find how each next element changes relative to the given one as $\{n+1\}-\{n\}$.

We can also find how the element $i$ positions after the given one differs from it, i.e. $\{n+i\}-\{n\}$, and if we are interested only in the difference between $n+i$ and $n$, that's fine.

But if we want to find the difference between sequence elements whose index difference is lower than $i$, i.e. with higher "resolution", while using only the $n+i$ equation, we can only approximate. For example, we can divide $\{n+i\}-\{n\}$ by $i$; the result is the value that we need to add to the $n$th element $i$ times to get exactly the $(n+i)$th value, AND it also gives approximate values of the elements with indexes between $n$ and $n+i$.

In this case, approximation means only that the values of the elements with indexes between $n$ and $n+i$ may be wrong, right?
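To make the sequence case concrete, here is a minimal sketch. It assumes a hypothetical example sequence $a_n = n^2$ with $n = 2$ and $i = 3$ (none of these come from the text above; they are chosen only for illustration). It builds the values obtained by adding the averaged step $(a_{n+i} - a_n)/i$ repeatedly and compares them with the true elements.

```python
def a(n):
    # Hypothetical example sequence, assumed only for illustration.
    return n * n


def linear_steps(seq, n, i):
    """Values obtained by adding the averaged step (seq(n+i) - seq(n)) / i
    to seq(n) zero, one, ..., i times."""
    step = (seq(n + i) - seq(n)) / i
    return [seq(n) + k * step for k in range(i + 1)]


n, i = 2, 3
approx = linear_steps(a, n, i)              # stepped values from index n to n+i
exact = [a(n + k) for k in range(i + 1)]    # true sequence values
errors = [ap - ex for ap, ex in zip(approx, exact)]

print("approx:", approx)
print("exact: ", exact)
print("errors:", errors)
```

In this particular example the error is zero at both ends ($n$ and $n+i$) and nonzero at the indexes in between, which matches the description above.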

Real function

In the case of a real function, we find the difference between two values whose corresponding arguments differ by an infinitely small amount, and then divide that difference by the infinitely small difference of the arguments (I do not really understand why, since we treat it as a sort of smallest indivisible quantity, but I have some assumptions, so let me ask that in a different question), getting the same linear approximation, but now between $f(x)$ and $f(x+\delta x)$.

That means that we divide the infinitely small function range ($\delta y$) by the infinitely small argument difference ($\delta x$) (which may be greater than the function difference, though) to know what value we should add to $f(x)$ $\delta x$ times to get the value $f(x+\delta x)$, AND to approximate the values between them with step $\delta x$.

The question

The problem is that $\lim_{\delta x \to 0} \dfrac{f(x+\delta x)-f(x)}{\delta x}=\lim_{\delta x \to 0}(A + \alpha(\delta x))=A$, where $\alpha(\delta x)$ is infinitely small as $\delta x \to 0$, and then

$$f(x+\delta x) = f(x) + A\,\delta x + \boxed{\alpha(\delta x)\,\delta x}$$

Do I understand correctly that, in the case of the derivative, approximation means the following? Unlike sequences, where the $(n+i)$th value is 100% precise and only the values between $n$ and $n+i$ may be wrong, for a real function the values between $f(x)$ and $f(x + \delta x)$ may also be wrong, but the destination value $f(x + \delta x)$ will be wrong too, by $\alpha(\delta x)\,\delta x$ (smaller or greater). That error is, however, infinitely small, so the correctness is infinitely close to 100%, yet not 100%?
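As a numeric illustration of the term $\alpha(\delta x)\,\delta x$, here is a small sketch. It assumes a hypothetical function $f(x) = x^2$ at the point $x = 1$, so that $A = f'(1) = 2$ and, by direct expansion, $\alpha(\delta x) = \delta x$. These choices are assumptions for the example only. The code compares $f(x+\delta x)$ with the linear part $f(x) + A\,\delta x$ for a few finite steps.

```python
def f(x):
    # Hypothetical example function, assumed only for illustration.
    return x * x


def f_prime(x):
    # Exact derivative of the assumed f.
    return 2 * x


x = 1.0
rows = []
for h in (0.1, 0.01, 0.001):          # finite stand-ins for delta x
    linear = f(x) + f_prime(x) * h    # f(x) + A * delta x
    error = f(x + h) - linear         # the term alpha(delta x) * delta x
    alpha = error / h                 # alpha(delta x) itself
    rows.append((h, error, alpha))
    print(f"h={h:<6} error={error:.3e} alpha={alpha:.3e}")
```

For this assumed $f$, every finite step leaves a nonzero error at $x + \delta x$, and both the error and $\alpha(\delta x)$ shrink as the step shrinks, with the error shrinking faster than the step itself.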

$\alpha(dx)\,dx$ is an extra term that makes an error, but actually it seems to be the reverse: a fix, with which the function should be 100% correct at $x+dx$. And if we consider only $f(x)+f'(x)dx$, as you wrote, it will have an error of $\alpha(dx)\,dx$. Probably at the same time I thought that this monomial always vanishes when taking the limit, unlike $f'(x)dx$; however, they both vanish – isagsadvb Jun 26 '24 at 12:32