In school, we are usually taught the following points:

- If you have only a few data points and calculate their variance, the variance will be very large

- As the number of data points increases, the variance will become smaller

Supposedly, this is justified by the "consistency" property of estimators. That is, since the sample variance is "statistically consistent", it gets closer to the "true value" as the number of points used to calculate it increases (see "Show that sample variance is unbiased and a consistent estimator").
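
To make the two properties named above concrete, here is a minimal simulation sketch. It assumes Bernoulli shots with success probability 0.5 (the same setting as the simulation at the end of this post); the sample sizes and the number of replications are arbitrary choices of mine. It draws many independent samples at each sample size and reports the average of the sample variances (which unbiasedness says should sit near the true value 0.25 at every n) and their standard deviation (which consistency says should shrink as n grows).

```r
set.seed(1)
p <- 0.5            # assumed success probability; true variance is p * (1 - p) = 0.25
n_reps <- 10000     # arbitrary number of replications per sample size
sizes <- c(5, 20, 100)

# Sample variance of n Bernoulli(p) shots, repeated n_reps times
sim_var <- function(n) replicate(n_reps, var(rbinom(n, 1, p)))

# One column per sample size: n, mean of the estimates, sd of the estimates
results <- sapply(sizes, function(n) {
  v <- sim_var(n)
  c(n = n, mean_s2 = mean(v), sd_s2 = sd(v))
})

print(round(t(results), 4))
```

If this sketch is right, what changes with n is the spread of the estimate around the true value, not its typical size.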

This being said, I have the following question:

Suppose someone wants to measure how many baskets I can score in basketball from the three-point line. Since I am not a basketball player, I am likely to miss a lot of shots. However, suppose I get lucky and score on 4 of my first 5 shots. In this situation, my variance would be quite small. But as I keep shooting, I start to miss more baskets, and my variance increases.

Thus, in this case, a small number of data points might underestimate the true variance.
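
The 5-shot case can be worked out exactly. The sketch below assumes a hypothetical true success probability `p_true = 0.2` (the post does not state one, so this number is purely illustrative). With 0/1 data, the sample variance depends only on the number of made shots `k`, via `k * (n - k) / (n * (n - 1))`, so every possible outcome can be listed together with its binomial probability.

```r
p_true <- 0.2                      # hypothetical true success probability (assumption)
n <- 5                             # number of shots
k <- 0:n                           # possible numbers of made shots

s2 <- k * (n - k) / (n * (n - 1))  # sample variance when k of n shots go in
prob <- dbinom(k, n, p_true)       # probability of each k
true_var <- p_true * (1 - p_true)

print(data.frame(made = k, sample_var = s2, prob = round(prob, 4)))

expected_s2 <- sum(prob * s2)             # average of the sample variance over all outcomes
p_under <- sum(prob[s2 < true_var])       # chance the sample variance falls below the truth
p_over  <- sum(prob[s2 > true_var])       # chance it falls above the truth

cat("true variance:", true_var, "\n")
cat("expected sample variance:", expected_s2, "\n")
cat("P(underestimate):", round(p_under, 4), " P(overestimate):", round(p_over, 4), "\n")
```

Under this assumption, a small sample can land on either side of the true variance, and the lucky 4-of-5 streak gives the same sample variance as a 1-of-5 streak.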

So, can someone please explain why the variance calculated from a small number of data points is always believed to be much larger than the actual variance, when in fact it could be smaller than the actual variance?

Thanks!

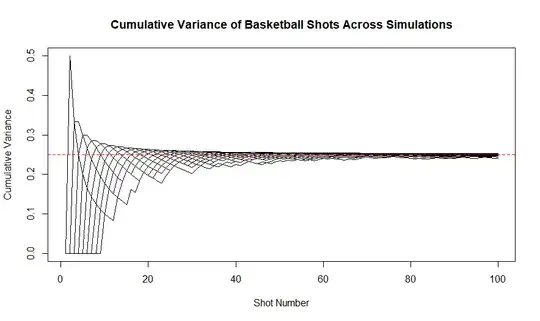

EXTRA: An R simulation which shows the cumulative sample variance of 100 basketball shots with success probability 0.5 (i.e. the variance of the first n shots, then the first n+1 shots, then the first n+2 shots, etc.). The entire simulation is repeated 100 times and all runs are plotted together. Note that the variance after the first shot is always 0 (I am not sure if this is correct).

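    # Simulation settings
    n_shots <- 100        # shots per simulated run
    prob_success <- 0.5   # probability of scoring on each shot
    n_simulations <- 100  # number of independent runs

    # One row per cumulative shot count, one column per run
    cumulative_variances <- matrix(nrow = n_shots, ncol = n_simulations)

    set.seed(123)

    for (i in 1:n_simulations) {
      shots <- rbinom(n_shots, 1, prob_success)
      # Sample variance of the first j shots; var() gives NA for j = 1,
      # so 0 is substituted by hand for the first shot
      cumulative_variances[, i] <- sapply(1:n_shots, function(j) if (j > 1) var(shots[1:j]) else 0)
    }

    # Plot the first run, then overlay the remaining runs
    plot(1:n_shots, cumulative_variances[, 1], type = "l", ylim = range(cumulative_variances),
         xlab = "Shot Number", ylab = "Cumulative Variance",
         main = "Cumulative Variance of Basketball Shots Across Simulations")

    for (i in 2:n_simulations) {
      lines(1:n_shots, cumulative_variances[, i])
    }

    # Reference line at the true variance p * (1 - p) = 0.25
    abline(h = 0.25, col = "red", lty = 2)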
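
On the first-shot value I was unsure about: a small check suggests the 0 comes from my own `else 0` branch rather than from R. As far as I can tell, `var()` divides by n - 1, so it returns `NA` for a single observation. The helper `pop_var` below is a hypothetical function of mine (not part of base R) that divides by n instead, and that version does give 0.

```r
one_shot <- c(1)

# Base R sample variance (n - 1 denominator) is undefined for one observation
s2_one <- var(one_shot)
print(s2_one)            # NA

# Hypothetical helper: "population" variance with an n denominator
pop_var <- function(x) mean((x - mean(x))^2)
pv_one <- pop_var(one_shot)
print(pv_one)            # 0
```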