This is a follow-up to my other question here. Define ${\mathfrak a}:= (T-N-1)/2 \in {\mathbb N}$ for $T > N+1$, where both $N,T \in {\mathbb N}$. See the linked question for the definition of the prefactor ${\mathfrak P}_{N,T}$. As explained there, the Laplace transform of the marginal pdf of the eigenvalues of real Wishart matrices reads:

\begin{eqnarray} E\left[ e^{-s \lambda} \right] = \frac{{\mathfrak P}_{N,T}}{N} 2^{\binom{N+1}{2} + N {\mathfrak a}} \cdot \sum\limits_{J=1}^N \prod\limits_{j=1}^N \left( \partial_j + \cdots + \partial_N \right)^{\mathfrak a} \cdot \prod\limits_{1 \le i < j \le N} \left( \partial_i + \cdots + \partial_{j-1} \right) \cdot \left. \frac{1}{x_1 x_2 \cdots x_N} \right|_{x_j \rightarrow j + 2 s 1_{j \ge J}} \tag{1} \end{eqnarray}

Using the techniques from the linked question, we obtained a closed-form result for $N=3$ and then inverted it to obtain the marginal pdf of the eigenvalues, $\rho_3(\lambda)$. It reads:

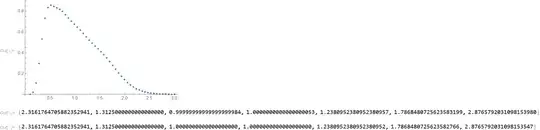

\begin{eqnarray}
&&\rho_3(\lambda) = \frac{T}{2} \frac{{\mathfrak P}_{N,T}}{N} 2^{\binom{N+1}{2} + N {\mathfrak a}} \sum\limits_{\begin{array}{l} k_1=0,\cdots,{\mathfrak a} \\ k_2=0,\cdots,2 {\mathfrak a} - k_1 \\ \theta=1,2 \end{array}} \binom{{\mathfrak a}}{k_1} \binom{2{\mathfrak a}-k_1}{k_2} \left( \right. \\
&& \left. (-1)^{3 {\mathfrak a}+3} (k_2+3-\theta)!(3{\mathfrak a}-k_1-k_2)! \underline{\sum\limits_{l={\mathfrak a}}^{k_1+\theta} \binom{k_1+\theta}{l} {\mathfrak A}_{k_1,k_2}^{(\theta)} \cdot \left( - \frac{T \lambda}{2}\right)^l }\cdot e^{-\frac{1}{2} \lambda T} + \right. \\
&& \left. (-1)^{3 {\mathfrak a}+3} (k_1+\theta)! (3{\mathfrak a}-k_1-k_2)! \underline{\sum\limits_{l={\mathfrak a}}^{k_2+3-\theta} \binom{k_2+3-\theta}{l} {\bar {\mathfrak A}}_{k_1,k_2}^{(\theta)} \cdot \left( - \frac{T \lambda}{2}\right)^l }\cdot e^{-\frac{2}{2} \lambda T} + \right. \\
&& \left. (-1)^{k_2+\theta+1} (k_1+\theta)! (3{\mathfrak a}-k_1-k_2)! \underline{\sum\limits_{l={\mathfrak a}}^{k_2+3-\theta} \binom{k_2+3-\theta}{l} (1+3 {\mathfrak a}-k_1-k_2)^{(k_2+3-\theta-l)} \cdot \left( - \frac{T \lambda}{2}\right)^l }\cdot e^{-\frac{2}{2} \lambda T} \right. \\
&& \left. \right) \tag{2}
\end{eqnarray}

Here $x^{(n)}$ denotes the Pochhammer symbol (rising factorial). The coefficients ${\mathfrak A}_{k_1,k_2}^{(\theta)}$ and ${\bar {\mathfrak A}}_{k_1,k_2}^{(\theta)}$, which also depend on $l$, are the derivatives evaluated in the code below, namely ${\mathfrak A}_{k_1,k_2}^{(\theta)} = \left. \partial_z^{k_1+\theta-l} \left[ (z-2)^{-(k_2+4-\theta)} (z-3)^{-(1+3{\mathfrak a}-k_1-k_2)} \right] \right|_{z=1}$ and ${\bar {\mathfrak A}}_{k_1,k_2}^{(\theta)} = \left. \partial_z^{k_2+3-\theta-l} \left[ (z-1)^{-(1+k_1+\theta)} (z-3)^{-(1+3{\mathfrak a}-k_1-k_2)} \right] \right|_{z=2}$.

Below is a Mathematica code snippet that performs the usual sanity checks, i.e. it verifies that the (positive) moments of the pdf in $(2)$ are correct, and then plots the pdf for different values of $T$, colored from violet to red in ascending order of $T$. Here we go:

(*The same as above for N=3.*)
(*Pfct[NN, T] is the prefactor P_{N,T}; it is defined in the linked question.*)
Clear[mLpTf]; NN = 3; k1 =.; lmb =.; k2 =.; k3 =.; s =.; T =.;
mLpTf[T_] :=
  With[{aa = (T - NN - 1)/2},
   Pfct[NN, T]/NN 2^(Binomial[NN + 1, 2] + NN aa)
    Sum[Binomial[aa, k1] Binomial[2 aa - k1, k2] (
        (k1 + th)!/(1 + 2 s/T)^(k1 + 1 + th) (k2 + 3 - th)!/(2 + 2 s/T)^(k2 + 1 + 3 - th) (k3)!/(3 + 2 s/T)^(k3 + 1) +
        (k1 + th)!/(1)^(k1 + 1 + th) (k2 + 3 - th)!/(2 + 2 s/T)^(k2 + 1 + 3 - th) (k3)!/(3 + 2 s/T)^(k3 + 1) +
        (k1 + th)!/(1)^(k1 + 1 + th) (k2 + 3 - th)!/(2)^(k2 + 1 + 3 - th) (k3)!/(3 + 2 s/T)^(k3 + 1)) /.
      k3 :> 3 aa - k1 - k2, {k1, 0, aa}, {k2, 0, 2 aa - k1}, {th, 1, 2}]];

(*Sanity check. Do positive moments match?*)
ll = Table[
   Normal@Series[mLpTf[NN + 1 + 2 Xi], {s, 0, 4}], {Xi, 0, 10}];
CoefficientList[ll, s] -
 Table[With[{T = NN + 1 + 2 Xi}, {1, -1,
    1/2! (1 + 4/T), -1/3! (1 + 22/T^2 + 12/T),
    1/4! (1 + 164/T^3 + 126/T^2 + 24/T)}], {Xi, 0, 10}]
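For reference, the comparison above rests on the standard expansion of a Laplace transform in terms of moments. Writing $m_p := E\left[\lambda^p\right]$ (a shorthand introduced here only for illustration) and assuming these moments exist, the coefficient of $s^p$ in the series is $(-1)^p m_p/p!$, which is why the reference list alternates in sign and carries factorials:

\begin{eqnarray} E\left[ e^{-s \lambda} \right] = \sum\limits_{p=0}^{\infty} \frac{(-s)^p}{p!} m_p = 1 - m_1 s + \frac{m_2}{2!} s^2 - \frac{m_3}{3!} s^3 + \frac{m_4}{4!} s^4 + O(s^5) \end{eqnarray}

For $N=3$ the values used in the code are $m_1 = 1$, $m_2 = 1 + 4/T$, $m_3 = 1 + 12/T + 22/T^2$ and $m_4 = 1 + 24/T + 126/T^2 + 164/T^3$.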

(*Invert Laplace transform.*)
Clear[mrho]; a1 =.; a2 =.; a3 =.;
mrho[T_, lmb_] :=
  With[{aa = (T - NN - 1)/2},
   T/2 Pfct[NN, T]/NN 2^(Binomial[NN + 1, 2] + NN aa)
    Sum[Binomial[aa, k1] Binomial[2 aa - k1, k2] (
       (-1)^(3 aa + 3) (k2 + 3 - th)! (3 aa - k1 - k2)!
        Sum[Binomial[k1 + th, l]
          (D[1/((a1 - 2)^(1 + k2 + 3 - th) (a1 - 3)^(1 + 3 aa - k1 - k2)),
             {a1, k1 + th - l}] /. a1 :> 1) (-T/2 lmb)^l,
         {l, aa, k1 + th}] E^(-(1/2) 1 lmb T) +
       (-1)^(3 aa + 3) (k1 + th)! (3 aa - k1 - k2)!
        Sum[Binomial[k2 + 3 - th, l]
          (D[1/((a2 - 1)^(1 + k1 + th) (a2 - 3)^(1 + 3 aa - k1 - k2)),
             {a2, k2 + 3 - th - l}] /. a2 :> 2) (-T/2 lmb)^l,
         {l, aa, k2 + 3 - th}] E^(-(1/2) 2 lmb T) +
       (-1)^(-k2 - th - 1) (k1 + th)! (3 aa - k1 - k2)!
        Sum[Binomial[k2 + 3 - th, l]
          Pochhammer[1 + 3 aa - k1 - k2, k2 + 3 - th - l] (-T/2 lmb)^l,
         {l, aa, k2 + 3 - th}] E^(-(1/2) 2 lmb T)),
     {k1, 0, aa}, {k2, 0, 2 aa - k1}, {th, 1, 2}]];

(*Test whether moments match.*)
Xi = RandomInteger[{1, 15}];
mrho[NN + 1 + 2 Xi, lmb] // Expand;
Table[Integrate[lmb^p (%), {lmb, 0, Infinity}], {p, 0, 4}] -
 With[{T = NN + 1 + 2 Xi}, {1, 1, 1 + 4/T, 1 + 22/T^2 + 12/T,
   1 + 164/T^3 + 126/T^2 + 24/T}]
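The reference moments used in the two checks above can also be cross-checked by direct simulation. The following is only a rough sketch: it assumes the eigenvalues are those of $X X^T/T$, with $X$ an $N \times T$ matrix of i.i.d. standard normal entries (the normalization under which the first moment equals one), and the names `nMC`, `tMC`, `nSamples`, `eigs`, `mcMoments` and `exactMoments` are illustrative and do not appear in the code above.

```mathematica
(*Monte Carlo cross-check of the N=3 reference moments. A sketch only.*)
(*Assumption: eigenvalues of X.Transpose[X]/T, X an N x T standard normal matrix.*)
SeedRandom[1234];
nMC = 3; tMC = 10; nSamples = 20000;
(*The squared singular values of X are the eigenvalues of X.Transpose[X].*)
eigs = Flatten@Table[
    SingularValueList[
       RandomVariate[NormalDistribution[], {nMC, tMC}]]^2/tMC,
    {nSamples}];
(*Empirical moments E[lambda^p] for p = 1..4, pooled over all eigenvalues.*)
mcMoments = Table[Mean[eigs^p], {p, 1, 4}];
(*Reference values for N=3, as used in the checks above.*)
exactMoments = N[{1, 1 + 4/tMC, 1 + 22/tMC^2 + 12/tMC,
    1 + 164/tMC^3 + 126/tMC^2 + 24/tMC}];
(*Differences should be small, up to statistical noise.*)
mcMoments - exactMoments
```

Increasing `nSamples` should shrink the differences roughly like the inverse square root of the sample count. Higher moments converge more slowly.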

(*Plot the marginal densities.*)
num = 10;
lmb = Array[# &, 50, {0, 5}];
ListPlot[Table[
   Transpose[{lmb, mrho[NN + 1 + 2 Xi, lmb]}], {Xi, 0, num}],
 PlotStyle -> Table[ColorData["Rainbow", i/(num)], {i, 0, num}],
 Joined :> True, PlotMarkers -> Automatic,
 AxesLabel :> {"lambda", "pdf"}, PlotLabel :> "N=" <> ToString[NN]]

Clearly the result is correct, because the spectral moments match those computed in a different way. However, it does not match equation (1.8) on page 7 of

Bun, Joël; Bouchaud, Jean-Philippe; Potters, Marc, Cleaning large correlation matrices: tools from random matrix theory, Phys. Rep. 666, 1-109 (2017). ZBL1359.15031.

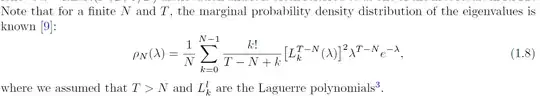

where the authors give a result that is supposed to be valid for all values of $N$ and $T$, a result that is incorrect (for various reasons-- firstly, their function can be bi-modal which is not how it should be and secondly it is not even normalized to unity). The authors give the following expression for the marginal pdf of the eigenvalues:

In view of all this, my question is twofold. First, where does the result in the aforementioned paper come from, and can it be salvaged? Second, using our approach, can we come up with a closed-form expression for the marginal pdf of the eigenvalues for all values of $N$ and $T$?