I have a question about Sheldon Axler's proof of Theorem 5.21 in his book *Linear Algebra Done Right*.

I checked this previous post and had no trouble understanding the substitution step, where $T$ is substituted for $z$.

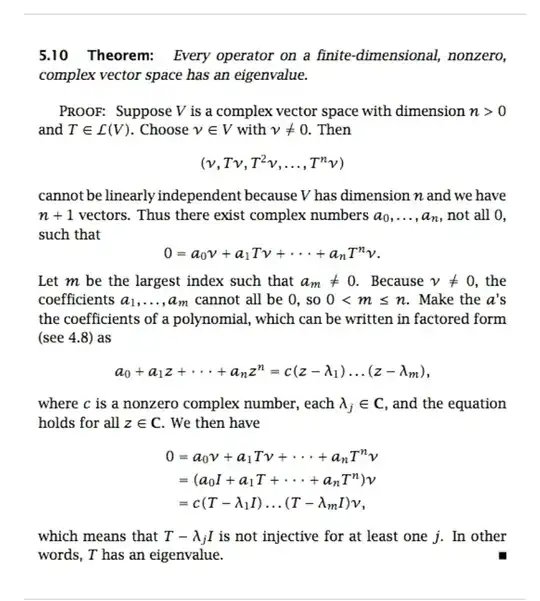

This picture is from an older edition of the textbook, but it's the same proof.

My question is:

In the second line, Axler appears to choose an arbitrary nonzero vector $v \in V$ and to show that at least one $(T - \lambda_j I)$ satisfies $(T - \lambda_j I)v = \vec{0}$.

In other words, the proof appears to argue that for every nonzero vector $v \in V$, at least one $(T - \lambda_j I)$ satisfies $(T - \lambda_j I)v = \vec{0}$.

But this is clearly not true: there are plenty of counterexamples even on $\mathbb{C}^2$.

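For example, let $T(x, y) = ((3+5i)x, (1+2i)y)$ and choose $v = (1+i, 1-i)$. The eigenvalues of $T$ are $3+5i$ and $1+2i$, but neither $(T - (3+5i)I)v$ nor $(T - (1+2i)I)v$ is $\vec{0}$, so no single eigenvalue works for this particular $v$.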
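A quick numerical check of this counterexample (my own sketch; `T`, `shifted`, `LAM1` and `LAM2` are just names I chose, and the operator is hard-coded as the diagonal map above). Since $T$ is diagonal with distinct entries, $3+5i$ and $1+2i$ are its only eigenvalues, so checking both covers every eigenvalue:

```python
# Sketch: check whether v = (1+i, 1-i) is an eigenvector of the diagonal
# operator T(x, y) = ((3+5i)x, (1+2i)y) on C^2 from the example above.
# Python writes the imaginary unit as j.

LAM1 = 3 + 5j  # first diagonal entry, an eigenvalue of T
LAM2 = 1 + 2j  # second diagonal entry, the other eigenvalue of T


def T(v):
    """Apply the diagonal operator T to v = (x, y)."""
    x, y = v
    return (LAM1 * x, LAM2 * y)


def shifted(lam, v):
    """Return (T - lam*I)v."""
    tx, ty = T(v)
    x, y = v
    return (tx - lam * x, ty - lam * y)


v = (1 + 1j, 1 - 1j)

# T is diagonal with distinct entries, so LAM1 and LAM2 are its only
# eigenvalues; v is an eigenvector iff one of these results is (0, 0).
for lam in (LAM1, LAM2):
    print(f"(T - {lam} I)v =", shifted(lam, v))
```

Both results are nonzero: $(T - (3+5i)I)v = (0, -5-i)$ and $(T - (1+2i)I)v = (-1+5i, 0)$.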

I know this proof is about the **existence** of a particular (eigenvalue, eigenvector) pair, not a claim that every nonzero vector is an eigenvector for some eigenvalue.
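To make the distinction explicit, here are the two statements with quantifiers (my own paraphrase, not Axler's wording; in the first line the $\lambda_j$ are the roots the proof obtains from the chosen $v$):

$$
\text{(my reading of the second line)}\quad \forall v \in V \setminus \{0\}\;\; \exists j \in \{1, \dots, m\} :\; (T - \lambda_j I)v = \vec{0}
$$

$$
\text{(what the theorem needs)}\quad \exists \lambda \in \mathbb{C}\;\; \exists v \in V \setminus \{0\} :\; (T - \lambda I)v = \vec{0}
$$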

But the second line of the proof appears to claim otherwise.