Let $\mathcal P$ be the set of probability mass functions (pmfs) on $\mathbb Z_{>0}$, i.e. for $p=(p(x))_{x\in\mathbb Z_{>0}}\in\mathcal P$ we have $p\ge 0$ and $\sum_{x=1}^\infty p(x)=1$. Let $H(p)=-\sum_{x=1}^\infty p(x)\ln(p(x))$ be the entropy and $E(p)=\sum_{x=1}^\infty p(x)x$ the expectation. Further, let $s(p)=|\{x\in\mathbb Z_{>0}:p(x)>0\}|$ be the size of the support of $p$.

For $s\in\mathbb Z_{>0}$ let $\mathcal P_s=\{p\in\mathcal P:s(p)=s\}$, and further let $\mathcal P_\infty=\{p\in\mathcal P:s(p)=\infty,E(p)<\infty\}$.

Question: What are the best bounds for $r(s)=\sup_{p\in\mathcal P_s}H(p)/E(p)$?

Motivation: I want good upper bounds for the entropy in terms of the support size and the expectation.

Background: The question only makes sense if we consider strictly positive random variables, otherwise $r(s)$ would be infinite, which can be seen by taking limits towards the one-point mass on $0$.

For this question we can assume that $p$ is supported on $\{1,\dots,s\}$, respectively $\mathbb Z_{>0}$ for $s=\infty$, and non-increasing, since ordering the weights minimizes the expectation while preserving the entropy.

For $s<\infty$ we have $r(s)\le\ln(s)$ because $H(p)\le\ln(s)$ is maximal for the uniform distribution and $E(p)\ge 1$. Of course, with the uniform distribution we also get a lower bound, namely $r(s)\ge 2\ln(s)/(s+1)$, which is not tight because the entropy is stationary at the uniform distribution while the expectation is not. Also, we know that the supremum is attained due to continuity. Of course, identifying the maximizers would be highly desirable.

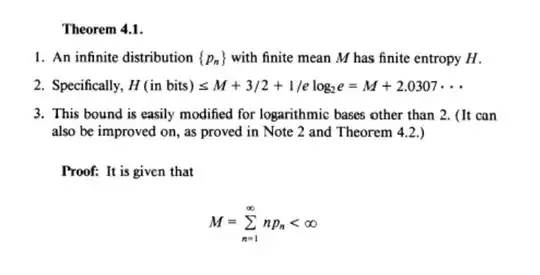

For $s=\infty$ it is known that $H(p)=E(p)=\infty$ is possible, as discussed here. As can be seen here, there are also quite a few follow-up questions. Unfortunately, I am not convinced by the given answer, and am still not aware of an answer to the question if $H(p)<\infty$ for all $p\in\mathcal P_\infty$. Should this be true, we may of course still have $r(\infty)=\infty$, and in any case explicit maximizing sequences would be highly desirable.

Finally, a similar question regarding a lower bound can be found here.

Update: A limiting argument directly yields that $r(s+1)\ge r(s)$. As discussed here, we have $H(p)\le\ln(E(p)+0.5)+1$ given by Theorem 8 in this preprint. The map $f(x)=(\ln(x+0.5)+1)/x$ is decreasing on $[1,\infty)$, with $f(x)=1$ for $x\approx 1.858$. Since we can assume that $p$ is non-increasing, we have $E(p)\le\frac{1+s}{2}$.

We clearly have $r(1)=0$ and a discussion of $f(p_1)=\frac{H(p_1)}{p_1+2(1-p_1)}$ gives the maximizer $p_1=\frac{1}{2}(\sqrt 5-1)\approx 0.618$, the expectation $E(p)\approx 1.382$ and $r(2)\approx 0.481$. For $s=3$ we fix $\mu\in(1,2]$ and consider $p_1\ge p_2\ge p_3\ge 0$ with $p_1+p_2+p_3=1$ and $E(p)=\mu$. Set $p_3=x$ and observe that $p_1=2-\mu+x$, $p_2=\mu-1-2x$, and that $\max(0,\frac{2}{3}\mu-1)\le x\le\frac{1}{3}(\mu-1)$. The derivative $\ln(\frac{p_2^2}{p_1p_3})$ of the entropy on this restriction is decreasing with exactly one root $x=\frac{1}{2}\mu-\frac{1}{3}-\frac{1}{6}\sqrt{4-3(2-\mu)^2}$. Numerical evaluation gives \begin{align*} p_1&\approx 0.544\\ p_2&\approx 0.296\\ p_3&\approx 0.161\\ E(p)&\approx 1.617\\ r(3)&\approx 0.609. \end{align*}