I was going through the derivation of the subgradient of the nuclear norm of a matrix in an old homework from a Convex Optimization course (CMU Convex Optimization, Homework 2, Problem 2).

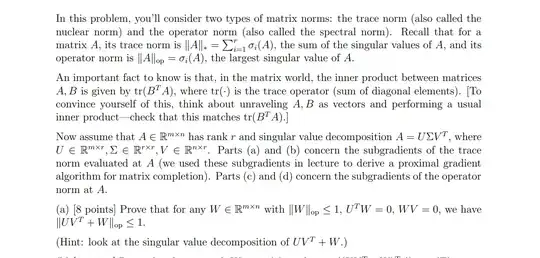

The setup is as follows: $A$ is an $m \times n$ matrix ($A \in \mathbb{R}^{m \times n}$) of rank $r$ with compact singular value decomposition $A = U \Sigma V^T$, where $U \in \mathbb{R}^{m \times r}$, $\Sigma \in \mathbb{R}^{r \times r}$, and $V \in \mathbb{R}^{n \times r}$, so that $U^T U = V^T V = I_r$.
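To make the shapes concrete, here is a small NumPy sketch of this compact SVD. The dimensions, the random rank-$r$ test matrix, the tolerance, and the helper name `compact_svd` are illustrative assumptions, not part of the homework.

```python
import numpy as np

def compact_svd(A, tol=1e-10):
    """Hypothetical helper: return U (m x r), s (length r), V (n x r) with A = U diag(s) V^T."""
    U_full, s_full, Vt_full = np.linalg.svd(A, full_matrices=False)
    # Numerical rank: count singular values above a relative tolerance.
    r = int(np.sum(s_full > tol * s_full[0]))
    return U_full[:, :r], s_full[:r], Vt_full[:r, :].T

rng = np.random.default_rng(0)
m, n, r = 6, 5, 3
# Product of m x r and r x n Gaussian factors: rank r with probability one.
A = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))

U, s, V = compact_svd(A)
Sigma = np.diag(s)
print(U.shape, Sigma.shape, V.shape)    # (6, 3) (3, 3) (5, 3)
print(np.allclose(A, U @ Sigma @ V.T))  # True
# Orthonormal columns: U^T U = I_r and V^T V = I_r.
print(np.allclose(U.T @ U, np.eye(r)), np.allclose(V.T @ V, np.eye(r)))
```

Note that $U U^T$ and $V V^T$ are generally not identities (they are $6 \times 6$ and $5 \times 5$ projectors of rank 3 here), which is why only one-sided identities are available.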

The first part of the proof states that for any $W \in \mathbb{R}^{m \times n}$ with $\Vert W \Vert_{op} \leq 1$, $U^T W = 0$, and $WV = 0$, we have $\Vert UV^T + W \Vert_{op} \leq 1$. The hint suggests looking at the singular value decomposition of $UV^T + W$ to prove this statement.
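Before proving the claim, it can be sanity-checked numerically. The sketch below assumes NumPy, uses random orthonormal $U$ and $V$ from a reduced QR factorization as stand-ins for the singular vectors of $A$, and builds admissible $W$ by projecting a random matrix onto the orthogonal complements of $\operatorname{range}(U)$ and $\operatorname{range}(V)$. The helper `make_W` and all dimensions are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, r = 7, 6, 3

# Random orthonormal U (m x r) and V (n x r), standing in for singular vectors of A.
U, _ = np.linalg.qr(rng.standard_normal((m, r)))
V, _ = np.linalg.qr(rng.standard_normal((n, r)))

def make_W(U, V, rng, scale=1.0):
    """Hypothetical helper: a W with U^T W = 0, W V = 0 and ||W||_op = scale."""
    m, n = U.shape[0], V.shape[0]
    P_U = np.eye(m) - U @ U.T  # orthogonal projector onto range(U)^perp
    P_V = np.eye(n) - V @ V.T  # orthogonal projector onto range(V)^perp
    W = P_U @ rng.standard_normal((m, n)) @ P_V
    # For a 2-D array, ord=2 gives the largest singular value (operator norm).
    return scale * W / np.linalg.norm(W, 2)

for trial in range(500):
    W = make_W(U, V, rng, scale=rng.uniform(0.0, 1.0))
    assert np.allclose(U.T @ W, 0) and np.allclose(W @ V, 0)
    # Small tolerance for floating-point round-off.
    assert np.linalg.norm(U @ V.T + W, 2) <= 1 + 1e-10
print("claim held in all trials")
```

A passing run is only evidence, not a proof, but it confirms that the constraints $U^T W = 0$ and $WV = 0$ are exactly what the projection construction enforces.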

In my first attempt, I noticed that $U^T(UV^T + W)V = I$ (since $U^T U = V^T V = I$ and $U^T W = 0$), and concluded that $UV^T + W = (U^T)_{left}^{-1} I V_{right}^{-1}$, where $(U^T)_{left}^{-1}$ and $V_{right}^{-1}$ are a left inverse of $U^T$ and a right inverse of $V$, respectively, so that the singular values of $UV^T + W$ must all be $1$. However, this is wrong because $U^T$ and $V$ need not have such left and right inverses.
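A quick numerical experiment shows both halves of this: the identity $U^T(UV^T + W)V = I$ holds, yet the singular values of $UV^T + W$ are not all $1$. This is a sketch assuming NumPy, random orthonormal $U$ and $V$ from a reduced QR factorization, and a $W$ scaled (arbitrarily) to operator norm $0.5$.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n, r = 7, 6, 3
U, _ = np.linalg.qr(rng.standard_normal((m, r)))
V, _ = np.linalg.qr(rng.standard_normal((n, r)))

# Build W with U^T W = 0 and W V = 0, then scale it to operator norm 0.5.
P_U = np.eye(m) - U @ U.T
P_V = np.eye(n) - V @ V.T
W = P_U @ rng.standard_normal((m, n)) @ P_V
W = 0.5 * W / np.linalg.norm(W, 2)

B = U @ V.T + W
print(np.allclose(U.T @ B @ V, np.eye(r)))  # True: the observed identity holds
sv = np.linalg.svd(B, compute_uv=False)     # singular values in descending order
print(np.round(sv, 4))  # r ones, then the nonzero singular values of W (largest 0.5)
print(np.linalg.matrix_rank(B))  # 6 = r + rank(W) here, so B != U I V^T
```

The identity only pins down how $UV^T + W$ acts between $\operatorname{range}(V)$ and $\operatorname{range}(U)$; it says nothing about the part of the matrix living on the orthogonal complements, which is where $W$ sits.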

Can anyone point me in the right direction for proving this first part using the singular value decomposition, as suggested? Any help is much appreciated, and apologies if the notation is messy!