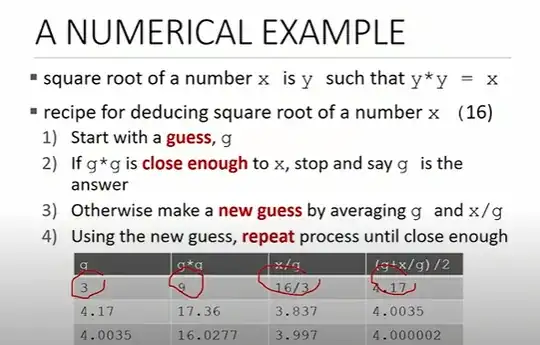

I was watching a video lecture from the series Introduction to Computer Science and Programming using Python (see here). It presents an algorithm for computing the square root of a number $x$. It starts with an initial guess $g$, and then taking the average $$\frac{g+\tfrac xg}{2},$$ and repeating. Why did they not take a simple average like $\frac{g+x}{2}$? Here is a screenshot:
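
To make the difference concrete, here is a minimal Python sketch that runs both update rules side by side. The function names `heron_step`, `naive_step` and `iterate`, the starting guess of 1.0 and the fixed count of 20 iterations are illustrative assumptions, not the lecture's code. The sketch shows what each rule settles on. If $g$ stops changing under $g \mapsto \frac{g + x/g}{2}$, then $g = x/g$, so $g^2 = x$. If it stops changing under the simple average $g \mapsto \frac{g + x}{2}$, then $g = x$, so that rule just converges back to $x$ itself.

```python
def heron_step(g: float, x: float) -> float:
    """Update from the lecture: average the guess g with x / g."""
    return (g + x / g) / 2


def naive_step(g: float, x: float) -> float:
    """The 'simple average' from the question: average the guess g with x."""
    return (g + x) / 2


def iterate(step, x: float, g: float = 1.0, n: int = 20) -> float:
    """Apply `step` n times, starting from the initial guess g (assumed 1.0)."""
    for _ in range(n):
        g = step(g, x)
    return g


if __name__ == "__main__":
    x = 25.0
    print("average of g and x/g:", iterate(heron_step, x))  # approaches 5.0, i.e. sqrt(25)
    print("average of g and x:  ", iterate(naive_step, x))  # approaches 25.0, i.e. x itself
```

With the simple average, the distance to $x$ halves on every step, so the guess creeps toward $x$ rather than $\sqrt{x}$. With $\frac{g + x/g}{2}$, a guess that is too large makes $x/g$ too small (and vice versa), so averaging the two pulls the guess toward $\sqrt{x}$.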

Also, any resources that could help me improve my mathematical understanding for tackling this kind of problem would be really helpful.