Define $f(x, y) = \sqrt{|x + y|} - \sqrt{|x-y|}$. Then we want to prove that

\begin{align*}

\mathbb{E}[f(X, Y)] \ge 0

\end{align*}

where $X, Y$ independent and uniformly distributed over the set of values $\{x_1, \cdots, x_n\}$.

[Remark 1: I know the IMO is motivated to use techniques only available at the secondary school level, but the probabilistic notation allows me to naturally represent a bunch of summation notations in a succinct form, and can be translated easily to the summation notation in the original problem.]

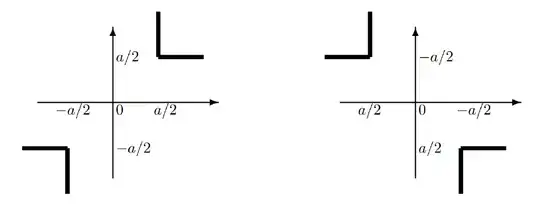

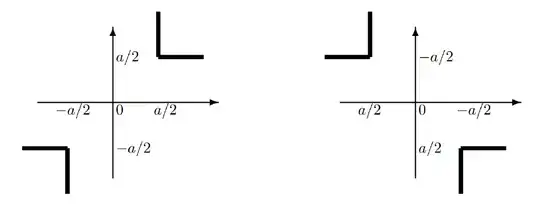

First, let's observe the region in the Cartesian plane where $f(x, y) \ge a$ and $f(x, y) \le -a$, respectively:

And therefore

\begin{align*}

\mathbb{P}(f(X, Y) \ge a) &= \mathbb{P}\left(X \ge \frac{a}{2}, Y \ge \frac{a}{2}\right) + \mathbb{P}\left(X \le -\frac{a}{2}, Y \le -\frac{a}{2}\right) \\

&= \mathbb{P}\left(X \ge \frac{a}{2}\right)^2 + \mathbb{P}\left(X \le -\frac{a}{2}\right)^2

\end{align*}

and

\begin{align*}

\mathbb{P}(f(X, Y) \le a) &= \mathbb{P}\left(X \ge \frac{a}{2}, Y \le -\frac{a}{2}\right) + \mathbb{P}\left(X \le -\frac{a}{2}, Y \ge \frac{a}{2}\right) \\

&= 2\mathbb{P}\left(X \ge \frac{a}{2}\right) \left(X \le -\frac{a}{2}\right)

\end{align*}

Then, we need the lemma that

\begin{align*}

\mathbb{E}[A] = \int_0^\infty [\mathbb{P}(A \ge a) - \mathbb{P}(A \le -a)]da

\end{align*}

which can be proved by noticing that $\mathbb{E}[A] = \mathbb{E}[A^+] - \mathbb{E}[A^-]$, where $A^+ = \max(A, 0)$ and $A^- = -\min(A, 0)$ and then applying the proof for the tail sum formula. Letting $A = f(X, Y)$,

\begin{align*}

\mathbb{E}[f(X, Y)] &= \int_0^\infty[\mathbb{P}(f(X, Y) \ge a) - \mathbb{P}(f(X, Y) \le -a)]da \\

&= \int_0^\infty\left[\mathbb{P}\left(X \ge \frac{a}{2}\right) - \mathbb{P}\left(X \le -\frac{a}{2}\right)\right]^2da \\

&\ge 0

\end{align*}

Remark 2: Note this proof works for any $f(x, y) = g(|x+y|) - g(|x - y|)$ for non-decreasing $g: \mathbb{R}_{\ge 0} \rightarrow \mathbb{R}$ and general distributions for $X, Y$. For example, we also have the result

\begin{align*}

\sum_{i,j} w_i w_j \log(1 + |x_i - x_j|) \le \sum_{i,j} w_i w_j \log(1 + |x_i + x_j|)

\end{align*}

for weights $w_1, \cdots, w_n \ge 0$.

Remark 3: Completely forgot to answer the original problem. Note that if we choose $g(x) = \sqrt{x}$ and define $X, Y$ to be random variables distributed over $x_1, \cdots, x_n$ with weights $w_1, \cdots, w_n \ge 0$, then we effectively get, for $\mathbf{M} = [M_{ij}]_{ij}$,

\begin{align*}

\mathbf{w}^\intercal \mathbf{M}\mathbf{w} \ge 0

\end{align*}

for $\mathbf{w} \in \mathbb{R}^n_{\ge 0}$; we need $\mathbf{w} \in \mathbb{R}^n$ in order to get genuine positive semidefiniteness. But it appears that if we replace $\mathbb{P}$ with quasiprobability distribution (e.g. negative measures are allowed), everything in the proof still seems to go through.