Background

In computers, the limited floating-point precision imposed by a fixed storage size (e.g. 64 bits) can cause problems. I am trying to understand which approaches are available, and which are used in practice, to cope with or overcome these precision limitations.
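A minimal illustration of those limits, assuming standard CPython, whose `float` is an IEEE 754 binary64 double (the same format as NumPy's `float64`). It prints the machine epsilon and two classic rounding effects:

```python
import sys

# float64 (IEEE 754 double precision): 53 significant bits, about 15-16 decimal digits
eps = sys.float_info.epsilon   # distance from 1.0 to the next larger float64
print(eps)                     # 2.220446049250313e-16

# An increment smaller than half that gap is rounded away entirely
print(1.0 + eps / 4 == 1.0)    # True

# Decimal fractions such as 0.1 have no exact binary representation
print(0.1 + 0.2 == 0.3)        # False
print(0.1 + 0.2)               # 0.30000000000000004

# Range limits: results above max overflow to inf; below min, precision degrades (subnormals)
print(sys.float_info.max)      # 1.7976931348623157e+308
print(sys.float_info.min)      # 2.2250738585072014e-308 (smallest normal float64)
```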

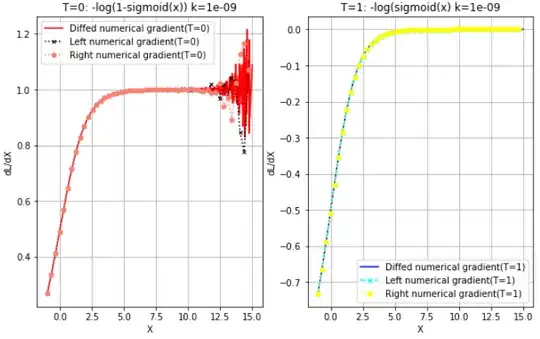

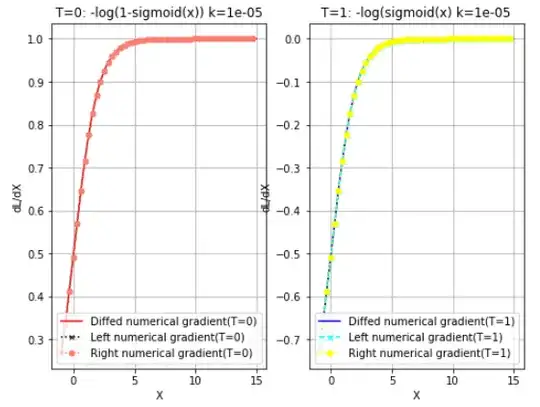

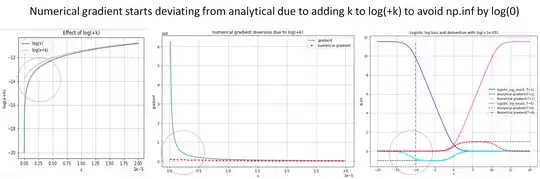

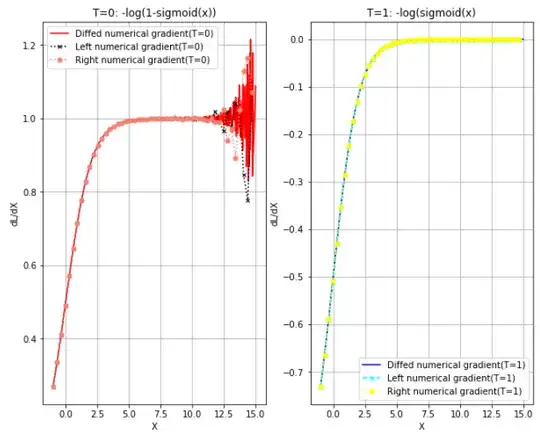

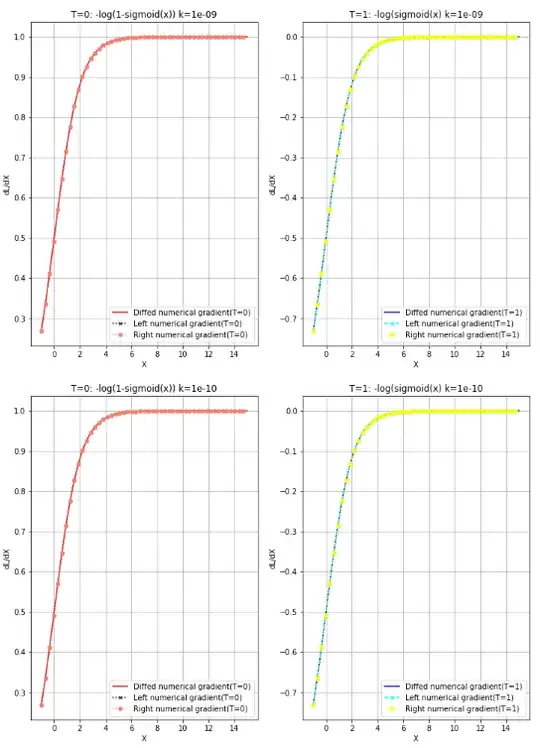

The original issue is the question "numpy - why numerical gradient log(1-sigmoid(x)) diverges but log(sigmoid(x)) does not?". While implementing a numerical gradient $\frac{f(x+k)-f(x-k)}{2k}$ of the logistic log loss function, I ran into unstable floating-point results. When $k$ becomes small, e.g. 1e-8, the numerical gradient of $\log(1-\operatorname{sigmoid}(x))$ starts diverging while that of $\log(\operatorname{sigmoid}(x))$ does not.
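A sketch of why the two cases behave differently, using x = 15 and k = 1e-9 as example values (the helper names `loss_t0_naive`, `loss_t0_stable` and `central_diff` are hypothetical). For large x, `sigmoid(x)` is stored as a float64 just below 1, so `1 - sigmoid(x)` is a small number that has lost many significant bits (catastrophic cancellation), and the central difference then divides that rounding noise by 2k. Rewriting the T=0 loss with the identity 1 - sigmoid(x) = 1/(1 + exp(x)) as NumPy's `np.logaddexp(0, x)` avoids the subtraction:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def loss_t0_naive(x):
    # -log(1 - sigmoid(x)): for large x, sigmoid(x) is a float64 just below 1,
    # so the subtraction 1 - sigmoid(x) loses many significant bits
    return -np.log(1.0 - sigmoid(x))

def loss_t0_stable(x):
    # Same function rewritten: 1 - sigmoid(x) = 1 / (1 + exp(x)), hence
    # -log(1 - sigmoid(x)) = log(1 + exp(x)) = logaddexp(0, x); no subtraction from 1
    return np.logaddexp(0.0, x)

def central_diff(f, x, k):
    # Central difference (f(x+k) - f(x-k)) / 2k, as in the question
    return (f(x + k) - f(x - k)) / (2 * k)

x, k = 15.0, 1e-9
exact = sigmoid(x)   # analytical gradient sigmoid(x) - T with T = 0
err_naive = abs(central_diff(loss_t0_naive, x, k) - exact)
err_stable = abs(central_diff(loss_t0_stable, x, k) - exact)
print(f"naive error:  {err_naive:.3e}")
print(f"stable error: {err_stable:.3e}")
```

With these values the naive estimate is off by several percent or more, because the difference of the two computed values of `1 - sigmoid(x)` can only be a whole number of float64 steps near 1; the `logaddexp` form stays close to the analytical gradient. The T=1 loss does not suffer the same way because `log(sigmoid(x))` never subtracts a nearly equal number from 1.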

With relatively large values of $k$ such as 1e-5, the issue does not occur, at least within the plotted range of x.
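The behavior described above (very small $k$ diverging, $k$ = 1e-5 behaving well) fits the standard error model for central differences. A sketch, where $\delta$ is assumed to bound the absolute rounding error of each computed value of $f$ and $M$ to bound $|f'''|$ near $x$:

$$
\frac{f(x+k)-f(x-k)}{2k} = f'(x) + \frac{k^2}{6}f'''(\xi) + \frac{\delta_+ - \delta_-}{2k},\qquad \xi\in(x-k,\,x+k)
$$

$$
\left|\frac{f(x+k)-f(x-k)}{2k} - f'(x)\right| \le \frac{M k^2}{6} + \frac{\delta}{k},\qquad k^\ast = \left(\frac{3\delta}{M}\right)^{1/3}
$$

With $\delta \approx \varepsilon |f|$, $\varepsilon = 2^{-52} \approx 2.2\times10^{-16}$, and $|f|$, $M$ of order 1, $k^\ast$ is of order $\varepsilon^{1/3} \approx 6\times10^{-6}$, which is consistent with $k$ = 1e-5 working. For $-\log(1-\operatorname{sigmoid}(x))$, however, $1-\operatorname{sigmoid}(x)$ inherits an absolute error of order $\varepsilon$ from the rounded sigmoid, i.e. a relative error of roughly $\varepsilon/(1-\operatorname{sigmoid}(x)) \approx \varepsilon e^{x}$, and after the log that relative error becomes $\delta$. At $x = 15$, $k$ = 1e-9 this gives $\delta/k \approx 2.2\times10^{-16}\cdot 3.3\times10^{6}/10^{-9} \approx 0.7$, comparable to the gradient itself ($\approx 1$). For $-\log(\operatorname{sigmoid}(x))$, $\delta$ stays of order $\varepsilon$, so $\delta/k$ is only about $2\times10^{-7}$.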

The reason for making k smaller: to prevent log(0) from becoming np.inf, I add a small number u (e.g. 1e-5) inside the log, but log(x + 1e-5) makes the numerical gradient deviate from the analytical one. To minimize that impact, I tried to make it as small as possible, and that is when this issue started.
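One commonly used alternative to adding u is to never take the log of a rounded probability, and instead compute the loss directly from x. The sketch below relies on NumPy's `np.logaddexp`, which evaluates log(exp(a) + exp(b)) without overflow; `logistic_log_loss_stable` and `logistic_log_loss_gradient` are illustrative names, not part of the code in this post. It first shows the naive T=0 loss hitting log(0) at x = 40, then checks the stable form against the analytical gradient with a central difference:

```python
import numpy as np

def logistic_log_loss_stable(X, T):
    # Hypothetical helper: the same loss expressed in terms of X.
    #   log(sigmoid(x))     = -log(1 + exp(-x)) = -logaddexp(0, -x)
    #   log(1 - sigmoid(x)) = -log(1 + exp(x))  = -logaddexp(0,  x)
    # Neither term ever evaluates log(0), so no additive u is needed.
    return T * np.logaddexp(0.0, -X) + (1.0 - T) * np.logaddexp(0.0, X)

def logistic_log_loss_gradient(X, T):
    # Analytical gradient dL/dX = sigmoid(X) - T
    return 1.0 / (1.0 + np.exp(-X)) - T

# Naive form at x = 40: sigmoid(40) rounds to exactly 1.0, so the log sees 1 - 1.0 = 0
with np.errstate(divide="ignore"):
    naive_40 = -np.log(1.0 - 1.0 / (1.0 + np.exp(-40.0)))
stable_40 = logistic_log_loss_stable(40.0, 0.0)
print(naive_40, stable_40)     # inf 40.0

# Central-difference check of the stable loss against the analytical gradient
X = np.linspace(-20.0, 20.0, 1000)
k = 1e-6
max_errors = []
for T in (np.zeros_like(X), np.ones_like(X)):
    numeric = (logistic_log_loss_stable(X + k, T)
               - logistic_log_loss_stable(X - k, T)) / (2 * k)
    max_errors.append(np.max(np.abs(numeric - logistic_log_loss_gradient(X, T))))
print(max_errors)
```

Because the stable form is finite for every finite x, the gradient check no longer needs u, and k can be chosen purely from the truncation/rounding trade-off.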

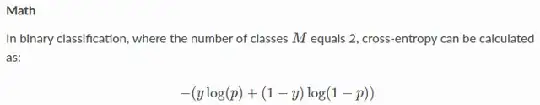

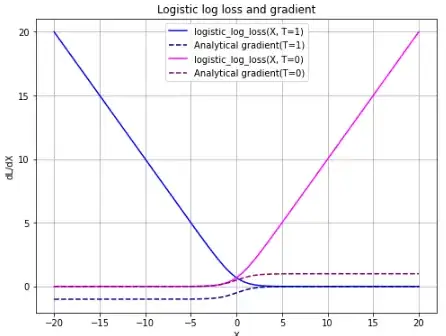

Logistic log loss functions

In the figure, y is the binary true/false label T and p is the activation sigmoid(x).
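For reference, the loss in the figure and its analytical gradient (the `Z - T` returned by `__logistic_log_loss_gradient` in the code below), written with $p = \operatorname{sigmoid}(x)$; the derivation only uses $\frac{dp}{dx} = p(1-p)$:

$$
L = -\bigl(T\log p + (1-T)\log(1-p)\bigr),\qquad p = \operatorname{sigmoid}(x) = \frac{1}{1+e^{-x}}
$$

$$
\frac{dL}{dx} = -\left(\frac{T}{p} - \frac{1-T}{1-p}\right)p(1-p) = -\bigl(T(1-p) - (1-T)p\bigr) = p - T
$$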

Question

I believe there are science and engineering fields that require high-precision calculations. I would like to understand:

- What kinds of numerical problems floating-point calculations run into.

- Which areas of mathematics to look into.

- What approaches are used to deal with limited precision/storage in computing.
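Typical approaches include reformulating expressions so that cancellation does not occur (e.g. `log1p`, `expm1`, `logaddexp`), using more precision (extended or arbitrary-precision arithmetic such as Python's `decimal` module), and compensated algorithms; the relevant field is numerical analysis, in particular floating-point error analysis and the conditioning and stability of algorithms. As one small example of the compensated approach, here is a sketch of Kahan summation compared with plain left-to-right addition, using `math.fsum` (correctly rounded) as the reference. The input data is made up for illustration:

```python
import math

def kahan_sum(values):
    total = 0.0
    c = 0.0                    # running compensation for lost low-order bits
    for v in values:
        y = v - c              # re-inject what was lost in the previous step
        t = total + y          # low-order bits of y may be rounded away here...
        c = (t - total) - y    # ...and are recovered here (algebraically 0)
        total = t
    return total

# 1.0 followed by 100 tiny terms, each below half the float64 spacing at 1.0
values = [1.0] + [1e-16] * 100

naive = 0.0
for v in values:               # plain loop on purpose: since Python 3.12 the
    naive += v                 # built-in sum() already compensates float sums

compensated = kahan_sum(values)
exact = math.fsum(values)      # correctly rounded reference
print(naive, compensated, exact)
```

The plain loop returns exactly 1.0 because every tiny term is rounded away on its own, while the compensated sum carries those bits forward and lands close to the `math.fsum` reference.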

Code

Logistic log loss and analytical gradient.

```python
import inspect
from itertools import product

import numpy as np
import matplotlib.pyplot as plt

%matplotlib inline


def __sigmoid(X):
    return 1 / (1 + np.exp(-1 * X))


def __logistic_log_loss(X: np.ndarray, T: np.ndarray):
    return -(T * np.log(__sigmoid(X)) + (1-T) * np.log(1-__sigmoid(X)))


def __logistic_log_loss_gradient(X, T):
    Z = __sigmoid(X)
    return Z-T


N = 1000
left = -20
right = 20
X = np.linspace(left, right, N)
T0 = np.zeros(N)
T1 = np.ones(N)

# --------------------------------------------------------------------------------
# T = 1
# --------------------------------------------------------------------------------
fig, ax = plt.subplots(figsize=(8,6))
ax.plot(
    X,
    __logistic_log_loss(X, T1),
    color='blue', linestyle='solid',
    label="logistic_log_loss(X, T=1)"
)
ax.plot(
    X,
    __logistic_log_loss_gradient(X, T1),
    color='navy', linestyle='dashed',
    label="Analytical gradient(T=1)"
)

# --------------------------------------------------------------------------------
# T = 0
# --------------------------------------------------------------------------------
ax.plot(
    X,
    __logistic_log_loss(X, T0),
    color='magenta', linestyle='solid',
    label="logistic_log_loss(X, T=0)"
)
ax.plot(
    X,
    __logistic_log_loss_gradient(X, T0),
    color='purple', linestyle='dashed',
    label="Analytical gradient(T=0)"
)

ax.set_xlabel("X")
ax.set_ylabel("dL/dX")
ax.set_title("Logistic log loss and gradient")
ax.legend()
ax.grid(True)
```

Numerical gradient

```python
def t_0_loss(X):
    return [
        # logistic_log_loss(P=sigmoid(x), T=0)
        -np.log(1.0 - __sigmoid(x)) for x in X
    ]


def t_1_loss(X):
    return [
        # logistic_log_loss(P=sigmoid(x), T=1)
        -np.log(__sigmoid(x)) for x in X
    ]


N = 1000
left = -1
right = 15

# Numerical gradient
# (f(x+k)-f(x-k)) / 2k
k = 1e-9
X = np.linspace(left, right, N)

fig, axes = plt.subplots(1, 2, figsize=(10,8))

# --------------------------------------------------------------------------------
# T = 0
# --------------------------------------------------------------------------------
axes[0].plot(
    X,
    ((np.array(t_0_loss(X + k)) - np.array(t_0_loss(X - k))) / (2*k)),
    color='red', linestyle='solid',
    label="Central numerical gradient(T=0)"
)
axes[0].plot(
    X[0:-1:20],
    ((np.array(t_0_loss(X + k)) - np.array(t_0_loss(X))) / k)[0:-1:20],
    color='black', linestyle='dotted', marker='x', markersize=4,
    label="Forward numerical gradient(T=0)"
)
axes[0].plot(
    X[0:-1:20],
    ((np.array(t_0_loss(X)) - np.array(t_0_loss(X - k))) / k)[0:-1:20],
    color='salmon', linestyle='dotted', marker='o', markersize=5,
    label="Backward numerical gradient(T=0)"
)
axes[0].set_xlabel("X")
axes[0].set_ylabel("dL/dX")
axes[0].set_title("T=0: -log(1-sigmoid(x))")
axes[0].legend()
axes[0].grid(True)

# --------------------------------------------------------------------------------
# T = 1
# --------------------------------------------------------------------------------
axes[1].plot(
    X,
    ((np.array(t_1_loss(X + k)) - np.array(t_1_loss(X - k))) / (2*k)),
    color='blue', linestyle='solid',
    label="Central numerical gradient(T=1)"
)
axes[1].plot(
    X[0:-1:20],
    ((np.array(t_1_loss(X + k)) - np.array(t_1_loss(X))) / k)[0:-1:20],
    color='cyan', linestyle='dashed', marker='x', markersize=5,
    label="Forward numerical gradient(T=1)"
)
axes[1].plot(
    X[0:-1:20],
    ((np.array(t_1_loss(X)) - np.array(t_1_loss(X - k))) / k)[0:-1:20],
    color='yellow', linestyle='dotted', marker='o', markersize=5,
    label="Backward numerical gradient(T=1)"
)
axes[1].set_xlabel("X")
axes[1].set_ylabel("dL/dX")
axes[1].set_title("T=1: -log(sigmoid(x))")
axes[1].legend()
axes[1].grid(True)
```
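The plots use a single k = 1e-9. A sketch that instead fixes x = 10 (an arbitrary example point) and sweeps k over several orders of magnitude for the same naive T=0 expression shows the trade-off directly; `t_0_loss_scalar` is a hypothetical scalar copy of `t_0_loss`:

```python
import numpy as np

def t_0_loss_scalar(x):
    # Same expression as t_0_loss, for one float: -log(1 - sigmoid(x))
    return -np.log(1.0 - 1.0 / (1.0 + np.exp(-x)))

x = 10.0
exact = 1.0 / (1.0 + np.exp(-x))   # analytical gradient sigmoid(x) - T, with T = 0

errors = {}
for k in [1e-1, 1e-2, 1e-3, 1e-4, 1e-5, 1e-6, 1e-7, 1e-8, 1e-9, 1e-10, 1e-11, 1e-12]:
    # Central difference (f(x+k) - f(x-k)) / 2k
    estimate = (t_0_loss_scalar(x + k) - t_0_loss_scalar(x - k)) / (2 * k)
    errors[k] = abs(estimate - exact)
    print(f"k={k:.0e}  |error|={errors[k]:.2e}")
```

For large k the truncation term dominates; once k is small enough that rounding takes over, the error grows again roughly like 1/k, which is the divergence visible in the T=0 panel.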