For simplicity we assume $a>0$ rather than only $a\geq0$.

By Bayes' formula, we may equivalently show that

\begin{align*}

\mathbb{P}(aX + bY > u \, | \, X + Y > t) \geq \mathbb{P}(aX + bY > u).

\end{align*}

Using the tower property in both the numerator and the denominator,

\begin{align*}

\mathbb{P}(aX + bY > u \, | \, X + Y > t)

&=

\dfrac{\mathbb{P}(aX + bY > u, X + Y > t)}{\mathbb{P}(X+Y>t)} \\

&=

\dfrac{\mathbb{E}[\mathbb{P}(aX + bY > u, X + Y > t \, | \, Y)]}{\mathbb{E}[\mathbb{P}(X + Y > t \, | \, Y)]}.

\end{align*}

Similarly,

\begin{align*}

\mathbb{P}(aX + bY > u) = \mathbb{E}[\mathbb{P}(aX + bY > u \, | \, Y)].

\end{align*}

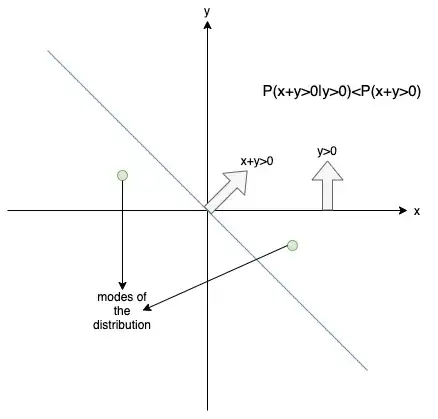

Since $X$ and $Y$ are independent and $a>0$,

\begin{align*}

\mathbb{P}(aX + bY > u, X + Y > t \, | \, Y)

&=

S_X(Y_1 \vee Y_2), \\

\mathbb{P}(X + Y > t \, | \, Y)

&= S_X(Y_2), \\

\mathbb{P}(aX + bY > u \, | \, Y)

&= S_X(Y_1),

\end{align*}

where $S_X(x) := 1 - \mathbb{P}(X \leq x)$ and $x_1 \vee x_2 = \max\{x_1,x_2\}$, while

\begin{align*}

Y_1 = \dfrac{u - bY}{a}, \hspace{5mm} Y_2 = t-Y.

\end{align*}

Note that $Y_1$ and $Y_2$ are functions of $Y$, and thus $S_X(Y_1 \vee Y_2)$, $S_X(Y_2)$, and $S_X(Y_1)$ are also random variables (more precisely, transformations of $Y$).
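
To spell out the omitted step behind the first identity (a sketch; the lowercase $y$, $y_1$, $y_2$ are notation introduced here for a fixed value of $Y$): fix $Y = y$ and write $y_1 = (u - by)/a$ and $y_2 = t - y$. Since $a>0$,

\begin{align*}
\{aX + by > u\} \cap \{X + y > t\}
= \{X > y_1\} \cap \{X > y_2\}
= \{X > y_1 \vee y_2\},
\end{align*}

and independence of $X$ and $Y$ gives $\mathbb{P}(aX + bY > u, X + Y > t \, | \, Y = y) = \mathbb{P}(X > y_1 \vee y_2) = S_X(y_1 \vee y_2)$. The other two identities follow in the same way, using only one of the two events.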

Collecting terms, we want to show that

\begin{align*}

\dfrac{\mathbb{E}[S_X(Y_1 \vee Y_2)]}{\mathbb{E}[S_X(Y_1)]\mathbb{E}[S_X(Y_2)]} \geq 1.

\end{align*}

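Since $S_X$ is non-increasing and takes values in $[0,1]$, we have $S_X(Y_1 \vee Y_2) = \min\{S_X(Y_1), S_X(Y_2)\} \geq S_X(Y_1)S_X(Y_2)$, and hence $\mathbb{E}[S_X(Y_1 \vee Y_2)] \geq \mathbb{E}[S_X(Y_1)S_X(Y_2)]$. So it suffices to show that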

\begin{align*}

\text{Cov}[S_X(Y_1),S_X(Y_2)] = \mathbb{E}[S_X(Y_1)S_X(Y_2)] - \mathbb{E}[S_X(Y_1)]\mathbb{E}[S_X(Y_2)] \geq 0.

\end{align*}

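Note that $S_X(Y_1)$ is a non-decreasing function of $Y$, since $Y_1$ is non-increasing as a function of $Y$ (recall $a>0$ and $b\geq0$) and $S_X$ is a survival function and thus non-increasing. Similarly, $S_X(Y_2)$ is a non-decreasing function of $Y$. Two non-decreasing functions $f$ and $g$ of the same random variable are non-negatively correlated (see e.g. this question): if $Y'$ is an independent copy of $Y$, then $(f(Y)-f(Y'))(g(Y)-g(Y')) \geq 0$, and taking expectations gives $2\,\text{Cov}[f(Y),g(Y)] \geq 0$. Consequently, $\text{Cov}[S_X(Y_1),S_X(Y_2)] \geq 0$ as desired.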
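
As a numerical sanity check (not part of the proof), the inequality can be tested by simulation. The sketch below assumes, purely for illustration, that $X$ and $Y$ are independent $\text{Exp}(1)$ variables; the function name and the parameter values $a=2$, $b=0.5$, $t=2$, $u=3$ are hypothetical choices, not taken from the question.

```python
import random


def conditional_vs_unconditional(a, b, t, u, n=200_000, seed=0):
    """Estimate P(aX+bY>u | X+Y>t) and P(aX+bY>u) by Monte Carlo.

    Illustrative assumption: X and Y are independent Exp(1) variables.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits_u = hits_t = hits_both = 0
    for _ in range(n):
        x = rng.expovariate(1.0)
        y = rng.expovariate(1.0)
        above_u = a * x + b * y > u
        above_t = x + y > t
        hits_u += above_u
        hits_t += above_t
        hits_both += above_u and above_t
    # Conditional estimate is joint count over conditioning count.
    return hits_both / hits_t, hits_u / n


cond, uncond = conditional_vs_unconditional(a=2.0, b=0.5, t=2.0, u=3.0)
print(f"conditional = {cond:.4f}, unconditional = {uncond:.4f}")
```

Under these assumptions the conditional estimate should come out clearly above the unconditional one, in line with the result. A simulation of this kind can only fail to contradict the claim for one particular distribution; it does not replace the argument above.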