The geometric mean is the exponential of the arithmetic mean of the log-transformed sample. Equivalently, its logarithm is the arithmetic mean of the logs:

$$\log\left( \biggl(\prod_{i=1}^n x_i\biggr)^{\!1/n}\right) = \frac{1}{n} \sum_{i=1}^n \log x_i,$$

for $x_1, \ldots, x_n > 0$.
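As a quick numerical check of this identity, here is a minimal Python sketch (the function names are illustrative, not from any particular library) that computes the geometric mean both ways and compares it with the standard library's `statistics.geometric_mean`, which is available in Python 3.8+:

```python
import math
import statistics

def geometric_mean_via_logs(xs):
    """exp of the arithmetic mean of the logs; requires a non-empty sample of positive values."""
    if not xs or any(x <= 0 for x in xs):
        raise ValueError("need a non-empty sample of positive values")
    return math.exp(sum(math.log(x) for x in xs) / len(xs))

def geometric_mean_via_product(xs):
    """Direct definition: the n-th root of the product (may overflow/underflow for long samples)."""
    return math.prod(xs) ** (1 / len(xs))

sample = [0.001, 5, 10, 15]
print(geometric_mean_via_logs(sample))     # approximately 0.930605
print(geometric_mean_via_product(sample))  # same value, up to rounding
print(statistics.geometric_mean(sample))   # same value, up to rounding
```

The log form is the one usually used in practice, since summing logs avoids the overflow or underflow that a long product can hit.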

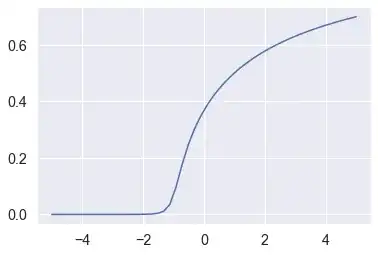

This gives some intuition for why the geometric mean is relatively insensitive to large (right) outliers: the logarithm increases very slowly for $x > 1$, so even a very large value contributes only modestly to the sum of logs.
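To illustrate, the sketch below uses a made-up sample $(1, 5, 10, 15)$ and a hypothetical right outlier obtained by multiplying the largest value by $1000$. Multiplying one value by $c$ adds only $\log c$ to the sum of logs, so the geometric mean is multiplied by $c^{1/n}$, while the arithmetic mean grows by $(c-1)x/n$:

```python
from statistics import fmean, geometric_mean

base = [1, 5, 10, 15]
outlier = [1, 5, 10, 15_000]  # hypothetical: largest value multiplied by 1000

for name, xs in [("base", base), ("right outlier", outlier)]:
    print(f"{name:>13}: AM = {fmean(xs):10.4f}  GM = {geometric_mean(xs):8.4f}")

# The GM grows by the factor 1000 ** (1/4) (about 5.62); the AM grows by a factor of about 484.
gm_ratio = geometric_mean(outlier) / geometric_mean(base)
am_ratio = fmean(outlier) / fmean(base)
print(gm_ratio, 1000 ** 0.25, am_ratio)
```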

But what about $0 < x < 1$? Doesn't the steepness of the logarithm on this interval suggest that the geometric mean is sensitive to very small positive values, i.e., left outliers? Indeed it is.

If your sample is $(0.001, 5, 10, 15)$, then the geometric mean is $0.930605$ and the arithmetic mean is $7.50025$. If you replace $0.001$ with $0.000001$, the arithmetic mean barely changes (it becomes $7.50000025$), but the geometric mean drops to $0.165488$. So the notion that the geometric mean is insensitive to outliers is not entirely precise: it dampens large values but is sensitive to values near zero.
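The numbers above can be reproduced with Python's `statistics` module. Replacing $0.001$ by $0.000001$ is an absolute change of less than $0.001$, but it divides the product by $1000$, so the geometric mean falls by a factor of $1000^{1/4} \approx 5.623$:

```python
from statistics import fmean, geometric_mean

original = [0.001, 5, 10, 15]
shrunk = [0.000001, 5, 10, 15]  # smallest value divided by 1000

print(fmean(original), geometric_mean(original))  # approximately 7.50025 and 0.930605
print(fmean(shrunk), geometric_mean(shrunk))      # approximately 7.50000025 and 0.165488

# The tiny absolute change is a change of log(1000) on the log scale,
# which shifts the mean of the 4 logs by log(1000) / 4.
print(geometric_mean(original) / geometric_mean(shrunk))  # approximately 1000 ** (1/4)
```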