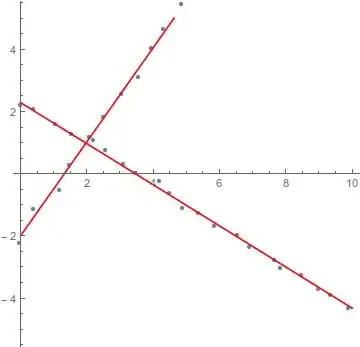

I have the convex hull of the corner of a building in 2D, and I am trying to fit two perpendicular lines to the points on that hull to get the orientation of the corner. I have a solution, but it is not useful when one of the lines passes through the origin. Please let me know if any of the derivations aren't clear; I have included only the key results for the sake of brevity, but can add more.

Say I have two sets of 2D points, $\mathcal{X}_1$ and $\mathcal{X}_2$ (each stored as a matrix with one point per row), belonging to the lines $l_1: \mathbf{x}^T\mathbf{n}=1$ and $l_2: \mathbf{x}^T\mathbf{Rn}/\alpha=1$, where $\mathbf{R}$ is a 90-degree rotation matrix. This means that the distance of $l_1$ from the origin is one over the magnitude of $\mathbf{n}$.
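As a brief check of that distance claim (a sketch using only the definitions above, and assuming $\alpha>0$): the point of $l_1$ closest to the origin lies along $\mathbf{n}$, so

$\mathbf{x}^\star = \mathbf{n}/||\mathbf{n}||_2^2, \qquad ||\mathbf{x}^\star||_2 = 1/||\mathbf{n}||_2$

Since a rotation preserves length, $||\mathbf{Rn}||_2 = ||\mathbf{n}||_2$, and the same argument puts $l_2$ at distance $\alpha/||\mathbf{n}||_2$ from the origin.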

Solution 1:

I can formulate a cost function:

$C = ||\mathcal{X}_1\mathbf{n}-\mathbf{1}_1||_2^2+||\mathcal{X}_2\mathbf{Rn}/\alpha - \mathbf{1}_2||_2^2$

where $\mathbf{1}_i$ is a vector of 1s with length equal to the number of points in set $i$.

I can solve this easily, giving:

$\mathbf{n} = \mathcal{X}_1^+\mathbf{1}_1$

$\alpha = ||\mathcal{X}_2\mathbf{Rn}||_1/N_2$

where $N_2$ is the number of points in set 2.

Firstly, I find it curious that the solution for $\mathbf{n}$ does not depend on any of the points in set 2. Secondly, as mentioned, the answer is difficult to compute for a line that passes through the origin, since such a line cannot be written as $\mathbf{x}^T\mathbf{n}=1$ with a finite $\mathbf{n}$.
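For concreteness, here is a minimal NumPy sketch of the two closed-form expressions above, run on synthetic noise-free data. The function name `fit_solution_1`, the counter-clockwise sense of $\mathbf{R}$, and the test corner (lines $x=2$ and $y=3$) are my own assumptions for illustration, not part of the real data set. The loop at the end shows how the magnitude of $\mathbf{n}$ grows as line 1 is moved towards the origin.

```python
import numpy as np

# 90-degree rotation matrix; the counter-clockwise sense is an assumption
R = np.array([[0.0, -1.0], [1.0, 0.0]])

def fit_solution_1(X1, X2):
    """Closed-form estimates quoted for Solution 1.

    X1, X2 are (N_i, 2) arrays with one point per row.
    """
    ones1 = np.ones(X1.shape[0])
    n = np.linalg.pinv(X1) @ ones1                   # n = X1^+ 1
    alpha = np.abs(X2 @ R @ n).sum() / X2.shape[0]   # alpha = ||X2 R n||_1 / N2
    return n, alpha

# Synthetic, noise-free corner: l1 is x = 2 and l2 is y = 3 (illustrative only)
t = np.linspace(0.0, 5.0, 6)
X1 = np.column_stack([np.full_like(t, 2.0), t])
X2 = np.column_stack([t, np.full_like(t, 3.0)])
n, alpha = fit_solution_1(X1, X2)
print("n =", n, "alpha =", alpha)

# Move l1 towards the origin and watch the norm of n grow
norms = {}
for offset in (1.0, 1e-3, 1e-6):
    X1_near = np.column_stack([np.full_like(t, offset), t])
    n_near, _ = fit_solution_1(X1_near, X2)
    norms[offset] = np.linalg.norm(n_near)
    print("offset", offset, "-> ||n|| =", norms[offset])
```

Note that `n` is computed from `X1` alone, which mirrors the first observation above.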

Solution 2:

Instead, if I formulate the lines as $l_1: \mathbf{x}^T\mathbf{n}=d_1$ and $l_2: \mathbf{x}^T\mathbf{Rn}=d_2$, but this time constrain $\mathbf{n}$ to be a unit vector, I can write my cost function using a Lagrange multiplier to enforce the constraint:

$C = ||\mathcal{X}_1\mathbf{n}-\mathbf{d}_1||_2^2+||\mathcal{X}_2\mathbf{Rn} - \mathbf{d}_2||_2^2 + \lambda(\mathbf{n}^T\mathbf{n}-1)$
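To make the cost function concrete, here is a small NumPy sketch that simply evaluates $C$ for given values of $\mathbf{n}$, $d_1$, $d_2$ and $\lambda$. The name `lagrangian_cost`, the sense of the rotation, and the synthetic corner are assumptions made for illustration; this only evaluates the expression and does not solve for anything.

```python
import numpy as np

# 90-degree rotation matrix; the counter-clockwise sense is an assumption
R = np.array([[0.0, -1.0], [1.0, 0.0]])

def lagrangian_cost(n, d1, d2, lam, X1, X2):
    """Evaluate C for scalar offsets d1, d2 and multiplier lam."""
    r1 = X1 @ n - d1          # residuals of set 1 against l1
    r2 = X2 @ (R @ n) - d2    # residuals of set 2 against l2
    return r1 @ r1 + r2 @ r2 + lam * (n @ n - 1.0)

# Synthetic, noise-free corner: l1 is x = 2 and l2 is y = 3 (illustrative only)
t = np.linspace(0.0, 5.0, 6)
X1 = np.column_stack([np.full_like(t, 2.0), t])
X2 = np.column_stack([t, np.full_like(t, 3.0)])

# Cost at the true unit normal and at a unit normal rotated by 0.1 rad
C_true = lagrangian_cost(np.array([1.0, 0.0]), 2.0, 3.0, 0.7, X1, X2)
C_off = lagrangian_cost(np.array([np.cos(0.1), np.sin(0.1)]), 2.0, 3.0, 0.7, X1, X2)
print(C_true, C_off)
```

Both trial normals have unit length, so the multiplier term contributes essentially nothing and the comparison reflects the residuals only.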

I am having trouble solving this formulation; perhaps I am missing a constraint? Anyway, if I differentiate with respect to $\mathbf{n}$ and set the result to 0, I get the following:

$(\mathcal{X}_1^T\mathcal{X}_1 + \mathbf{R}^T\mathcal{X}_2^T\mathcal{X}_2\mathbf{R} + \lambda\mathbf{I})\mathbf{n}=\mathcal{X}_1^T\mathbf{d}_1 + \mathbf{R}^T\mathcal{X}_2^T\mathbf{d}_2$

Differentiating with respect to $\mathbf{d}_1$ and setting to 0 (noting that $\mathbf{d}_i=d_i\mathbf{1}_i$, and hence that differentiating with respect to either the vector or the scalar version and setting to 0 is equivalent), I get $\mathbf{d}_1 = \mathcal{X}_1\mathbf{n}$ and, similarly, $\mathbf{d}_2 = \mathcal{X}_2\mathbf{Rn}$. First off, this seems odd, as it is an exact solution that assumes no noise. Moreover, when I substitute these into the equation above, everything cancels to give me $\lambda\mathbf{n}=0$, and I have no idea where to go from here.
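To spell out the cancellation (writing the stationarity condition in $\mathbf{n}$ with all transposes explicit, and substituting $\mathbf{d}_1 = \mathcal{X}_1\mathbf{n}$ and $\mathbf{d}_2 = \mathcal{X}_2\mathbf{Rn}$ on the right-hand side):

$(\mathcal{X}_1^T\mathcal{X}_1 + \mathbf{R}^T\mathcal{X}_2^T\mathcal{X}_2\mathbf{R} + \lambda\mathbf{I})\mathbf{n} = \mathcal{X}_1^T\mathcal{X}_1\mathbf{n} + \mathbf{R}^T\mathcal{X}_2^T\mathcal{X}_2\mathbf{Rn}$

The first two terms on the left match the right-hand side exactly, leaving

$\lambda\mathbf{n} = \mathbf{0}$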

Any help in how to get Solution 2 working with Lagrange multipliers would be grand. I have tried formulating the problem in homogeneous coordinates and am in the process of seeing whether that would work, but I am confused as to why the above gives no clear solution (or where my errors might be).

EDIT:

As requested in the comments here is a link to 4 different sets of sample data

For context, the purpose of this task is that I have some 3D data of the corner of a building and I am trying to work out the corner planes to align it with a coordinate axis. I have aligned the ground plane with a principal axis and then collapsed the points into 2D (effectively getting a plan view). Finally, to get the data presented I kept taking convex hulls until I had at least 50 points with a certain separation.

The data is unlabelled, and the process assigns points to a line based on proximity, although arguably a better classification scheme is likely possible since I know that it will be a corner.
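As a rough illustration of what assigning by proximity can look like, here is a hedged NumPy sketch. It assumes current estimates of a unit normal `n` and offsets `d1`, `d2` are available, and labels each point by the smaller perpendicular distance. The function name `assign_by_proximity` and the toy points are hypothetical, and this is not necessarily the exact procedure used on the real data.

```python
import numpy as np

# 90-degree rotation matrix; the counter-clockwise sense is an assumption
R = np.array([[0.0, -1.0], [1.0, 0.0]])

def assign_by_proximity(points, n, d1, d2):
    """Return a boolean mask that is True where a point is closer to l2.

    points is an (N, 2) array; n is assumed to be a unit vector, so the
    absolute residuals are perpendicular distances.
    """
    dist1 = np.abs(points @ n - d1)          # distance to l1: x^T n = d1
    dist2 = np.abs(points @ (R @ n) - d2)    # distance to l2: x^T R n = d2
    return dist2 < dist1

# Toy points near the corner of x = 2 and y = 3 (illustrative only)
points = np.array([[2.0, 0.0], [2.0, 1.0], [0.0, 3.0], [1.0, 3.0]])
on_line_2 = assign_by_proximity(points, np.array([1.0, 0.0]), 2.0, 3.0)
X1, X2 = points[~on_line_2], points[on_line_2]
```

Points near the corner itself are roughly equidistant from both lines, so their labels are the least reliable under this rule.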

EDIT 2:

To clarify, my question isn't how to find a solution to the problem; I already have one. My question is why Solution 2, using Lagrange multipliers, doesn't lead to a straightforward solution despite the almost trivial difference in formulation between Solution 1 and Solution 2.