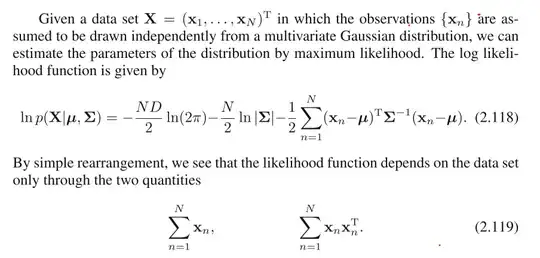

I've shown something similar for the 1-dimensional case: $\sum_n x_n$ is the sufficient statistic for the Gaussian mean, and $\sum_n x_n$ and $\sum_n x_n^2$ are the sufficient statistics for the Gaussian variance.

However, I'm stuck on the multivariate example. How do we show that the log-likelihood depends on the data only through $\sum_n x_n$ and $\sum_n x_n^T x_n$?

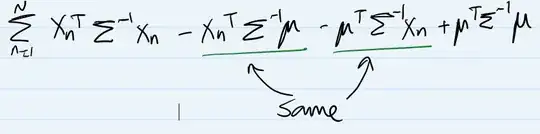

I suppose we only need to look at the terms in the exponent, and I rewrote the exponent as:

How can I bring $x_n^T$ and $x_n$ together when the inverse covariance $\Sigma^{-1}$ sits between them?
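
For reference, one standard way to do this is the trace trick. This is only a sketch under assumed notation, since the images are not reproduced here: $N$ data points $x_n \in \mathbb{R}^D$, mean $\mu$, and a symmetric covariance $\Sigma$ (written $\Sigma$, distinct from the summation sign $\sum_n$). Expanding the quadratic form in the exponent gives

$$
-\frac{1}{2}\sum_{n=1}^{N}(x_n-\mu)^T\Sigma^{-1}(x_n-\mu)
= -\frac{1}{2}\sum_{n=1}^{N} x_n^T\Sigma^{-1}x_n
+ \mu^T\Sigma^{-1}\sum_{n=1}^{N} x_n
- \frac{N}{2}\,\mu^T\Sigma^{-1}\mu ,
$$

where the two cross terms were merged using the symmetry of $\Sigma^{-1}$. Each $x_n^T\Sigma^{-1}x_n$ is a scalar, so it equals its own trace, and the trace is invariant under cyclic permutation:

$$
\sum_{n=1}^{N} x_n^T\Sigma^{-1}x_n
= \sum_{n=1}^{N}\operatorname{tr}\!\left(x_n^T\Sigma^{-1}x_n\right)
= \sum_{n=1}^{N}\operatorname{tr}\!\left(\Sigma^{-1}x_n x_n^T\right)
= \operatorname{tr}\!\left(\Sigma^{-1}\sum_{n=1}^{N} x_n x_n^T\right).
$$

Under these assumptions the data enter only through $\sum_n x_n$ and $\sum_n x_n x_n^T$. Note that the second statistic comes out as the outer product $x_n x_n^T$ (a $D \times D$ matrix), not the scalar inner product $x_n^T x_n$; for a general $\Sigma$ the scalar alone would not be enough.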