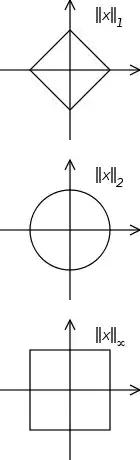

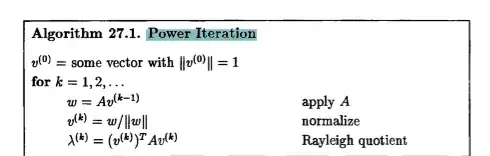

Why we use the 2-norm all over the place instead of the 1-norm although both are equivalent and the 1-norm is more effieciently computable? I am currently implementing an algorithm that normalizes a vector in each step (using 2-norm). So i could also just use the 1-norm instead? More details: power iteration for the calculation of the dominat eigenvalue of a matrix:

x:=random vector of length numrows(A) x=x/||x||

while l changes (e.g. abs(l-l_last)<tolerance)

x=Av

l=||x||

x=x/||x||