Let $(X_1, X_2)$ be uniform over the unit Sierpinski triangle (represented in Cartesian coordinates). What is its covariance matrix?

This is a question I saw in a job ad. I would love some leads on solving it.

For this answer, I will interpret the "unit Sierpinski triangle $S$" as being the one with vertices $(0,0)$, $(1,0)$, $(0,1)$. If you want to use the triangle with vertices $(0,0)$, $(1,0)$, $(\frac{1}{2}, \frac{\sqrt{3}}{2})$ instead (i.e. beginning with an equilateral triangle) then a very similar method should work.

I will also interpret the "uniformity" condition on the probability measure $\mu$ on $S$ as meaning that the contraction of $S$ onto each of its level-1 subtriangles $S_{00}$, $S_{01}$, and $S_{10}$ preserves measure up to a factor of $\frac{1}{3}$: if $T$ is one of these contractions and $A \subseteq S$ is measurable, then $\mu(T(A)) = \frac{1}{3} \mu(A)$.

Now, this will imply that for any measurable and $L^1$ function $f : S \to \mathbb{R}$, we have $$ \int_{S_{00}} f(x,y)\,d\mu = \frac{1}{3} \int_S f \left(\frac{1}{2}x, \frac{1}{2}y \right) d\mu; \\ \int_{S_{01}} f(x,y)\,d\mu = \frac{1}{3} \int_S f \left(\frac{1}{2} x, \frac{1}{2}y + \frac{1}{2} \right) d\mu; \\ \int_{S_{10}} f(x,y)\,d\mu = \frac{1}{3} \int_S f \left(\frac{1}{2} x + \frac{1}{2}, \frac{1}{2} y \right) d\mu.$$ The reason: it must hold for the characteristic function of any measurable set by the uniformity condition; then, by linearity, it must hold for simple functions; then, the definitions of the Lebesgue integral for nonnegative functions and for $L^1$ functions extend the equations to all $L^1$ functions.

We can also show that the function $x : S \to \mathbb{R}$ must be measurable: for any dyadic rational $q$, $x^{-1}([q, \infty)) = \{ (x, y) \in S \mid x \ge q \}$ is a finite union of subtriangles of $S$ and therefore measurable. Moreover, the intervals $[q, \infty)$ with $q$ a dyadic rational generate the Borel $\sigma$-algebra on $\mathbb{R}$. Similarly, the function $y : S \to \mathbb{R}$ must be measurable. And then, since both $x$ and $y$ are bounded functions on $S$ and $\mu$ is a finite measure, it follows that they are also in $L^1(\mu)$.

Using this, we can now calculate: $$E(x) = \int_S x\,d\mu = \int_{S_{00}} x\,d\mu + \int_{S_{01}} x\,d\mu + \int_{S_{10}} x\,d\mu = \\ \frac{1}{3} \int_S \frac{1}{2}x\,d\mu + \frac{1}{3} \int_S \frac{1}{2}x\,d\mu + \frac{1}{3} \int_S \left( \frac{1}{2}x + \frac{1}{2} \right) d\mu = \\ \frac{1}{2} \int_S x\,d\mu + \frac{1}{6} = \frac{1}{2} E(x) + \frac{1}{6}.$$ It follows that $E(x) = \frac{1}{3}$. Very similarly, $E(y) = \frac{1}{3}$.

The calculation of $E(x^2), E(xy), E(y^2)$ will be very similar (using the previous results for $E(x)$ and $E(y)$ in intermediate steps).
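
As a sketch of how those computations might go (under the same assumptions: the right-angled $S$ with vertices $(0,0)$, $(1,0)$, $(0,1)$ and the three integral identities above; the arithmetic is worth double-checking), split each integral over $S_{00}$, $S_{01}$, $S_{10}$ exactly as for $E(x)$: $$E(x^2) = \frac{1}{3} \int_S \frac{x^2}{4}\,d\mu + \frac{1}{3} \int_S \frac{x^2}{4}\,d\mu + \frac{1}{3} \int_S \left( \frac{1}{2}x + \frac{1}{2} \right)^2 d\mu = \\ \frac{1}{4} E(x^2) + \frac{1}{6} E(x) + \frac{1}{12} = \frac{1}{4} E(x^2) + \frac{5}{36},$$ so $E(x^2) = \frac{5}{27}$, and by symmetry $E(y^2) = \frac{5}{27}$. Likewise, $$E(xy) = \frac{1}{3} \int_S \frac{xy}{4}\,d\mu + \frac{1}{3} \int_S \frac{x(y+1)}{4}\,d\mu + \frac{1}{3} \int_S \frac{(x+1)y}{4}\,d\mu = \\ \frac{1}{4} E(xy) + \frac{1}{12} \left( E(x) + E(y) \right) = \frac{1}{4} E(xy) + \frac{1}{18},$$ so $E(xy) = \frac{2}{27}$. Subtracting the products of the means then gives $$\operatorname{Var}(x) = \operatorname{Var}(y) = \frac{5}{27} - \frac{1}{9} = \frac{2}{27}, \qquad \operatorname{Cov}(x,y) = \frac{2}{27} - \frac{1}{9} = -\frac{1}{27},$$ that is, a covariance matrix of $\frac{1}{27} \begin{pmatrix} 2 & -1 \\ -1 & 2 \end{pmatrix}$ for this choice of triangle.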

As mentioned by @MarkMcClure in the comments (and the wonderful linked answer), both the numerical and the exact answers for points sampled from the Sierpinski triangle seem to be $(1/18) \mathbf{I}_2$ when you consider the points as N-dimensional samples $X=(x_1, x_2, \ldots, x_N)^T$. If, however, you consider the transpose $Y = X^T$, you get the answer below. While not correct, it was fun to work through, and I'll leave it up as long as people still think it has some value.
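
One way to cross-check the $(1/18) \mathbf{I}_2$ value, offered as a sketch rather than a proof: assume the uniform measure is the self-similar one, so that a uniform point $X$ has the same distribution as $(X' + V)/2$, where $X'$ is an independent copy of $X$ and $V$ is a uniformly chosen vertex. Taking covariances gives $\operatorname{Cov}(X) = \frac{1}{4}\operatorname{Cov}(X) + \frac{1}{4}\operatorname{Cov}(V)$, i.e. $\operatorname{Cov}(X) = \frac{1}{3}\operatorname{Cov}(V)$. The function name `sierpinski_covariance` below is just an illustrative label.

```python
import numpy as np

def sierpinski_covariance(vertices):
    """Covariance of the self-similar measure, assuming Cov(X) = Cov(V) / 3.

    `vertices` holds the three corner points; V is uniform on them.
    """
    vertices = np.asarray(vertices, dtype=float)
    # bias=True gives the population covariance of the three equally likely vertices.
    vertex_cov = np.cov(vertices, rowvar=False, bias=True)
    return vertex_cov / 3.0

equilateral = [(0.0, 0.0), (1.0, 0.0), (0.5, np.sqrt(3) / 2)]
right_angled = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

cov_equilateral = sierpinski_covariance(equilateral)
cov_right = sierpinski_covariance(right_angled)
print(cov_equilateral)
print(cov_right)
```

The equilateral case is the one the $(1/18) \mathbf{I}_2$ claim refers to; the right-angled triangle is included only for comparison, since it is the other common reading of "unit Sierpinski triangle".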

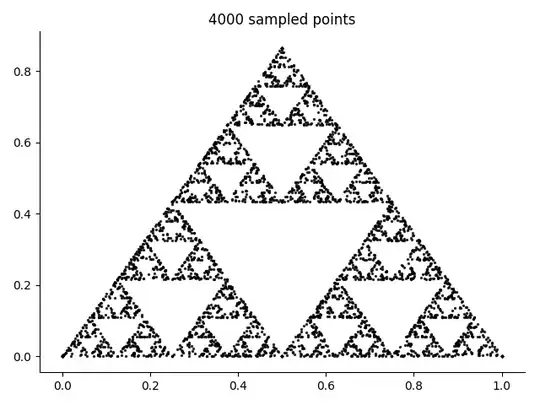

Not directly an answer, but there are simple direct insights you can make into the problem by sampling. First, sample a few thousand points using the chaos game.
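
A minimal sketch of that sampling step, assuming the equilateral triangle with vertices $(0,0)$, $(1,0)$, $(\frac{1}{2}, \frac{\sqrt{3}}{2})$ and the usual chaos-game rule (jump halfway toward a randomly chosen vertex). The names `chaos_game`, `burn_in`, and `seed` are illustrative choices, not taken from the original post:

```python
import numpy as np

def chaos_game(n_points, vertices, burn_in=100, seed=0):
    """Sample points near the Sierpinski triangle with the chaos game."""
    rng = np.random.default_rng(seed)
    vertices = np.asarray(vertices, dtype=float)
    # Pre-draw which vertex to jump toward at every step.
    choices = rng.integers(0, len(vertices), size=n_points + burn_in)
    point = vertices.mean(axis=0)  # any starting point inside the triangle works
    samples = np.empty((n_points, 2))
    for i, k in enumerate(choices):
        point = (point + vertices[k]) / 2.0  # move halfway to the chosen vertex
        if i >= burn_in:  # discard the first few points, which are still off the attractor
            samples[i - burn_in] = point
    return samples

EQUILATERAL = [(0.0, 0.0), (1.0, 0.0), (0.5, np.sqrt(3) / 2)]
samples = chaos_game(100_000, EQUILATERAL)

# 2x2 covariance of the two coordinates (rows are samples, columns are X_1, X_2).
cov = np.cov(samples, rowvar=False)
print(cov)
```

Note that `np.cov(samples, rowvar=False)` is the $2 \times 2$ covariance of the coordinates, which is what the original question asks for; passing the transposed layout instead produces an $N \times N$ matrix.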

Compute the covariance matrix $C = \textrm{Cov}(X_1,X_2)$ and then the eigenvectors, $Cv = \lambda v$. You'll quickly find that the largest eigenvalue dominates all of the rest. In this sample $\lambda_1 / \lambda_2 \approx 10^{15}$. Sort $C$ by this eigenvector $v_1$ and you get a beautiful and smooth matrix:

For the final visual, color the points by this $v_1$

All of this suggests that the answer to the original question has a nice closed form.