Let $X$ and $Y$ be bounded operators on a real or complex Hilbert space $\mathcal{H}$, and let $f(\alpha) = \|X - \alpha Y\|_2$, where $\alpha$ is real and $\|A\|_2 = \sigma_{\mathsf{max}}(A)$ is the operator norm induced by the $\ell^2$ norm. What is $\frac{df}{d\alpha}$?
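For experimenting numerically, here is a minimal sketch in Python with NumPy. NumPy is an assumption, since the question does not say what tool produced the plots. It evaluates $f$ with `np.linalg.norm(A, 2)`, which returns the largest singular value of a 2-D array, and estimates $df/d\alpha$ by a central finite difference. The helper names `f` and `df_central`, the step `h`, and the random seed are hypothetical choices. The estimate is only meaningful where $f$ is differentiable.

```python
import numpy as np

def f(alpha, X, Y):
    """f(alpha) = ||X - alpha*Y||_2, the largest singular value of X - alpha*Y."""
    return np.linalg.norm(X - alpha * Y, 2)

def df_central(alpha, X, Y, h=1e-6):
    """Central finite-difference estimate of df/dalpha.

    Only meaningful where f is differentiable; at a kink it returns
    (approximately) the average of the two one-sided slopes.
    """
    return (f(alpha + h, X, Y) - f(alpha - h, X, Y)) / (2 * h)

# Hypothetical test matrices in the spirit of plot 2 (seed chosen arbitrarily).
rng = np.random.default_rng(0)
n = 50
X = rng.standard_normal((n, n)) - np.eye(n)
Y = rng.standard_normal((n, n))

print(f(0.3, X, Y), df_central(0.3, X, Y))
```

The triangle inequality gives $|f(\alpha) - f(\beta)| \le |\alpha - \beta|\,\|Y\|_2$, so any such estimate is bounded in magnitude by $\|Y\|_2$.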

Even if $f$ is not differentiable everywhere, it is convex, so a subgradient will suffice.
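One way to obtain a subgradient uses the identity $\sigma_{\max}(A) = \max_{\|u\|=\|v\|=1} \operatorname{Re}(u^* A v)$. If $(u, v)$ is a top singular pair of $X - \alpha Y$, then for every real $\beta$ we have $f(\beta) \ge \operatorname{Re}\big(u^*(X - \beta Y)v\big) = f(\alpha) - (\beta - \alpha)\operatorname{Re}(u^* Y v)$. Hence $g = -\operatorname{Re}(u^* Y v)$ is a subgradient at $\alpha$. When the largest singular value is simple, $g$ is the derivative, which is a standard perturbation fact. The sketch below assumes finite-dimensional matrices and NumPy. It checks the subgradient inequality on a grid and compares $g$ with a finite difference. The helper name `subgradient`, the seed, the grid, and the plot-2-like test matrices are hypothetical.

```python
import numpy as np

def f(alpha, X, Y):
    """f(alpha) = ||X - alpha*Y||_2 (largest singular value)."""
    return np.linalg.norm(X - alpha * Y, 2)

def subgradient(alpha, X, Y):
    """Return g = -Re(u^* Y v) for a top singular pair (u, v) of X - alpha*Y.

    g is always a subgradient of f at alpha; it equals df/dalpha
    whenever the largest singular value is simple.
    """
    U, s, Vh = np.linalg.svd(X - alpha * Y)
    u = U[:, 0]            # top left singular vector
    v = Vh[0, :].conj()    # top right singular vector (first row of V^*, conjugated)
    return -np.real(u.conj() @ Y @ v)

# Hypothetical test matrices (seed chosen arbitrarily).
rng = np.random.default_rng(1)
n = 50
X = rng.standard_normal((n, n)) - np.eye(n)
Y = rng.standard_normal((n, n))

alpha = 0.3
g = subgradient(alpha, X, Y)
h = 1e-6
fd = (f(alpha + h, X, Y) - f(alpha - h, X, Y)) / (2 * h)
print(g, fd)

# Subgradient inequality f(beta) >= f(alpha) + g*(beta - alpha) on a grid.
betas = np.linspace(-3.0, 3.0, 61)
gaps = [f(b, X, Y) - f(alpha, X, Y) - g * (b - alpha) for b in betas]
print(min(gaps))
```

The same `subgradient` works for complex matrices because it conjugates explicitly. For random matrices the top singular value is almost surely simple, so `g` and `fd` should agree closely.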

If it helps, we may assume $X = I$ and $Y$ positive definite, but I'd rather see a more general result. Working with $f^2$ instead of $f$ is also fine if that is easier.
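If $f^2$ is more convenient, the following standard facts connect it to $f$. They are general background and do not come from the question. Because $\alpha$ is real, $f^2$ is the largest eigenvalue of a self-adjoint operator that is quadratic in $\alpha$. In infinite dimensions, read $\lambda_{\max}$ as the supremum of the spectrum. The map $t \mapsto t^2$ is convex, nondecreasing and differentiable on $[0, \infty)$, and $f \ge 0$ is convex. So the chain rule carries over to subdifferentials:

$$
\begin{aligned}
f(\alpha)^2 &= \lambda_{\max}\!\big((X-\alpha Y)^*(X-\alpha Y)\big)
 = \lambda_{\max}\!\big(X^*X - \alpha\,(X^*Y + Y^*X) + \alpha^2\,Y^*Y\big),\\
\partial\big(f^2\big)(\alpha) &= 2 f(\alpha)\,\partial f(\alpha),
\qquad\text{so}\qquad \frac{d}{d\alpha} f(\alpha)^2 = 2 f(\alpha)\, f'(\alpha) \text{ wherever } f \text{ is differentiable.}
\end{aligned}
$$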

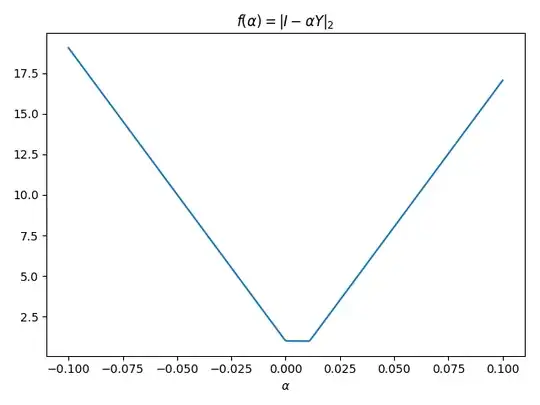

Plots of $f$: I ran two simple numerical examples that might be enlightening. In plot 1, $X = I\in M_{50}(\mathbb{R})$ and $Y = Z^\mathsf{T}Z + I$, where $Z$ has i.i.d. standard normal entries $Z_{ij}\sim\mathcal{N}(0,1)$. As we can see, $f$ appears to be piecewise linear.
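The piecewise-linear shape in this setting can be explained exactly. Let $Y$ be symmetric positive definite with eigenvalues $\lambda_{\min} < \dots < \lambda_{\max}$. Then $I - \alpha Y$ is symmetric with eigenvalues $1 - \alpha\lambda_i$. Since $\lambda \mapsto |1 - \alpha\lambda|$ is convex, $f(\alpha) = \max_i |1 - \alpha\lambda_i| = \max\big(|1 - \alpha\lambda_{\min}|,\, |1 - \alpha\lambda_{\max}|\big)$. Set $\alpha^* = 2/(\lambda_{\min} + \lambda_{\max})$. The slope of $f$ is $-\lambda_{\max}$ for $\alpha < 0$, $-\lambda_{\min}$ on $(0, \alpha^*)$, and $\lambda_{\max}$ for $\alpha > \alpha^*$. The kinks sit at $0$ and $\alpha^*$. The NumPy sketch below checks this closed form against a direct computation. The seed and the $\alpha$ grid are assumptions, since the data behind plot 1 are not given.

```python
import numpy as np

# Hypothetical reconstruction of the plot-1 setting (seed and alpha grid are assumptions).
rng = np.random.default_rng(0)
n = 50
Z = rng.standard_normal((n, n))
Y = Z.T @ Z + np.eye(n)              # symmetric positive definite, all eigenvalues >= 1
lam = np.linalg.eigvalsh(Y)          # eigenvalues in ascending order
lam_min, lam_max = lam[0], lam[-1]

def f(alpha):
    """||I - alpha*Y||_2 computed numerically."""
    return np.linalg.norm(np.eye(n) - alpha * Y, 2)

def f_closed(alpha):
    """Closed form max_i |1 - alpha*lam_i|, attained at lam_min or lam_max."""
    return max(abs(1 - alpha * lam_min), abs(1 - alpha * lam_max))

alphas = np.linspace(-0.05, 0.05, 201)
max_err = max(abs(f(a) - f_closed(a)) for a in alphas)

# Kinks: alpha = 0 and alpha_star, where 1 - a*lam_min = a*lam_max - 1.
alpha_star = 2 / (lam_min + lam_max)
print(max_err, alpha_star, f(alpha_star))
```

In this setting the subdifferential at $\alpha = 0$ is $[-\lambda_{\max}, -\lambda_{\min}]$, and at $\alpha^*$ it is $[-\lambda_{\min}, \lambda_{\max}]$. The second interval contains $0$, so $\alpha^*$ is the minimizer, with $f(\alpha^*) = (\lambda_{\max} - \lambda_{\min})/(\lambda_{\max} + \lambda_{\min})$.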

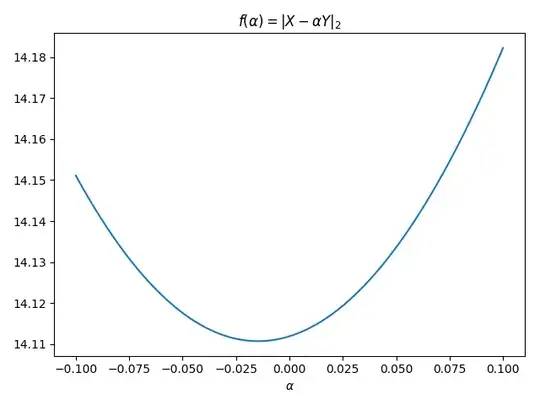

In plot 2, $X = G - I \in M_{50}(\mathbb{R})$ and $Y \in M_{50}(\mathbb{R})$, where $G$ and $Y$ have i.i.d. entries $G_{ij}, Y_{ij}\sim\mathcal{N}(0,1)$. Note that neither $X$ nor $Y$ is symmetric. This example looks differentiable and, over the plotted range, practically quadratic.
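The following NumPy sketch targets the nonsymmetric setting of plot 2. The seed, the grid $[-2, 2]$ and the large value `big` are assumptions, since the plotted range is not stated. It checks two properties that hold for any $X$ and $Y$. First, $f$ is convex, so second differences on a uniform grid are nonnegative up to rounding. Second, $\big|f(\alpha)/|\alpha| - \|Y\|_2\big| \le \|X\|_2/|\alpha|$, so $f$ grows linearly for large $|\alpha|$. Any quadratic appearance can therefore only hold over a bounded range of $\alpha$.

```python
import numpy as np

# Hypothetical reconstruction of the plot-2 setting (seed and grid are assumptions).
rng = np.random.default_rng(0)
n = 50
X = rng.standard_normal((n, n)) - np.eye(n)   # X = G - I, G with i.i.d. N(0,1) entries
Y = rng.standard_normal((n, n))               # neither X nor Y is symmetric

alphas = np.linspace(-2.0, 2.0, 401)
vals = np.array([np.linalg.norm(X - a * Y, 2) for a in alphas])

# f is a norm composed with an affine map, hence convex: on a uniform grid the
# second differences f(a-h) - 2 f(a) + f(a+h) should be >= 0 up to rounding.
second_diff = vals[:-2] - 2 * vals[1:-1] + vals[2:]

# Far from the origin f(alpha)/|alpha| approaches ||Y||_2, i.e. f grows linearly.
big = 1e4
ratio = np.linalg.norm(X - big * Y, 2) / big
print(second_diff.min(), ratio, np.linalg.norm(Y, 2))
```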