I tested the Rabin-Miller probable-prime test with a single round (one random base) and found that the number of false positives depends on the size of the number being tested. The error rate drops to a (conjectured) negligible probability for very large numbers.

A number is chosen at random to be tested. If the number is $0$, $1$, $2$, $3$ or even, the correct result is returned directly. If the number is odd and $>3$, the Rabin-Miller test specified below is applied. This test uses a random base $a$. It never rejects a prime, but it occasionally reports a composite as prime. Each result is compared with the true primality of the number, and every disagreement is counted as an error. To establish the true primality I used the method described at OEIS A014233, where exact primality testing by multiple Rabin-Miller tests with fixed bases is described, together with compressed ("squeezed") prime tables.
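The experiment described above can be sketched as follows. This is a minimal reconstruction, not the code behind the numbers below: it assumes that the exact reference is a strong probable-prime test to the first 13 prime bases $2,\dots,41$, which by OEIS A014233 is exact for all $n < 3317044064679887385961981$ (so it covers $b \le 81$). It does not use prime tables. The names `strong_probable_prime`, `is_prime_exact`, `single_round_test` and `count_errors` are hypothetical.

```python
import random

# Hypothetical reference test: per OEIS A014233, a strong probable-prime test
# to all of these bases is exact for every n < EXACT_LIMIT.
BASES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41)
EXACT_LIMIT = 3317044064679887385961981


def strong_probable_prime(n: int, a: int) -> bool:
    """One Rabin-Miller round for odd n > 3 and base 1 < a < n."""
    s, r = n - 1, 0
    while s % 2 == 0:            # write n - 1 = 2^r * s with s odd
        s //= 2
        r += 1
    x = pow(a, s, n)
    if x == 1 or x == n - 1:     # a^s = 1, or a^(2^0 * s) = -1 (mod n)
        return True
    for _ in range(r - 1):       # check a^(2^j * s) for j = 1, ..., r - 1
        x = x * x % n
        if x == n - 1:
            return True
    return False


def is_prime_exact(n: int) -> bool:
    """Exact primality for n < EXACT_LIMIT using the fixed A014233 bases."""
    if n < 2:
        return False
    for p in BASES:
        if n % p == 0:
            return n == p
    if n >= EXACT_LIMIT:
        raise ValueError("n is outside the range covered by these bases")
    return all(strong_probable_prime(n, a) for a in BASES)


def single_round_test(n: int, rng: random.Random) -> bool:
    """Trivial cases answered directly; odd n > 3 gets one random base."""
    if n < 4:
        return n in (2, 3)
    if n % 2 == 0:
        return False
    a = rng.randrange(2, n)      # 1 < a < n, as in the question
    return strong_probable_prime(n, a)


def count_errors(b: int, trials: int, seed: int = 1) -> int:
    """Count disagreements for pseudorandom n < 2^b (valid for b <= 81)."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        n = rng.randrange(0, 2 ** b)
        if single_round_test(n, rng) != is_prime_exact(n):
            errors += 1
    return errors
```

For example, `count_errors(16, 100_000)` gives a small-scale version of one row of the table; with `trials=10_000_000` it mirrors the setup of the question, although the counts will differ from run to run.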

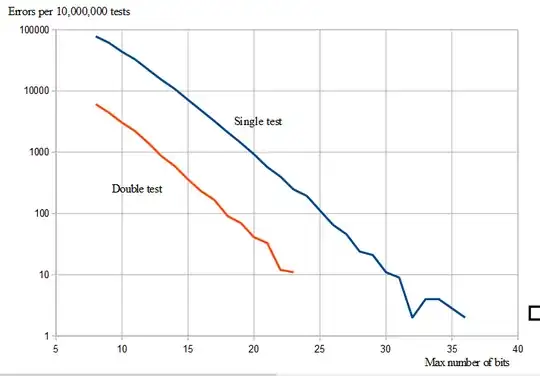

For each bit size $b$ I ran the test $10{,}000{,}000$ times on pseudorandom numbers less than $2^b$ and obtained the following numbers of errors $e$:

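| $b$ | errors $e$ | $f(b)$ |
|---:|---:|---:|
| 8 | 78144 | 78125 |
| 9 | 61425 | 55243 |
| 10 | 43642 | 39063 |
| 11 | 32688 | 27621 |
| 12 | 22201 | 19531 |
| 13 | 15190 | 13811 |
| 14 | 10789 | 9766 |
| 15 | 7176 | 6905 |
| 16 | 4814 | 4883 |
| 17 | 3227 | 3453 |
| 18 | 2122 | 2441 |
| 19 | 1422 | 1726 |
| 20 | 926 | 1221 |
| 21 | 574 | 863 |
| 22 | 400 | 610 |
| 23 | 247 | 432 |
| 24 | 194 | 305 |
| 25 | 112 | 216 |
| 26 | 65 | 153 |
| 27 | 46 | 108 |
| 28 | 24 | 76 |
| 29 | 21 | 54 |
| 30 | 11 | 38 |
| 31 | 9 | 27 |
| 32 | 2 | 19 |
| 33 | 4 | 13 |
| 34 | 4 | 10 |
| 35 | 0 | 7 |
| 36 | 2 | 5 |
| 37 | 0 | 3 |
| 38 | 0 | 2 |
| 39 | 0 | 2 |
| 40 | 0 | 1 |
| 41 | 0 | 1 |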

The $f$ column is the function $f(b)=1250000\cdot 2^{-\frac{b}{2}}$, rounded to the nearest integer.
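To reproduce the $f$ column and compare it with the observed $e$, here is a small sketch. The data are copied from the table; the names `OBSERVED_E`, `BITS`, `f` and `f_rounded` are only illustrative. Rounding is half up because $f(10)=39062.5$ appears as $39063$, whereas Python's built-in `round` rounds half to even and would give $39062$.

```python
import math

# Observed error counts e for b = 8, 9, ..., 41 (copied from the table).
OBSERVED_E = [78144, 61425, 43642, 32688, 22201, 15190, 10789, 7176, 4814,
              3227, 2122, 1422, 926, 574, 400, 247, 194, 112, 65, 46, 24,
              21, 11, 9, 2, 4, 4, 0, 2, 0, 0, 0, 0, 0]
BITS = range(8, 42)


def f(b: float) -> float:
    """Conjectured error count in 10,000,000 runs: 1250000 * 2^(-b/2)."""
    return 1250000 * 2 ** (-b / 2)


def f_rounded(b: int) -> int:
    """Round half up, so f(10) = 39062.5 becomes 39063 as in the table."""
    return math.floor(f(b) + 0.5)


if __name__ == "__main__":
    # Print each row with the ratio e / f to see where e drops below f.
    for b, e in zip(BITS, OBSERVED_E):
        print(f"b={b:2d}  e={e:6d}  f={f_rounded(b):6d}  e/f={e / f(b):.3f}")
```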

The algorithm used is:

To test an odd number $n$, write $n=2^r\cdot s+1$, where $s$ is odd.

Given a random number $a$ such that $1<a<n$, if

1. $a^s\equiv 1(\text{mod}\; n)$ or

2. there exists an integer $j$ with $0\le j<r$ such that $a^{2^j\cdot s}\equiv -1(\text{mod}\; n)$

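then $n$ is declared a probable prime (if $n$ is in fact composite, it is a strong pseudoprime to base $a$). If neither condition holds, $n$ is certainly composite.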
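The two conditions above can be written out directly as a sketch. The function names `decompose` and `is_strong_probable_prime` are hypothetical, and the code assumes an odd $n>3$ and a base $1<a<n$, as in the description.

```python
def decompose(n: int) -> tuple[int, int]:
    """Write an odd n > 1 as n = 2^r * s + 1 with s odd; return (r, s)."""
    r, s = 0, n - 1
    while s % 2 == 0:
        s //= 2
        r += 1
    return r, s


def is_strong_probable_prime(n: int, a: int) -> bool:
    """Conditions 1 and 2 for odd n > 3 and base 1 < a < n."""
    r, s = decompose(n)
    x = pow(a, s, n)             # a^s mod n
    if x == 1:                   # condition 1
        return True
    for _ in range(r):           # j = 0, 1, ..., r - 1
        if x == n - 1:           # condition 2: a^(2^j * s) = -1 (mod n)
            return True
        x = x * x % n            # move on to a^(2^(j+1) * s)
    return False
```

For instance, $25 = 2^3\cdot 3+1$ passes with $a=7$ because $7^{2\cdot 3}\equiv -1 \pmod{25}$, so $25$ is a strong pseudoprime to base $7$, while $9$ is correctly rejected with $a=2$.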

The conjecture is:

For $b$ big enough, $e<1250000\cdot 2^{-\frac{b}{2}}$.

In particular, $e$ decreases like $2^{-\frac{b}{2}}$, i.e. $e\sim C\cdot 2^{-\frac{b}{2}}$ for some constant $C$.
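Dividing the conjectured error count by the number of trials $N=10^7$ turns it into a per-test error probability $p(b)$, and the constant simplifies to a power of two. As a sanity check, the $b=8$ row agrees:

$$
p(b)\approx\frac{f(b)}{N}=\frac{1250000\cdot 2^{-\frac{b}{2}}}{10^{7}}=\frac{2^{-\frac{b}{2}}}{8}=2^{-\frac{b+6}{2}},
\qquad
p(8)=2^{-7}\approx 0.0078\approx\frac{78144}{10^{7}}.
$$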

I intend to use the algorithm for testing big integers, and it seems that a single test is enough for $b>256$, since $f(256)\approx\frac{1}{272225893536750770770699685945414}$. This is the predicted number of errors in 10,000,000 random primality tests, and for such a small value it is essentially the probability of even one error among them. For $b\ll 256$ one can use multiple tests or an exact algorithm.
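The value of $f(256)$ can be checked with exact rational arithmetic. This is a minimal sketch; `f_exact` is a hypothetical helper that only handles even $b$, where $2^{-b/2}$ is an exact power of two.

```python
from fractions import Fraction

N = 10_000_000  # random tests per bit size, as in the experiment


def f_exact(b: int) -> Fraction:
    """f(b) = 1250000 * 2^(-b/2) as an exact fraction (even b only)."""
    if b % 2:
        raise ValueError("2^(-b/2) is only an exact fraction for even b")
    return Fraction(1250000, 2 ** (b // 2))


f256 = f_exact(256)
print(int(1 / f256))     # integer part of the reciprocal of f(256)
print(float(f256 / N))   # conjectured per-test error probability at b = 256
```

The integer part of $1/f(256)=2^{128}/1250000$ is exactly the denominator quoted above, and the per-test probability is $f(256)/N = 2^{-131}$.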

But how can the conjecture be proved, or at least made plausible?

I will also reward an independent computer test that either supports or contradicts my own results.

I also ran a test with a double Rabin-Miller check and made a diagram: