In a lecture for a course I'm taking, "Introduction to Computational Science", I remember hearing that floating-point numbers are distributed logarithmically. What does that mean, and how can I visualize it?

I have a slide that says:

We assume that all binary numbers are normalized. Between powers of 2, the floating point numbers are equidistant.

I think this is related to the logarithmic distribution or spacing of the floating point numbers, but I don't understand exactly what it means.
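To make the slide's statement concrete, here is a small sketch I could run. It assumes IEEE 754 double precision (what Python's `float` uses on common platforms) rather than whatever format the slide has in mind, and `spacing` is just my own helper name. It prints the gap between a number and the next larger representable number at a few points.

```python
import math

# Gap between x and the next larger representable double.
# math.ulp requires Python 3.9 or newer.
def spacing(x: float) -> float:
    return math.ulp(x)

# Points inside [1, 2), [2, 4), [4, 8) and at the start of [8, 16).
for x in [1.0, 1.5, 1.999, 2.0, 3.0, 4.0, 7.5, 8.0]:
    print(f"x = {x:<6} gap to next float = {spacing(x):.3e}")
```

If I read the slide correctly, the gap should be the same for every point between two consecutive powers of 2, and it should double each time a power of 2 is crossed.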

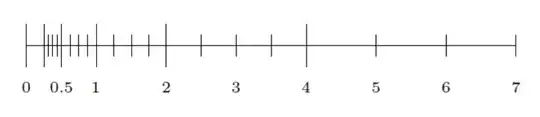

I've also below the statement taken from the same slide this picture:

Apart from the range between $0$ and $0.25$, the density of floating-point numbers seems to decrease as the values get larger, assuming this picture really does represent the distribution of floating-point numbers.
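To check my reading of the picture, I could enumerate every positive value of a toy format. The parameters here (2 fraction bits, exponents from $-2$ to $2$, normalized numbers only) are my own assumptions and are not taken from the slide. I picked them so that the smallest normalized value is $0.25$. `toy_floats` is a hypothetical helper, not a library function.

```python
from fractions import Fraction

def toy_floats(mantissa_bits=2, e_min=-2, e_max=2):
    """Positive normalized values 1.f * 2**e of an assumed toy format."""
    values = []
    for e in range(e_min, e_max + 1):
        for f in range(2 ** mantissa_bits):
            # Normalized significand: leading 1 plus the fraction bits.
            significand = 1 + Fraction(f, 2 ** mantissa_bits)
            values.append(significand * Fraction(2) ** e)
    return values

values = toy_floats()

# List the values that fall between each pair of consecutive powers of 2.
for e in range(-2, 3):
    lo, hi = Fraction(2) ** e, Fraction(2) ** (e + 1)
    in_range = [float(v) for v in values if lo <= v < hi]
    print(f"[{float(lo)}, {float(hi)}): {in_range}")
```

In this toy format every interval between consecutive powers of 2 holds the same number of values, so the values get sparser as the intervals get wider, and nothing is representable between $0$ and $0.25$. I don't know whether the slide's picture was generated this way.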

Why is that?

Why is there this exception between $0$ and $0.25$?