Information entropy is usually defined as

$$\text{I}_b({\bf p}) = -\sum_{\forall i}p_i\log_b(p_i)$$ i.e. the expected value of the negative logarithm of the probabilities.

This works well when we have a finite set of outcomes $i$. For samples drawn from a continuous distribution and stored as floating point numbers, the entropy can still be estimated from a histogram by treating all values within a bin as the same outcome. However, the resulting estimate depends on how the histogram bins are chosen. It would be nice to have an estimate that does not depend on the binning but still gives the correct value for at least some important special cases.
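To make the bin dependence concrete, here is a minimal sketch of the histogram estimate. The helper names `entropy` and `histogram_entropy`, the standard normal test data, and the bin counts are all assumptions chosen for illustration, not part of the question itself.

```python
import numpy as np

def entropy(p, base=2.0):
    """Discrete entropy of (possibly unnormalized) non-negative weights p."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]          # 0 * log(0) is treated as 0
    p = p / p.sum()       # normalize so the weights sum to 1
    return float(-(p * np.log(p)).sum() / np.log(base))

def histogram_entropy(samples, bins, base=2.0):
    """Entropy estimate that treats every value in a bin as one outcome."""
    counts, _ = np.histogram(samples, bins=bins)
    return entropy(counts, base)

# Assumed test data: samples from a standard normal distribution.
rng = np.random.default_rng(0)
samples = rng.normal(size=10_000)

# The same samples give a different estimate for every bin count.
for bins in (4, 16, 64, 256):
    print(bins, histogram_entropy(samples, bins))
```

With equal-width bins the estimate keeps growing as the bins get narrower, which is exactly the dependence the question would like to avoid.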

Which methods or definitions do you think would be suitable to do this?

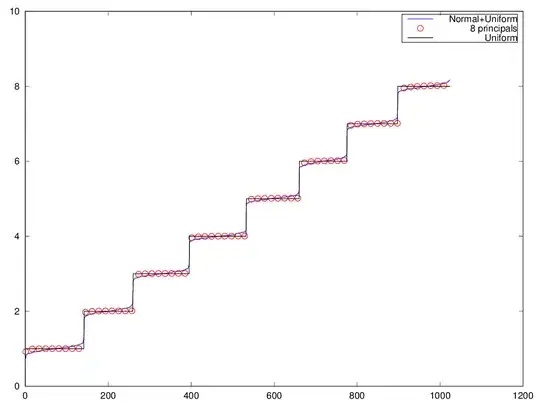

Update ("own work"): Consider the random variable $$X = \mathcal{N}(0,0.071) + U(1,n)\,\,\, \text{where the uniform}\,\,\, U(a,b) \in \{a,\cdots,b\}$$, and $\mathcal{N}(\mu,\sigma)$ is the normal distribution with mean $\mu$ and standard deviation $\sigma$.

Now we calculate a "similarity" or "adjacency" measure between all pairs of samples, as a monotonically decreasing function of some distance between the samples. In our example we experiment with $${\bf A}_{ij} = \exp\left[{-\frac{|x_i-x_j|^3}{s^3}}\right]$$ for some values of $s$.

Then we calculate the 8 largest eigenvalues of $\bf A$. These eigenvalues come out very close to the number of samples $f$ in each uniform bin:

$$\left[\begin{array}{l|llllllll}\text{eig}({\bf A})& 143.65&140.02&131.85&128.17&123.64&118.61&114.26&111.50\\f& 145&142&133&130&125&120&116&113 \end{array}\right] $$
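One way to see why this might happen, as an idealized sketch rather than a proof: suppose $s$ is chosen so that samples in the same cluster have similarity close to 1 and samples in different clusters have similarity close to 0. After sorting the samples, $\bf A$ is then approximately block diagonal with all-ones blocks. Here $f_k$ (the number of samples in cluster $k$), ${\bf J}_{f_k}$ (the $f_k \times f_k$ all-ones matrix) and ${\bf 1}$ (the all-ones vector) are notation introduced only for this sketch.

$$
{\bf A} \approx \begin{bmatrix} {\bf J}_{f_1} & & \\ & \ddots & \\ & & {\bf J}_{f_8} \end{bmatrix},
\qquad
{\bf J}_{f_k}{\bf 1} = f_k\,{\bf 1}
$$

Each block ${\bf J}_{f_k}$ has rank one, so its only nonzero eigenvalue is $f_k$. In this idealization the 8 largest eigenvalues of $\bf A$ are the cluster counts, and normalizing them gives $f_k / \sum_j f_j$, the empirical probabilities. Because the within-cluster similarities are slightly below 1 in practice, the observed eigenvalues fall a little short of the counts.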

If we normalize these (so they sum to 1) and calculate the entropy, we get: $$\text{I}_2(\text{eig}{\bf A}) = 2.9946 \hspace{1cm} \text{I}_2(p) = 2.9948$$ Both are very close to the theoretical entropy of 3 bits for 8 equiprobable states.
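The experiment can be reproduced roughly as follows. This is a sketch under stated assumptions: the value `s = 0.5`, the random seed, and the helper names `entropy_bits` and `similarity_matrix` are not given in the post, so the numbers it prints will differ from the table above.

```python
import numpy as np

def entropy_bits(weights):
    """Entropy in bits of non-negative weights after normalizing to sum 1."""
    w = np.asarray(weights, dtype=float)
    w = w[w > 0]
    p = w / w.sum()
    return float(-(p * np.log2(p)).sum())

def similarity_matrix(x, s):
    """A_ij = exp(-|x_i - x_j|^3 / s^3), as defined in the text."""
    d = np.abs(x[:, None] - x[None, :])
    return np.exp(-(d / s) ** 3)

rng = np.random.default_rng(0)   # assumed seed
n_states, n_samples = 8, 1024
s = 0.5                          # assumed scale; the post only says "some values of s"

# X = N(0, 0.071) + U(1, n), with U uniform on the integers {1, ..., n}.
levels = rng.integers(1, n_states + 1, size=n_samples)
x = levels + rng.normal(0.0, 0.071, size=n_samples)

A = similarity_matrix(x, s)
eigvals = np.linalg.eigvalsh(A)        # A is symmetric; eigenvalues ascending
top = eigvals[-n_states:][::-1]        # the 8 largest, in descending order

# Number of samples per integer level, sorted to line up with the eigenvalues.
counts = np.sort(np.bincount(levels, minlength=n_states + 1)[1:])[::-1]

h_eig = entropy_bits(top)
h_counts = entropy_bits(counts)
print("eigenvalues:", np.round(top, 2))
print("counts:     ", counts)
print("entropy from eigenvalues:", h_eig)
print("entropy from counts:     ", h_counts)
```

Note that no bin edges appear anywhere in this computation. The only free parameter is the scale $s$, which plays a role similar to a kernel bandwidth.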

Could this maybe be used somehow?

Below is a picture of the sorted simulation (1024 samples) and its projection onto the 8 principal components: