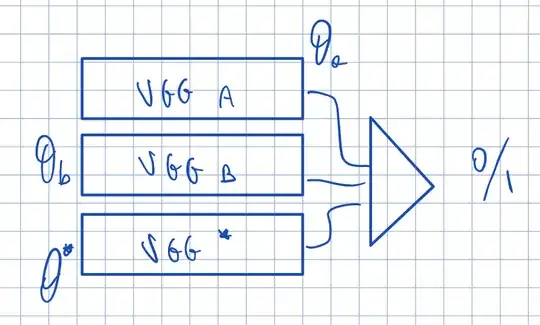

I would like to create 3 different VGGs with a shared classifier. Basically, each of these architectures has only the convolutions, and then I combine all the nets, with a classifier.

For a better explanation, let’s see this image:

I have no idea on how to do this in Pytorch. Do you have any examples that can I study? Is this a case of weights sharing?

Edit: my actual code. Do you think is correct?

class VGGBlock(nn.Module):

def __init__(self, in_channels, out_channels,batch_norm=False):

super(VGGBlock,self).__init__()

conv2_params = {'kernel_size': (3, 3),

'stride' : (1, 1),

'padding' : 1

}

noop = lambda x : x

self._batch_norm = batch_norm

self.conv1 = nn.Conv2d(in_channels=in_channels,out_channels=out_channels , **conv2_params)

self.bn1 = nn.BatchNorm2d(out_channels) if batch_norm else noop

self.conv2 = nn.Conv2d(in_channels=out_channels,out_channels=out_channels, **conv2_params)

self.bn2 = nn.BatchNorm2d(out_channels) if batch_norm else noop

self.max_pooling = nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))

@property

def batch_norm(self):

return self._batch_norm

def forward(self,x):

x = self.conv1(x)

x = self.bn1(x)

x = F.relu(x)

x = self.conv2(x)

x = self.bn2(x)

x = F.relu(x)

x = self.max_pooling(x)

return x

class VGG16(nn.Module):

def __init__(self, input_size, num_classes=1,batch_norm=False):

super(VGG16, self).__init__()

self.in_channels,self.in_width,self.in_height = input_size

self.block_1 = VGGBlock(self.in_channels,64,batch_norm=batch_norm)

self.block_2 = VGGBlock(64, 128,batch_norm=batch_norm)

self.block_3 = VGGBlock(128, 256,batch_norm=batch_norm)

self.block_4 = VGGBlock(256,512,batch_norm=batch_norm)

@property

def input_size(self):

return self.in_channels,self.in_width,self.in_height

def forward(self, x):

x = self.block_1(x)

x = self.block_2(x)

x = self.block_3(x)

x = self.block_4(x)

x = torch.flatten(x,1)

return x

class VGG16Classifier(nn.Module):

def __init__(self, num_classes=1,classifier = None,batch_norm=False):

super(VGG16Classifier, self).__init__()

self._vgg_a = VGG16((1,32,32),batch_norm=True)

self._vgg_b = VGG16((1,32,32),batch_norm=True)

self._vgg_star = VGG16((1,32,32),batch_norm=True)

self.classifier = classifier

if (self.classifier is None):

self.classifier = nn.Sequential(

nn.Linear(2048, 2048),

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Linear(2048, 512),

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Linear(512, num_classes)

)

def forward(self, x1,x2,x3):

op1 = self._vgg_a(x1)

op2 = self._vgg_b(x2)

op3 = self._vgg_star(x3)

x1 = self.classifier(op1)

x2 = self.classifier(op2)

x3 = self.classifier(op3)

return x1,x2,x3

return xc

model1 = VGG16((1,32,32),batch_norm=True)

model2 = VGG16((1,32,32),batch_norm=True)

model_star = VGG16((1,32,32),batch_norm=True)

model_combo = VGG16Classifier(model1,model2,model_star)

EDIT: I changed the forward of VGG16Classifier, because previously I took the output of the 3 VGG, I made a concat, and I passed to a classifier. Instead, now we have the same classifier for each VGG.

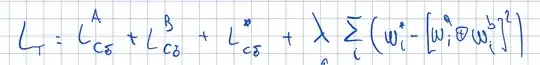

Now, my question is, I want to implement this loss:

And here is my attempt of implementation:

And here is my attempt of implementation:

class CombinedLoss(nn.Module):

def __init__(self, loss_a, loss_b, loss_star, _lambda=1.0):

super().__init__()

self.loss_a = loss_a

self.loss_b = loss_b

self.loss_star = loss_star

self.register_buffer('_lambda',torch.tensor(float(_lambda),dtype=torch.float32))

def forward(self,y_hat,y):

return (self.loss_a(y_hat[0],y[0]) +

self.loss_b(y_hat[1],y[1]) +

self.loss_combo(y_hat[2],y[2]) +

self._lambda * torch.sum(model_star.weight - torch.pow(torch.cdist(model1.weight+model2.weight), 2)))

Probably the part of lamba*sum is wrong, however, my question is, in this way, I have to split my dataset in 3 parts to obtain y[0], y1 and y2, right? If is not possible to ask in this post, I will create a new question.