I'm having trouble creating a heatmap from a CSV file.

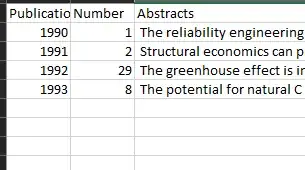

csv data is in a format like below

here is a code

years = np.array(datadf.PublicationYear)

sns.set(font_scale=2)

wordlist = ['greenhouse_gas', 'pollution', 'resilience', 'urban','city', 'environmental_impacts', 'climate_change',

'adaptation','mitigation','carbon', 'ghg_emissions','sustainable','sustainability','lca']

word_tuples = [('urban','city','urban'), ('greenhouse_gas','ghg_emissions','greenhouse_gas'),('sustainable','sustainability','sustainability')]

use_wordlist = True

word_number = 30

freqdata = []

agg_keys = []

for i in np.arange(len(abstrct)):

ngram_model = Word2Vec(ngram[[abstrct[i]]], size=100, min_count=1)

ngram_model_counter = Counter()

for key in ngram_model.wv.vocab.keys():

if key not in stoplist:

if use_wordlist:

if key in wordlist:

if len(key.split("_")) > N:

ngram_model_counter[key] += ngram_model.wv.vocab[key].count

else:

if len(key.split("_")) > N:

ngram_model_counter[key] += ngram_model.wv.vocab[key].count

freqdf = pd.DataFrame(ngram_model_counter.most_common(word_number))

if len(freqdf.index) == 0:

freqdf[0] = wordlist

freqdf[1] = 0

for w in word_tuples:

if w[0] in wordlist and w[1] in wordlist:

f = 0

drops_w = []

for j in np.arange(len(freqdf.index)):

if freqdf.iloc[j][0] == w[0] or freqdf.iloc[j][0] == w[1]:

f += freqdf.iloc[j][1]

drops_w.append(j)

freqdf = freqdf.drop(drops_w, axis = 0)

append_data = pd.DataFrame({0:[w[2]],1:[f]})

freqdf = freqdf.append(append_data,ignore_index=True)

freqdf = freqdf.reset_index(drop=True)

#Normalizing the frequency by the total number of non-stopword tokens

freqdf['prob'] = freqdf[1]/(len(abstrct[i]))

agg_keys += np.array(freqdf[0]).tolist()

freqdata.append(freqdf)

unqkeys = np.unique(np.array(agg_keys))

matrix = np.zeros([unqkeys.size,len(freqdata)])

for i in np.arange(years.size):

for j in np.arange(unqkeys.size):

for k in np.arange(len(freqdata[i].index)):

if freqdata[i].iloc[k][0] == unqkeys[j]:

matrix[j,i] = freqdata[i].iloc[k]['prob']

fig, ax = plt.subplots(figsize = (40, 26))

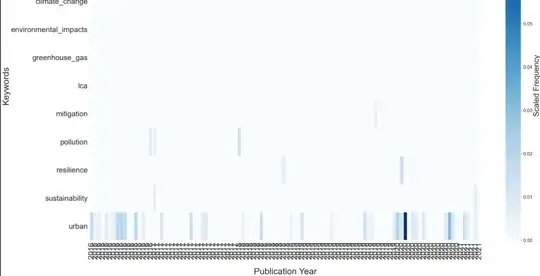

ax = sns.heatmap(matrix, annot = False,linewidths = .9,cmap = 'Blues' ,cbar_kws={'label': 'Scaled Frequency'})

ax.figure.axes[-1].yaxis.label.set_size(35)

ax.grid(False)

ax.set_yticks(np.arange(len(unqkeys))+0.5) #Adding 0.5 offset

ax.set_xticks(np.arange(len(years))+0.5)

ax.set_yticklabels(unqkeys,rotation= 0, fontsize = 34.0)

ax.set_xticklabels(years,rotation='vertical', fontsize = 35.0)

ax.set_xlabel('Publication Year', fontsize = 40.0, labelpad = 40)

ax.set_ylabel('Keywords',fontsize = 40.0, labelpad = 5)

plt.show()

image_path = os.path.join(base_dir, 'plots/') + data_dir + '-heatmap.jpg'

fig.savefig(image_path)

It generates a graph below. How could it make x axis like [2016,2017,2018,2019,2020,2021]
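One possible approach, sketched under assumptions: `matrix` has one column per abstract (`len(freqdata)` columns), so a year appears once for every abstract published in it. Collapsing the columns that share a publication year gives one column per year. The helper `aggregate_by_year` below is hypothetical, and it assumes `years[i]` is the publication year of `abstrct[i]`, i.e. `len(years) == matrix.shape[1]`.

```python
import numpy as np


def aggregate_by_year(matrix, years, how="mean"):
    """Collapse per-abstract columns into one column per publication year.

    Hypothetical helper: assumes column i of `matrix` belongs to the
    abstract published in years[i].
    """
    if how not in ("mean", "sum"):
        raise ValueError("how must be 'mean' or 'sum'")
    matrix = np.asarray(matrix, dtype=float)
    years = np.asarray(years)
    if years.size != matrix.shape[1]:
        raise ValueError("need exactly one year per matrix column")

    unique_years = np.unique(years)  # sorted, one entry per distinct year
    out = np.zeros((matrix.shape[0], unique_years.size))
    for k, year in enumerate(unique_years):
        cols = matrix[:, years == year]  # all abstracts from this year
        out[:, k] = cols.mean(axis=1) if how == "mean" else cols.sum(axis=1)
    return unique_years, out


# Toy example: 2 keywords x 3 abstracts (two from 2016, one from 2017)
m = np.array([[1.0, 3.0, 2.0],
              [0.0, 4.0, 6.0]])
labels, by_year = aggregate_by_year(m, [2016, 2016, 2017])
print(labels)   # [2016 2017]
print(by_year)  # mean per year: [[2, 2], [2, 6]]
```

With the question's variables, this would mean calling `year_labels, year_matrix = aggregate_by_year(matrix, years)`, passing `year_matrix` to `sns.heatmap`, and using `np.arange(len(year_labels)) + 0.5` and `year_labels` in the `set_xticks`/`set_xticklabels` calls. Averaging (the default) keeps years with many abstracts from dominating the color scale; `how="sum"` gives raw totals instead.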