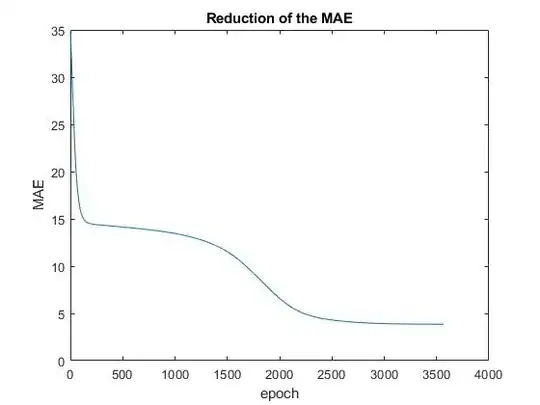

This may seem to be a silly question. But I just wonder why the MAE doesn't reduce to values close to 0.

It's the result of an MLP with 2 hidden layers and 6 neurons per hidden layer, trying to estimate one outputvalue depending on three input values.

Why is the NN (simple feedforward and backprop, nothing special) not able to maybe even overfit and meet the desired training values?

Costfunction = $0.5 (Target - Modeloutput)^2$

EDIT:

Indeed I found an inconsistency in the inputdata.

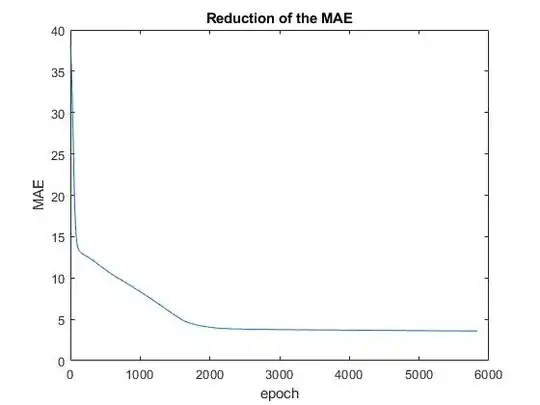

Already cheering, I was hoping to see a better result, after fixing the issue with the input data. But what I got is this:

I'm using a Minibatch-SGD and now I think it might get trapped in a local minimum. I read about Levenberg-Marquardt algorithm, which is said to be stable and fast. Is it a better algorithm for global minimum detection?