I have this script:

import requests

from requests import get

from bs4 import BeautifulSoup

import csv

import pandas as pd

f = open('olanda.csv', 'wb')

writer = csv.writer(f)

url = ('https://www......')

response = get(url)

soup = BeautifulSoup(response.text, 'html.parser')

type(soup)

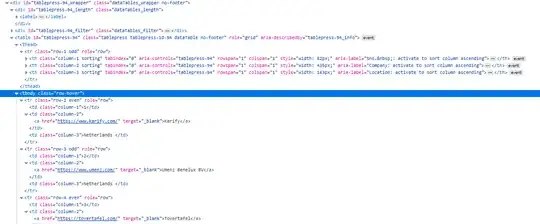

table = soup.find('table', id='tablepress-94').text.strip()

print(table)

writer.writerow(table.split(), delimiter = ',')

f.close()

When it writes to the CSV file, everything ends up in a single cell, like this:

```
Sno.,CompanyLocation,1KarifyNetherlands,2Umenz,Benelux,BVNetherlands,3TovertafelNetherlands,4Behandeling,BegrepenNetherlands,5MEXTRANetherlands,6Sleep.aiNetherlands,7OWiseNetherlands,8Healthy,WorkersNetherlands,9&thijs,|,thuis,in,jouw,situatieNetherlands,10HerculesNetherlands, etc.
```
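Values such as `1KarifyNetherlands` suggest that `.text` on the whole table glues the cell texts together, and `split()` then breaks on the spaces inside names (`Umenz,Benelux,BV`). A minimal sketch of reading the table cell by cell instead, assuming the real table uses ordinary `<tr>`/`<th>`/`<td>` markup; the inline HTML below is a hypothetical stand-in for the page, not its actual contents:

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the scraped page; assumed to use the same table id.
html = """
<table id="tablepress-94">
  <tr><th>Sno.</th><th>Company</th><th>Location</th></tr>
  <tr><td>1</td><td>Karify</td><td>Netherlands</td></tr>
  <tr><td>2</td><td>Umenz Benelux BV</td><td>Netherlands</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
table = soup.find("table", id="tablepress-94")

# One list per <tr>, one string per <th>/<td>, so cells are never merged
# and spaces inside a cell (e.g. "Umenz Benelux BV") are preserved.
rows = [
    [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
    for tr in table.find_all("tr")
]

for row in rows:
    print(row)
```

Each inner list can then be passed to `writer.writerow`, or the whole list to `writer.writerows`.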

I want the output in a single column, with each comma-separated value on its own row.
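`writerow` writes its whole argument as one row, so a list of values becomes one line with many columns. To get one value per line in a single column, each value has to be wrapped in its own one-element row. A minimal sketch, writing to an in-memory buffer so the result is easy to inspect; the `values` list is a hypothetical sample, not the real scraped data:

```python
import csv
import io

# Hypothetical sample of the scraped values.
values = ["Sno.", "Company", "Location", "1", "Karify", "Netherlands"]

buffer = io.StringIO()
writer = csv.writer(buffer)

# Wrap each value in a one-element list: one row per value, one column overall.
writer.writerows([value] for value in values)

print(buffer.getvalue())
```

With a real file, the `io.StringIO()` buffer would be replaced by a file opened in text mode.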

I tried passing `delimiter = ','`, but I got:

```
TypeError: a bytes-like object is required, not 'str'
```
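In Python 3 the `csv` module writes `str`, not `bytes`, so a file opened with `'wb'` produces this error; the `csv` documentation says the file should be opened in text mode with `newline=''`. Also, `delimiter` is a parameter of `csv.writer(...)`, not of `writerow`. A minimal sketch, assuming Python 3 and reusing the `olanda.csv` filename from the question with hypothetical rows:

```python
import csv

# Hypothetical rows; in the question these would come from the scraped table.
rows = [["Sno.", "Company", "Location"], ["1", "Karify", "Netherlands"]]

# Text mode ('w'), not binary ('wb'); newline='' lets csv control line endings.
with open("olanda.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f, delimiter=",")  # delimiter belongs here, not in writerow()
    writer.writerows(rows)

# Read the file back to check what was written.
with open("olanda.csv", newline="", encoding="utf-8") as f:
    read_back = list(csv.reader(f))

print(read_back)
```

The `with` blocks close the file automatically, so no explicit `f.close()` is needed.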

How can I do this? Thanks!