My question is somewhat similar to this question, but I am not satisfied with the answer there. I have 100 samples; each sample consists of 6 time series (say [X1, X2, X3, X4, X5, Y]) of length 200, and the samples are independent of each other. I want to build a time-series model that takes the inputs of one sample, of shape (200, 5), and predicts Y, of shape (200, 1).
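For reference, the data described above can be arranged as one 3D array per role: inputs of shape (samples, time steps, features) = (100, 200, 5) and targets of shape (100, 200, 1). The sketch below assumes each raw sample is a NumPy array of shape (200, 6) with Y in the last column, and uses randomly generated placeholder data (hypothetical, not the real samples) with Y centred around 40000. It also standardizes each feature with statistics pooled over all samples and time steps. That matters because a neural network trained on an unscaled target of that magnitude often struggles, so checking the scaling is a common first step. The helper names `stack_samples` and `fit_scaler` are illustrative only.

```python
import numpy as np

def stack_samples(samples):
    """Stack a list of (T, 6) arrays with columns [X1..X5, Y] into X (N, T, 5) and Y (N, T, 1)."""
    data = np.stack(samples)      # (N, T, 6)
    X = data[:, :, :5]            # the five input series
    Y = data[:, :, 5:]            # slice keeps the last axis, so Y is (N, T, 1)
    return X, Y

def fit_scaler(arr):
    """Per-feature mean and std, pooled over all samples and all time steps."""
    mean = arr.mean(axis=(0, 1), keepdims=True)
    std = arr.std(axis=(0, 1), keepdims=True)
    std[std == 0] = 1.0           # avoid division by zero for constant features
    return mean, std

# Hypothetical placeholder data: 100 samples of length 200, Y around 40000.
rng = np.random.default_rng(0)
scale = np.array([1, 1, 1, 1, 1, 10_000.0])
offset = np.array([0, 0, 0, 0, 0, 40_000.0])
samples = [rng.normal(size=(200, 6)) * scale + offset for _ in range(100)]

X, Y = stack_samples(samples)
x_mean, x_std = fit_scaler(X)
y_mean, y_std = fit_scaler(Y)
X_scaled = (X - x_mean) / x_std
Y_scaled = (Y - y_mean) / y_std

# A model trained on Y_scaled gives predictions in scaled units;
# map them back with y_pred * y_std + y_mean.
print(X.shape, Y.shape)
```

With real data, the scaler statistics would normally be computed on the training samples only and then reused for the validation and test samples.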

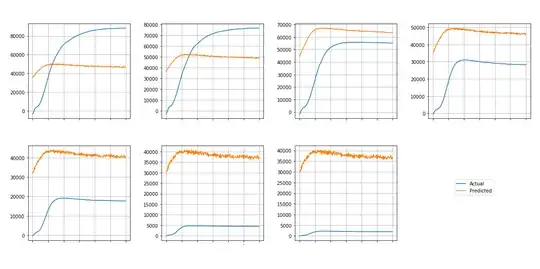

An LSTM relates the various windows (in my case, samples) to each other while building the model, which is undesirable here because my samples are independent. I tried it anyway but could not achieve good results. The image above shows the actual and predicted values for some of the samples: for most of them, the predictions fall in a narrow band between 35000 and 50000. My intuition is that the LSTM has learned only from the most recently passed sample and is making predictions based on that.
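One way to keep samples from being related to each other is to make sure no training sequence ever spans two samples. Each whole sample can be fed as its own sequence; if shorter windows are wanted instead (for example, to get more training examples out of 100 samples), they can be cut within each sample only. The sketch below is a minimal NumPy illustration of that idea, assuming the (N, T, F) layout above and a model that does not carry hidden state from one input sequence to the next. The function name `windows_within_samples` and the window length of 30 are hypothetical choices.

```python
import numpy as np

def windows_within_samples(X, Y, window):
    """Cut X (N, T, F) into overlapping windows that never cross a sample boundary.

    Returns inputs of shape (N * (T - window + 1), window, F) and, for each window,
    the target at the window's last time step, shape (N * (T - window + 1), 1).
    """
    n, t, _ = X.shape
    xs, ys = [], []
    for i in range(n):                    # each sample is windowed on its own
        for end in range(window, t + 1):  # window covers steps end-window .. end-1
            xs.append(X[i, end - window:end])
            ys.append(Y[i, end - 1])
    return np.array(xs), np.array(ys)

# Hypothetical check data: every time step of sample i holds the value i,
# so a window that mixed two samples would contain two different values.
X = np.broadcast_to(np.arange(100.0)[:, None, None], (100, 200, 5))
Y = X[:, :, :1]
Xw, Yw = windows_within_samples(X, Y, window=30)
print(Xw.shape, Yw.shape)  # (17100, 30, 5) (17100, 1)
```

Each sample of length 200 yields 200 - 30 + 1 = 171 windows, so 100 samples give 17100 windows, none of which mixes data from two samples.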

Furthermore, I thought of using ARIMAX, but the same problem would arise there as well. I also tried a CNN, which predicted better than the LSTM but still not close to the actual values.
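For the linear, ARIMAX-style direction, the cross-sample concern can be avoided the same way: build the lagged inputs inside each sample, then fit one shared set of coefficients on all samples pooled together. The sketch below is a deliberately simpler swapped-in technique, a linear distributed-lag regression (only lagged exogenous inputs plus an intercept, with no autoregressive, moving-average, or differencing terms), fitted with `np.linalg.lstsq`. The helper names and the synthetic test relationship are hypothetical; it only shows the pooling and alignment mechanics.

```python
import numpy as np

def lagged_design(X, lags):
    """Design matrix from X (N, T, F) using lags 0..lags-1 built within each sample.

    Rows exist only for steps t >= lags - 1, so no lag reaches into another sample.
    Columns: [lag-0 features, lag-1 features, ..., intercept].
    """
    n, t, _ = X.shape
    rows = []
    for i in range(n):
        for step in range(lags - 1, t):
            past = X[i, step - lags + 1:step + 1][::-1]  # reversed so lag 0 comes first
            rows.append(np.concatenate([past.ravel(), [1.0]]))
    return np.array(rows)

def fit_pooled_lag_model(X, Y, lags):
    """One least-squares fit shared by all samples."""
    A = lagged_design(X, lags)
    b = Y[:, lags - 1:, 0].reshape(-1)  # same sample-major, time-minor order as the rows
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    return coef

# Hypothetical noise-free data: y[t] = 2*x1[t] - 0.5*x1[t-1] + 0.3*x2[t-2] + 1
rng = np.random.default_rng(1)
X = rng.normal(size=(20, 50, 2))
Y = np.zeros((20, 50, 1))
Y[:, 2:, 0] = 2 * X[:, 2:, 0] - 0.5 * X[:, 1:-1, 0] + 0.3 * X[:, :-2, 1] + 1.0

coef = fit_pooled_lag_model(X, Y, lags=3)
# Predictions per sample, shape (20, 48): steps 2..49 of each sample.
Y_hat = (lagged_design(X, 3) @ coef).reshape(20, -1)
```

The coefficient order is [x1[t], x2[t], x1[t-1], x2[t-1], x1[t-2], x2[t-2], intercept], so this synthetic example should recover roughly [2, 0, -0.5, 0, 0, 0.3, 1].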

So my question is: what is the best strategy to train a model that takes 2D time-series data as input, learns the temporal dependency between X and Y, and predicts the time-series sequence of Y?