I am working on a clustering problem with 11 features. About 70-80% of the values in my data frame are zeros. The data had outliers, which I capped at the 0.5 and 0.95 percentiles. I then ran k-means (Python) on the data and got a very unusual cluster shape that looks like a cuboid. I am not sure whether this is really a cluster or whether something has gone wrong.
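For reference, here is a minimal sketch of the capping step, assuming a pandas DataFrame and per-column quantile clipping (winsorizing). The function name `cap_outliers` is hypothetical, and the quantiles passed in the usage lines are illustrative placeholders, not necessarily the exact cut-offs I used.

```python
import numpy as np
import pandas as pd


def cap_outliers(df: pd.DataFrame, lower_q: float, upper_q: float) -> pd.DataFrame:
    """Clip each column to its own [lower_q, upper_q] quantile range."""
    lower = df.quantile(lower_q)  # per-column lower bounds (Series indexed by column name)
    upper = df.quantile(upper_q)  # per-column upper bounds
    # axis=1 aligns the bound Series with the DataFrame's columns
    return df.clip(lower=lower, upper=upper, axis=1)


# Hypothetical zero-heavy data: roughly 75% of entries are zero.
rng = np.random.default_rng(0)
values = rng.exponential(size=(200, 3))
values[rng.random(values.shape) < 0.75] = 0.0
data = pd.DataFrame(values, columns=["a", "b", "c"])

capped = cap_outliers(data, lower_q=0.05, upper_q=0.95)  # illustrative quantiles
```

With this many zeros, any low quantile is itself 0, so in practice only the upper cap changes the data.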

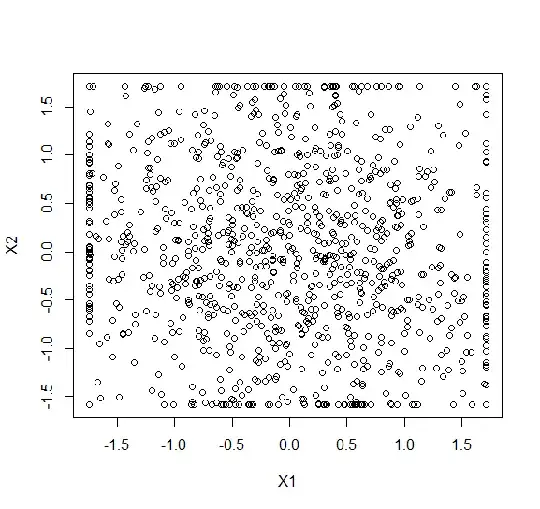

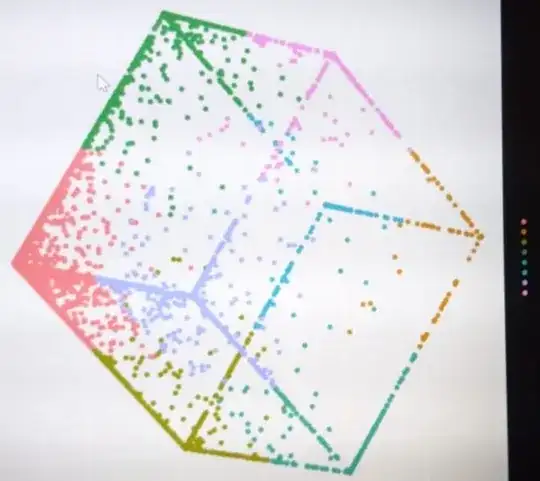

My main concern is this: why does it look like a cuboid, and why are the axes orthogonal?

One thing to note: I first reduced the data to two dimensions using PCA and ran the clustering on that reduced data. The plots shown here are of the 2-D PCA data.
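A minimal sketch of that pipeline with scikit-learn, assuming no scaling step (none is mentioned above) and synthetic zero-heavy data standing in for the real 11 features. The helper name `pca_then_kmeans` is hypothetical. The last line checks a property relevant to the orthogonal-axes question: PCA's principal directions are orthonormal by construction, so the two plotted axes are always at right angles.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA


def pca_then_kmeans(X, n_clusters, random_state=0):
    """Project X onto its first two principal components, then run k-means there."""
    pca = PCA(n_components=2)
    X_2d = pca.fit_transform(X)  # PCA scores: one row per sample, two columns
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=random_state)
    labels = km.fit_predict(X_2d)  # clustering happens in 2-D, not in the original 11-D space
    return X_2d, labels, pca


# Hypothetical stand-in for the real data: 11 features, roughly 75% zeros.
rng = np.random.default_rng(42)
X = rng.exponential(size=(500, 11))
X[rng.random(X.shape) < 0.75] = 0.0

X_2d, labels, pca = pca_then_kmeans(X, n_clusters=3)

# Principal directions are orthonormal, so this product is numerically the identity.
print(np.round(pca.components_ @ pca.components_.T, 6))
```

The two score columns in `X_2d` are also uncorrelated, which is another way of saying the PCA axes are orthogonal.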

Edit: I chose k using the silhouette index in Python.
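A minimal sketch of choosing k by silhouette, assuming scikit-learn's `silhouette_score` and a candidate range of 2 to 10; the helper name `choose_k_by_silhouette`, the range, and the toy blobs are hypothetical and only illustrate the procedure.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score


def choose_k_by_silhouette(X, k_values=range(2, 11), random_state=0):
    """Fit k-means for each candidate k and return the k with the highest mean silhouette."""
    scores = {}
    for k in k_values:  # silhouette is undefined for a single cluster, so candidates start at 2
        labels = KMeans(n_clusters=k, n_init=10, random_state=random_state).fit_predict(X)
        scores[k] = silhouette_score(X, labels)
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Toy data with three well-separated groups (illustrative only).
X, _ = make_blobs(
    n_samples=300, centers=[[0, 0], [10, 10], [-10, 10]], cluster_std=0.5, random_state=0
)
best_k, scores = choose_k_by_silhouette(X)
print(best_k, {k: round(s, 3) for k, s in scores.items()})
```

Passing the 2-D PCA scores as `X` would score each k in the same space where the clustering itself was done.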