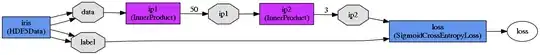

I'm wondering how to do multitask learning in Caffe. Should I simply use a `SigmoidCrossEntropyLoss` or `EuclideanLoss` output layer and define more than one output?

For example, is the following architecture valid (3 outputs, i.e. 3 tasks learned concurrently)?

Corresponding prototxt file:

    name: "IrisNet"
    layer {
      name: "iris"
      type: "HDF5Data"
      top: "data"
      top: "label"
      include {
        phase: TRAIN
      }
      hdf5_data_param {
        source: "iris_train_data.txt"
        batch_size: 1
      }
    }
    layer {
      name: "iris"
      type: "HDF5Data"
      top: "data"
      top: "label"
      include {
        phase: TEST
      }
      hdf5_data_param {
        source: "iris_test_data.txt"
        batch_size: 1
      }
    }
    layer {
      name: "ip1"
      type: "InnerProduct"
      bottom: "data"
      top: "ip1"
      param {
        lr_mult: 1
      }
      param {
        lr_mult: 2
      }
      inner_product_param {
        num_output: 50
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layer {
      name: "ip2"
      type: "InnerProduct"
      bottom: "ip1"
      top: "ip2"
      param {
        lr_mult: 1
      }
      param {
        lr_mult: 2
      }
      inner_product_param {
        num_output: 3
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layer {
      name: "loss"
      type: "SigmoidCrossEntropyLoss"
      # type: "EuclideanLoss"
      # type: "HingeLoss"
      bottom: "ip2"
      bottom: "label"
      top: "loss"
    }
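
For comparison, here is a rough sketch of a more explicit variant that could replace the single `loss` layer: slice `ip2` and `label` into one column per task and give each task its own loss layer. It assumes `label` is stored in the HDF5 file with shape N x 3 (one column per task). The layer and blob names (`slice_ip2`, `slice_label`, `out_1`, `label_1`, `loss_1`, ...) and the `loss_weight` values are hypothetical placeholders, not something taken from a tested setup.

```prototxt
# Assumption: "ip2" and "label" both have shape N x 3.
# Split the 3 network outputs into one blob per task.
layer {
  name: "slice_ip2"
  type: "Slice"
  bottom: "ip2"
  top: "out_1"
  top: "out_2"
  top: "out_3"
  slice_param {
    axis: 1
    slice_point: 1
    slice_point: 2
  }
}
# Split the 3 label columns the same way.
layer {
  name: "slice_label"
  type: "Slice"
  bottom: "label"
  top: "label_1"
  top: "label_2"
  top: "label_3"
  slice_param {
    axis: 1
    slice_point: 1
    slice_point: 2
  }
}
# One loss layer per task; loss_weight sets each task's relative importance.
layer {
  name: "loss_1"
  type: "SigmoidCrossEntropyLoss"
  bottom: "out_1"
  bottom: "label_1"
  top: "loss_1"
  loss_weight: 1
}
layer {
  name: "loss_2"
  type: "SigmoidCrossEntropyLoss"
  bottom: "out_2"
  bottom: "label_2"
  top: "loss_2"
  loss_weight: 1
}
layer {
  name: "loss_3"
  type: "SigmoidCrossEntropyLoss"
  bottom: "out_3"
  bottom: "label_3"
  top: "loss_3"
  loss_weight: 1
}
```

With separate loss layers, each task could in principle use a different loss type (for instance `EuclideanLoss` for a regression task), and Caffe would report each `top` loss separately in the training log.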