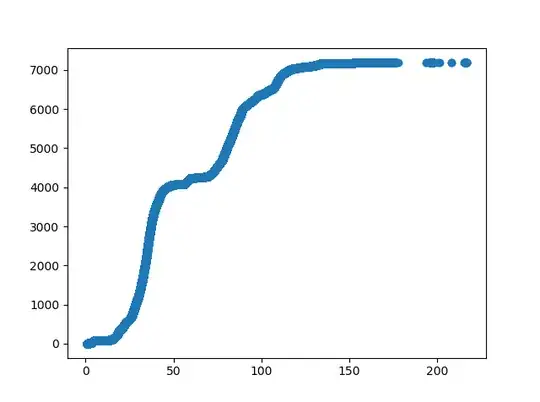

I am currently creating a neural network to learn a function of the form shown above (the data I want to learn). x corresponds to the x axis and y to the y axis (one independent and one dependent variable).

I am using both Keras and TensorFlow, and with both scripts I get the following result:

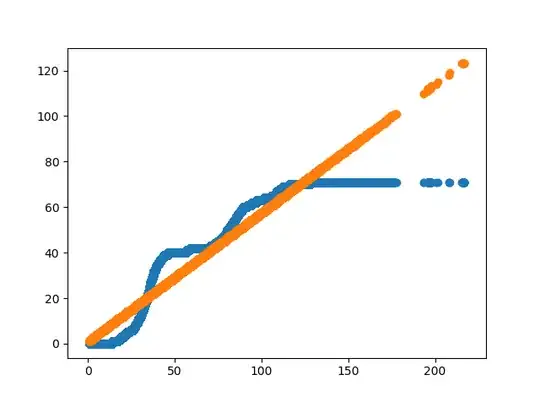

In the plot, the orange line shows the predictions and the blue line shows the data. Somehow my neural network doesn't capture the non-linearity of the data and only fits a linear function. Do you have a suggestion as to what I am doing wrong? Also, is the architecture appropriate for this task, or are there problems with it?

For reference, here is a snippet of the architecture I am using in Keras:

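```python
from tensorflow.keras.layers import Dense, LeakyReLU
from tensorflow.keras.models import Sequential


def individual_model(keys, labels, config):
    model = Sequential()
    # First hidden layer: 32 units fed by the single input feature x
    model.add(Dense(32, input_dim=1))
    model.add(LeakyReLU())

    # Two more hidden layers of 32 units each
    for i in range(2):
        # if str(i) not in config:
        #     break
        model.add(Dense(32))
        model.add(LeakyReLU())

    # Single linear output unit for the regression target y
    model.add(Dense(1))

    # max_absolute_error is a custom metric defined elsewhere (not shown here)
    model.compile(optimizer='adam', loss='mse',
                  metrics=[max_absolute_error, 'mse', 'mae'])
    model.fit(keys, labels, epochs=100, batch_size=32, verbose=1)
    return model
```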
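One step the snippet does not include is scaling the data. The post does not state the range of x or y, so this is an assumption: if x spans a large range, unscaled inputs may leave a small LeakyReLU network stuck near a linear fit within 100 epochs. Below is a minimal numpy sketch of standardizing `keys` and `labels` before training and undoing the scaling on predictions. The helper names `fit_standardizer`, `standardize` and `unstandardize` are hypothetical, not part of Keras.

```python
import numpy as np


def fit_standardizer(values):
    """Return the mean and standard deviation used to standardize `values`."""
    values = np.asarray(values, dtype=float)
    mean = values.mean(axis=0)
    std = values.std(axis=0)
    # Guard against a constant column, which would otherwise divide by zero.
    std = np.where(std == 0, 1.0, std)
    return mean, std


def standardize(values, mean, std):
    """Shift and scale `values` to zero mean and unit variance."""
    return (np.asarray(values, dtype=float) - mean) / std


def unstandardize(values, mean, std):
    """Map standardized values (e.g. model predictions) back to the original units."""
    return np.asarray(values, dtype=float) * std + mean


# Hypothetical usage with individual_model from the snippet above:
# x_mean, x_std = fit_standardizer(keys)
# y_mean, y_std = fit_standardizer(labels)
# model = individual_model(standardize(keys, x_mean, x_std),
#                          standardize(labels, y_mean, y_std), config)
# preds = unstandardize(model.predict(standardize(keys, x_mean, x_std)),
#                       y_mean, y_std)
```

The scaling statistics are computed once from the training data and reused for prediction, so the model always sees inputs on the same scale it was trained on.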