As a web developer, I have become increasingly interested in data science and machine learning, so much so that I have decided to build a lab at home.

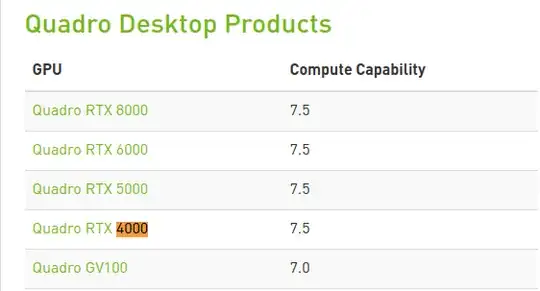

I have come across the Quadro RTX 4000 and am wondering how well it would run ML frameworks on Ubuntu Linux. Are the necessary drivers available on Linux so that ML frameworks can take advantage of this card?

LINUX X64 (AMD64/EM64T) DISPLAY DRIVER

This is the only driver I could find, but it is labeled a "Display Driver", so I am not sure whether it enables ML frameworks to use this GPU for acceleration. Will it also work on Intel-based processors?

Any guidance would be greatly appreciated.