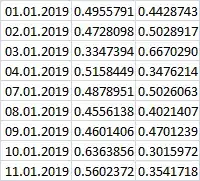

Even if the inputs to a neural network are scaled or normalised, the raw output values can still fall outside that range.

In your case, the output values are being used to make a binary YES/NO decision, but the raw values cannot necessarily be interpreted as probabilities! They are merely the final activations of the network.

To get what you expect, the final activations are usually passed through a softmax function, which squashes each row of values in your table into numbers between 0 and 1 that sum to 1. This allows us to treat them as probabilities when making the final classification.
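To make the squashing concrete, here is a minimal sketch of softmax in plain Python. The values in `raw_outputs` are made up for illustration and are not the numbers from your table.

```python
import math

def softmax(activations):
    """Map a list of raw activations to positive values that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() and leaves the result unchanged.
    largest = max(activations)
    exps = [math.exp(a - largest) for a in activations]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs: one row per sample, one column per class (YES, NO).
raw_outputs = [[2.3, -1.1], [-0.4, 1.7]]

probabilities = [softmax(row) for row in raw_outputs]

# The predicted class is the column with the highest probability.
predicted = [row.index(max(row)) for row in probabilities]
```

Note that softmax preserves the ordering within a row, so the predicted class is the same as taking the largest raw activation. What changes is that the values can now be read as probabilities.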

In practice, this means adding the softmax activation to your final Dense layer in Keras (activation="softmax") and then compiling the model using:

loss="categorical_crossentropy"
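Put together, it might look roughly like the sketch below. The function name `build_classifier`, the hidden layer size, and the `adam` optimizer are assumptions for illustration, since I don't know your actual architecture. Only the last layer and the loss are the parts that matter here.

```python
def build_classifier(n_features, n_classes=2):
    """Sketch of a small Keras classifier whose outputs are probabilities."""
    # Imported inside the function so the sketch can be loaded without TensorFlow installed.
    from tensorflow import keras

    model = keras.Sequential([
        keras.Input(shape=(n_features,)),
        # Hypothetical hidden layer; replace with your own architecture.
        keras.layers.Dense(16, activation="relu"),
        # Softmax on the final layer makes each output row sum to 1.
        keras.layers.Dense(n_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",  # expects one-hot encoded labels
        metrics=["accuracy"],
    )
    return model
```

With this loss your labels need to be one-hot encoded (e.g. YES as [1, 0] and NO as [0, 1]). If your labels are plain integers instead, loss="sparse_categorical_crossentropy" is the usual alternative.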