Problem Statement: I have problem making the Entity Embedding of Categorical Variable works for a simple dataset. I have followed the original github, or paper, or other blogposts[1,2,or this 3], or this Kaggle kernel; still not working.

Data Part: I am using the Ames Housing dataset as was hosted in Kaggle. I'm loading it in pandas dataframe as:

url = 'http://www.amstat.org/publications/jse/v19n3/decock/AmesHousing.xls'

# Load the file into a Pandas DataFrame

data_df = pd.read_excel(url)

For simplicity, out of 81 independent features, I am ONLY taking the Neighborhood, which is categorical, and the Gr Liv Area, which is numerical. And SalePrice, which is our target. I also split the data into train, and test and normalize the numerical variables.

features = ['Neighborhood','Gr Liv Area']

target = ['SalePrice']

data_df=data_df[features + target]

X_train, y_train = data_df.iloc[:2000][features], data_df.iloc[:2000][target]

X_test = data_df.iloc[2000:][features]

X_train['Gr Liv Area']=StandardScaler().fit_transform(X_train['Gr Liv Area'].reshape(-1, 1))

y_train=StandardScaler().fit_transform(y_train)

Embedding Neural Net: Here is the block of code where I am building the Entity Embedding Neural Net including both the categorical and numerical variables. In Entity Embedding, there is a particular hyperparamter that defines the embedding size (as we have in NLP). Here I am using of the above-mentioned blogpost strategy to choose that.

input_models=[]

output_embeddings=[]

numerics = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']

for categorical_var in X_train.select_dtypes(include=['object']):

#Name of the categorical variable that will be used in the Keras Embedding layer

cat_emb_name= categorical_var.replace(" ", "")+'_Embedding'

# Define the embedding_size

no_of_unique_cat = X_train[categorical_var].nunique()

embedding_size = int(min(np.ceil((no_of_unique_cat)/2), 50 ))

vocab = no_of_unique_cat+1

#One Embedding Layer for each categorical variable

input_model = Input(shape=(1,))

output_model = Embedding(vocab, embedding_size, name=cat_emb_name)(input_model)

output_model = Reshape(target_shape=(embedding_size,))(output_model)

#Appending all the categorical inputs

input_models.append(input_model)

#Appending all the embeddings

output_embeddings.append(output_model)

#Other non-categorical data columns (numerical).

#I define single another network for the other columns and add them to our models list.

input_numeric = Input(shape=(len(X_train.select_dtypes(include=numerics).columns.tolist()),))

embedding_numeric = Dense(64)(input_numeric)

input_models.append(input_numeric)

output_embeddings.append(embedding_numeric)

#At the end we concatenate altogther and add other Dense layers

output = Concatenate()(output_embeddings)

output = Dense(500, kernel_initializer="uniform")(output)

output = Activation('relu')(output)

output = Dense(256, kernel_initializer="uniform")(output)

output = Activation('relu')(output)

output = Dense(1, activation='sigmoid')(output)

model = Model(inputs=input_models, outputs=output)

model.compile(loss='mean_squared_error', optimizer='Adam',metrics=['mse','mape'])

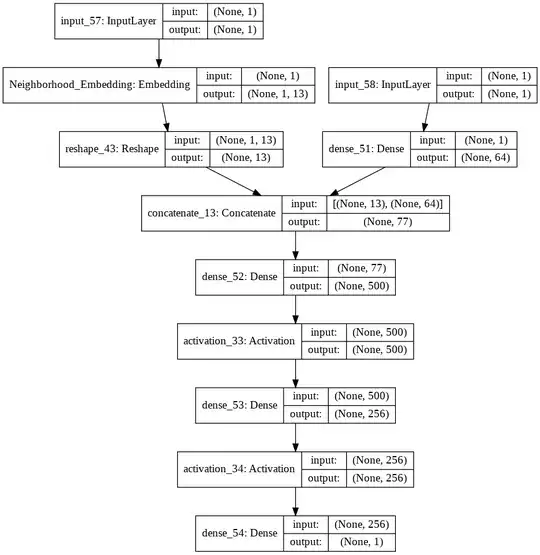

At the end, the model looks like this:

This look OK to me, unless I'm missing sth. Anyway, when I'm training the model like below:

history = model.fit(X_train,y_train , epochs = 200 , batch_size = 16, verbose= 2)

I get a rather usual keras error:

ValueError: Error when checking model input: the list of Numpy arrays that you are passing to your model is not the size the model expected. Expected to see 2 array(s), but instead got the following list of 1 arrays: [array([['NAmes', 0.31507361227175135],

['NAmes', -1.2242024755540366],

['NAmes', -0.3472201781480285],

...,

Then I looked more carefully at the original github or that Kaggle kernel, I noticed one has to convert the data to list format to match the network structure (still I am not sure I fully understand WHY!, see the preproc function there). Anyway, I convert my data to the list format like:

X_train_list = []

for i,column in enumerate(X_train.columns.tolist()):

X_train_list.append(X_train.values[..., [i]])

Now when trying to train once again this time using the list format of the data i.e. X_train_list:

history = model.fit(X_train_list,y_train , epochs = 200 , batch_size = 16, verbose= 2)

This time it starts with the first Epoch, then immediately stops with the following error:

ValueError: could not convert string to float: 'Mitchel'

It is rather obvious that it complains about one of the categories of the only Neighborhood variable that I have not encoded! Sure I have not, I thought that was the whole purpose of the Entity Embedding that the networks initiates a random embedding weights and learn the best embedding of that categorical variable during optimization of the target. Super confused!! Any help is much appreciated.