Despite the downvote, the question is clear, and it's a common one that I'm sure most people stumble across after doing machine learning work for some time.

The goal was to make a stronger predictive model from multiple trained models.

Quote from my question:

> is it possible to aggregate these 30 fitted models into a single model?

Answer: yes, but there's no good functionality in sklearn that allows you to do this.

Verbose answer:

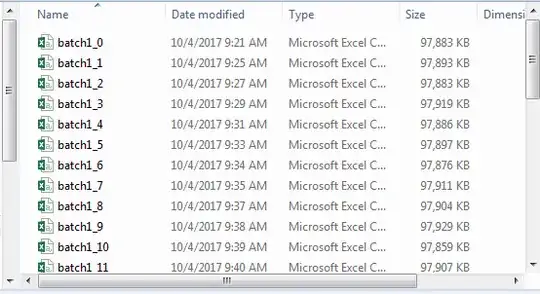

Imagine you have 30 CSV files, each containing 15,000 class-0 and 15,000 class-1 samples. In other words, each file has an equal number of each binary response (no class imbalance). I generated these files myself because 1) the data I'm working with is too big to fit into memory (point #1 of my original question) and 2) it contains 97% class 0 and 3% class 1 (a large class imbalance). My goal is to see whether it's even possible to distinguish a 0 from a 1 once the class imbalance issue is removed from the equation. If distinguishing features are found, I want to know what those features are.

To generate each batch, I grabbed 15,000 class-1 responses, randomly sampled 15,000 more from the 97% class-0 majority, joined them into one dataset (30,000 samples total), and shuffled the rows at random.
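The batch construction described above can be sketched roughly as follows. This is only an illustration on a tiny synthetic frame: the function name `make_balanced_batch`, the column names `feature` and `y`, and the assumption that the class-1 and class-0 rows are already available as two DataFrames are mine, not part of the original workflow (the real data did not fit into memory).

```python
import numpy as np
import pandas as pd


def make_balanced_batch(positives, negatives, seed):
    """Pair the class-1 rows with an equally sized random draw of class-0 rows."""
    # Draw as many class-0 rows as there are class-1 rows, without replacement.
    sampled_negatives = negatives.sample(n=len(positives), random_state=seed)
    batch = pd.concat([positives, sampled_negatives], ignore_index=True)
    # Shuffle so the two classes are interleaved rather than stacked in blocks.
    return batch.sample(frac=1, random_state=seed).reset_index(drop=True)


# Tiny synthetic stand-in for the real data: 3% class 1, 97% class 0.
rng = np.random.default_rng(0)
data = pd.DataFrame({"feature": rng.normal(size=1000), "y": [1] * 30 + [0] * 970})
positives = data[data["y"] == 1]
negatives = data[data["y"] == 0]

# A different seed per batch gives each batch its own class-0 sample.
batches = [make_balanced_batch(positives, negatives, seed=i) for i in range(3)]
```

Each element of `batches` could then be written out with `to_csv` to produce one batch file per seed.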

I then went through each batch and trained an XGBClassifier() using optimal parameters found from GridSearchCV() for each. I then saved the model to disk (using Python's pickle capabilities).
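A minimal sketch of that per-batch step, assuming scikit-learn is installed. The helper name `fit_and_save`, the `cv=3` setting, and the `roc_auc` scoring are assumptions for illustration; the original does not say which grid or scoring was used.

```python
import pickle

from sklearn.model_selection import GridSearchCV


def fit_and_save(estimator, param_grid, X, y, path):
    """Grid-search `estimator` on one batch and pickle the refitted best model."""
    # cv and scoring are illustrative choices, not taken from the original.
    search = GridSearchCV(estimator, param_grid, cv=3, scoring="roc_auc")
    search.fit(X, y)
    # best_estimator_ is the model refit on the whole batch with the best parameters.
    with open(path, "wb") as f:
        pickle.dump(search.best_estimator_, f)
    return search.best_params_
```

With xgboost installed, a call such as `fit_and_save(XGBClassifier(), {"max_depth": [3, 5]}, X, y, "models/model_0.pkl")` per batch would produce the saved models; the grid shown here is hypothetical.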

At this point, you have 30 saved models.

/models/ directory looks like this:

    ['model_0.pkl', 'model_1.pkl', 'model_10.pkl', 'model_11.pkl', 'model_12.pkl',
     'model_13.pkl', 'model_14.pkl', 'model_15.pkl', 'model_16.pkl', 'model_17.pkl',
     'model_18.pkl', 'model_19.pkl', 'model_2.pkl', 'model_20.pkl', 'model_21.pkl',
     'model_22.pkl', 'model_23.pkl', 'model_24.pkl', 'model_25.pkl', 'model_26.pkl',
     'model_27.pkl', 'model_28.pkl', 'model_29.pkl', 'model_3.pkl', 'model_4.pkl',
     'model_5.pkl', 'model_6.pkl', 'model_7.pkl', 'model_8.pkl', 'model_9.pkl']

Again, going back to the original question - is it possible to aggregate these into a single model? I wrote a pretty basic Python function for this.

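    import os
    import pickle
    import re

    import pandas as pd


    def xgb_predictions(X):
        ''' returns predictions from 30 saved models '''
        predictions = {}
        for pkl_file in os.listdir('./models/'):
            # model index parsed from the file name, e.g. 'model_12.pkl' -> 12
            file_num = int(re.search(r'\d+', pkl_file).group())
            # load one model at a time and close the file handle afterwards
            with open(os.path.join('models', pkl_file), mode='rb') as f:
                xgb = pickle.load(f)
            y_pred = xgb.predict(X)
            predictions[file_num] = y_pred
        # one column per model, ordered by model index
        new_df = pd.DataFrame(predictions)
        new_df = new_df[sorted(new_df.columns)]
        return new_df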

It iterates through each file in a directory of saved models and loads them one at a time, with each model casting its own "vote", so to speak. The end result is a new dataframe of predictions with one column per model.

Since this new dataset is much narrower (X.shape[0] x 30, i.e. one row per sample and one column per model), it will fit into memory. I iterate through each batch file, call xgb_predictions(X) to get a dataframe of predictions, add the y column to that dataframe, and append it to a new file. I then read the full dataset of predictions and fit a "level 2" model where X is the prediction data and y is still y.
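The append-then-reload step might look something like the sketch below. The function names, the `target="y"` column name, and the `predict_fn` parameter (which stands in for `xgb_predictions`) are assumptions made so the example stays self-contained; the original does not show this code.

```python
import os

import pandas as pd


def append_level2_rows(batch_files, predict_fn, out_path, target="y"):
    """Append one block of base-model predictions plus the label per batch file."""
    for batch_file in batch_files:
        batch = pd.read_csv(batch_file)
        # predict_fn plays the role of xgb_predictions: features in, one column per model out.
        level2 = predict_fn(batch.drop(columns=[target]))
        level2[target] = batch[target].to_numpy()
        # Write the header only once, when the output file does not exist yet.
        level2.to_csv(out_path, mode="a", index=False, header=not os.path.exists(out_path))


def load_level2(out_path, target="y"):
    """Read the accumulated predictions back and split them into X and y."""
    level2 = pd.read_csv(out_path)
    return level2.drop(columns=[target]), level2[target]
```

Note that after the round trip through CSV the model columns come back as strings ("0", "1", ...), so the level 2 model should be fed columns in the same order at prediction time.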

So to recap, the concept for binary classification is: create class-balanced datasets, train a model on each, then run through each dataset and let every trained model cast a prediction. This collection of predictions is, in a way, a transformed version of your original dataset. You build another model that tries to predict y given the predictions dataset. Train it all in memory so you end up with a single model. Any time you want to predict, you take your dataset, transform it into predictions, then use your trained level 2 model to cast the final prediction.
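One way to package the recap into a single object is sketched here. The class name `TwoLevelModel` and its method names are hypothetical; `base_predict` is assumed to behave like `xgb_predictions`, and `level2_model` can be any estimator exposing `fit` and `predict`, since the original does not say which level 2 model was used.

```python
class TwoLevelModel:
    """Chains the base-model transform with a level 2 estimator."""

    def __init__(self, base_predict, level2_model):
        self.base_predict = base_predict  # e.g. xgb_predictions
        self.level2_model = level2_model  # any estimator with fit/predict

    def fit_level2(self, predictions, y):
        # Level 2 never sees raw features, only the base models' votes.
        self.level2_model.fit(predictions, y)
        return self

    def predict(self, X):
        # Transform raw features into votes, then let level 2 decide.
        return self.level2_model.predict(self.base_predict(X))
```

Keeping the transform and the level 2 model together means callers only ever pass raw features to `predict`, which matches the "single model" goal of the question.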

This technique performed much better than any single model did, and better even than stacking with UNTRAINED prior models.