The most pythonic way of doing this would be (and running this twice, as a nested loop)

>>> import numpy as np

>>> from sklearn.model_selection import ShuffleSplit

>>> X = np.array([[1, 2], [3, 4], [5, 6], [7, 8], [3, 4], [5, 6]])

>>> y = np.array([1, 2, 1, 2, 1, 2])

>>> rs = ShuffleSplit(n_splits=5, test_size=.25, random_state=0)

>>> rs.get_n_splits(X)

5

>>> print(rs)

ShuffleSplit(n_splits=5, random_state=0, test_size=0.25, train_size=None)

>>> for train_index, test_index in rs.split(X):

... print("TRAIN:", train_index, "TEST:", test_index)

TRAIN: [1 3 0 4] TEST: [5 2]

TRAIN: [4 0 2 5] TEST: [1 3]

TRAIN: [1 2 4 0] TEST: [3 5]

TRAIN: [3 4 1 0] TEST: [5 2]

TRAIN: [3 5 1 0] TEST: [2 4]

>>> rs = ShuffleSplit(n_splits=5, train_size=0.5, test_size=.25,

... random_state=0)

>>> for train_index, test_index in rs.split(X):

... print("TRAIN:", train_index, "TEST:", test_index)

TRAIN: [1 3 0] TEST: [5 2]

TRAIN: [4 0 2] TEST: [1 3]

TRAIN: [1 2 4] TEST: [3 5]

TRAIN: [3 4 1] TEST: [5 2]

TRAIN: [3 5 1] TEST: [2 4]

Scikit learn now provides a much more detailed way of doing cross-validation:https://scikit-learn.org/stable/modules/cross_validation.html#cross-validation-iterators

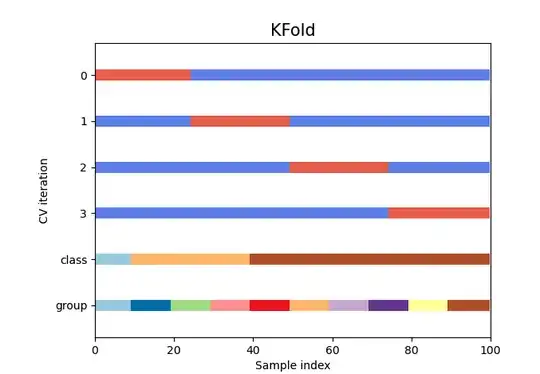

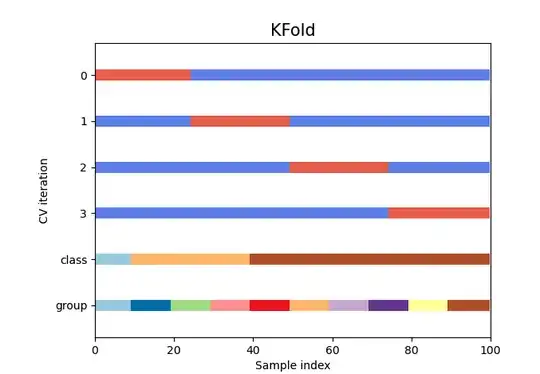

There is also the option of KFold that might be what you are looking for:

>>> import numpy as np

>>> from sklearn.model_selection import RepeatedKFold

>>> X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])

>>> random_state = 12883823

>>> rkf = RepeatedKFold(n_splits=2, n_repeats=2, random_state=random_state)

>>> for train, test in rkf.split(X):

... print("%s %s" % (train, test))

...

[2 3] [0 1]

[0 1] [2 3]

[0 2] [1 3]

[1 3] [0 2]

They also now provide graphics that will allow you to visualize the type of train-test split that you are looking for (there are more types of train test split than random)