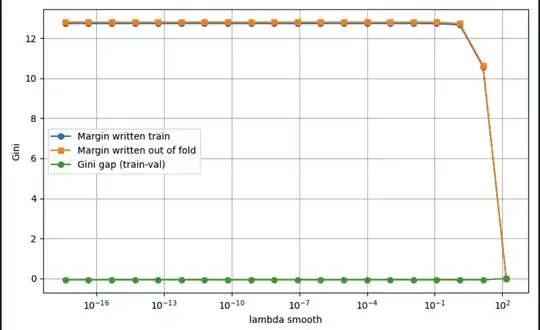

I'm fitting a GLM with a smoothness penalty and tracking how the out-of-fold Gini changes as the penalty increases, so that I can pick the optimal smoothness parameter for my model. I expected the curve to rise and then fall, since the model should overfit at low penalization and underfit at high penalization. Instead, the out-of-fold Gini decreases monotonically with the smoothness parameter, and it closely tracks the train Gini curve (though the two are not identical). I tried very small smoothness parameter values, but the shape of the curve did not change. I also checked for data leakage and found none. What could explain this behavior?

My dataset has approximately 640k rows and 9 variables (all categorical), and I use 4-fold cross-validation. The Gini shown in the curves is that of the first fold.
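For concreteness, here is a minimal sketch of averaging the out-of-fold Gini over all folds instead of reading it from a single fold. It assumes a binary target and the definition Gini = 2 * AUC - 1, which may differ from the Gini actually used for the curves above (for example, a normalized Gini on claim counts or amounts). The helper names `gini_from_scores` and `mean_oof_gini` are hypothetical and only for illustration.

```python
from statistics import mean


def gini_from_scores(y_true, y_score):
    """Gini = 2 * AUC - 1, with AUC computed from midranks (ties share a rank).

    Assumes y_true contains only 0/1 labels.
    """
    n = len(y_true)
    order = sorted(range(n), key=lambda i: y_score[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the run of tied scores starting at position i.
        while j + 1 < n and y_score[order[j + 1]] == y_score[order[i]]:
            j += 1
        midrank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = midrank
        i = j + 1

    n_pos = sum(y_true)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("Gini is undefined when only one class is present")

    # Mann-Whitney U statistic of the positives, scaled to an AUC.
    rank_sum_pos = sum(r for r, y in zip(ranks, y_true) if y == 1)
    auc = (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
    return 2 * auc - 1


def mean_oof_gini(folds):
    """folds: list of (y_true, y_score) pairs, one per held-out fold."""
    return mean(gini_from_scores(y, s) for y, s in folds)
```

Calling `mean_oof_gini` once per smoothness value, with the held-out predictions of all 4 folds, gives a curve that is less sensitive to the particular split of the first fold.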

I'm working in Python. The dataset is insurance client data, but it is synthetic (for demo purposes).


Iti
