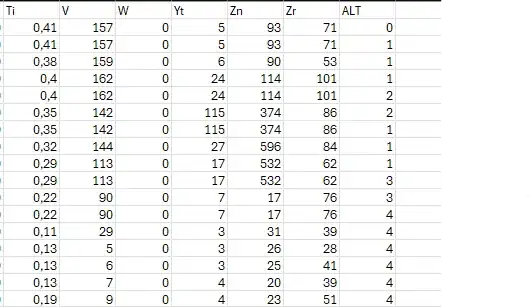

I'm trying to learn machine learning. I understand how to train a model when there are only two labels, but I don't understand how it works when there are more than two, or how to show that on a graph. For example, suppose I want to determine the type of rock based on its chemical content. I first tried the KNN method, but then I got confused about how to proceed: do I have to use Pythagoras, squaring the differences between the values of each data point, or is there an easier approach to this problem? Here's an example of the data I'm referring to. I would be happy if you could help me.


For multiclass classification (like identifying rock types based on chemical content), here is how you can approach it:

Exploratory Data Analysis (EDA)

Start by understanding the structure and quality of your data:

Data types: Are features numeric, categorical, or mixed?

Missing values: Handle them with imputation or removal.

Class balance: Check if some rock types are underrepresented.

Univariate analysis: Look at feature distributions using histograms or boxplots.

Bivariate/multivariate analysis: Use pairplots, correlation heatmaps, or 3D scatter plots to explore relationships between features and labels.
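A minimal pandas sketch of these checks, assuming your data sits in a DataFrame with one numeric column per chemical measurement and one label column. The column names (`SiO2`, `MgO`, `FeO`, `rock_type`), the values, and the class proportions are all invented for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical rock data: oxide percentages plus a rock-type label.
# Column names, values and class proportions are invented for illustration.
rng = np.random.default_rng(0)
n = 60
df = pd.DataFrame({
    "SiO2": rng.normal(55, 8, n),
    "MgO": rng.normal(6, 2, n),
    "FeO": rng.normal(8, 3, n),
    "rock_type": rng.choice(["basalt", "andesite", "granite"], size=n, p=[0.5, 0.3, 0.2]),
})
df.loc[[3, 17], "MgO"] = np.nan          # simulate two missing measurements
features = ["SiO2", "MgO", "FeO"]

print(df.dtypes)                                      # numeric vs categorical columns
print(df.isna().sum())                                # missing values per column
print(df["rock_type"].value_counts(normalize=True))   # class balance
print(df[features].describe())                        # univariate summaries
print(df.groupby("rock_type")[features].mean())       # per-class means
print(df[features].corr())                            # pairwise correlations

# Simplest imputation: replace missing MgO values with the column median
df["MgO"] = df["MgO"].fillna(df["MgO"].median())
```

In a real project, fit the imputation on the training split only (for example with scikit-learn's `SimpleImputer` inside a `Pipeline`) so that test data doesn't leak into it. For the plots themselves, `DataFrame.hist`, `DataFrame.boxplot`, or seaborn's `pairplot(df, hue="rock_type")` cover the univariate and bivariate views.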

Try dimensionality reduction (PCA) to project high-dimensional data into 2D/3D for better visualization of class separation.
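A sketch of the PCA step with scikit-learn. It uses synthetic data from `make_classification` (5 features, 3 classes) as a stand-in for the chemical measurements; swap in your own feature matrix and labels. Scaling comes first because PCA is sensitive to feature ranges.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the rock data: 5 "chemical" features, 3 rock classes
X, y = make_classification(n_samples=150, n_features=5, n_informative=3,
                           n_redundant=0, n_classes=3, random_state=0)

X_scaled = StandardScaler().fit_transform(X)   # put features on a common scale
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)             # each sample becomes one 2D point

print(X_2d.shape)                      # (150, 2)
print(pca.explained_variance_ratio_)   # share of total variance kept by each axis

# To draw it, e.g. with matplotlib, make one scatter call per class:
# for label in np.unique(y):
#     plt.scatter(X_2d[y == label, 0], X_2d[y == label, 1], label=f"class {label}")
```

Coloring points by class is how you "put more than two labels on a graph": each rock type gets its own color, no matter how many types there are. If the first two components explain little of the variance, the 2D picture may hide separation that exists in the full feature space.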

Use ANOVA (or other statistical tests) to see which features differ across classes; this helps you understand which features matter.
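One way to run a per-feature one-way ANOVA is scikit-learn's `f_classif`, sketched here on synthetic data. The feature names are hypothetical labels attached to synthetic columns; with `shuffle=False`, `make_classification` places the informative columns first, so you would expect the first two to rank highest.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import f_classif

# Hypothetical feature names; the data itself is synthetic
feature_names = np.array(["SiO2", "MgO", "FeO", "CaO", "Na2O"])
X, y = make_classification(n_samples=150, n_features=5, n_informative=2,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           shuffle=False, random_state=0)

# One-way ANOVA F-test per feature: does its mean differ across the classes?
F, p = f_classif(X, y)
for i in np.argsort(F)[::-1]:          # largest F (strongest class difference) first
    print(f"{feature_names[i]:>5}  F = {F[i]:8.2f}  p = {p[i]:.3g}")
```

A large F only says that class means differ for that feature on its own; it doesn't capture interactions between features, so treat it as an exploratory signal rather than a final feature selection.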

Start with multiclass (multinomial) logistic regression, which is simple, interpretable, and often surprisingly effective. You can later try models like KNN, Random Forest, or XGBoost depending on performance.
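A sketch of that workflow on synthetic 3-class data: logistic regression (with scaling) and a random forest compared by 5-fold cross-validated accuracy. The data and hyperparameters are placeholders. In recent scikit-learn versions, `LogisticRegression` with the default `lbfgs` solver fits a multinomial (softmax) model automatically when there are more than two classes, so no extra setup is needed.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the rock data: 6 features, 3 classes
X, y = make_classification(n_samples=300, n_features=6, n_informative=4,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           random_state=42)

models = {
    # Scaling inside the pipeline is refit on each training fold
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

scores = {}
for name, model in models.items():
    cv_scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = cv_scores.mean()
    print(f"{name:>20}: {cv_scores.mean():.3f} +/- {cv_scores.std():.3f}")

# A fitted multiclass model returns one probability per class; each row sums to 1
clf = models["logistic regression"].fit(X, y)
proba = clf.predict_proba(X[:2])
print(proba.round(3))
```

Accuracy can be misleading when some rock types are rare; with imbalanced classes, `scoring="balanced_accuracy"` or a per-class report is usually more informative.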

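If you use KNN, you don't need to apply the Pythagorean theorem manually; libraries like scikit-learn handle it, using Euclidean distance (Pythagoras extended to any number of features) by default. KNN also needs no change for more than two labels: a new sample simply gets the most common label among its k nearest neighbors. Just scale your features (with StandardScaler or MinMaxScaler) before fitting, otherwise the features with the largest numeric ranges dominate the distance.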
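To show what the library does for you, here is a hand-rolled multiclass KNN next to scikit-learn's `KNeighborsClassifier`, on synthetic data. The helper `knn_predict_one` is hypothetical, written only for illustration; it assumes integer labels `0..n_classes-1` and breaks vote ties toward the smallest label. Both versions scale with `StandardScaler` fitted on the training split, so their predictions should agree almost everywhere.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def knn_predict_one(X_train, y_train, x, k=5):
    """Hypothetical hand-rolled KNN for one sample; labels must be ints 0..n_classes-1."""
    # Euclidean distance is Pythagoras extended to n features:
    # d = sqrt((a1 - b1)^2 + (a2 - b2)^2 + ... + (an - bn)^2)
    dists = np.sqrt(((X_train - x) ** 2).sum(axis=1))
    nearest = np.argsort(dists)[:k]
    # Majority vote over the k neighbours (any number of classes);
    # argmax breaks ties toward the smallest label
    votes = np.bincount(y_train[nearest], minlength=y_train.max() + 1)
    return int(np.argmax(votes))


# Synthetic stand-in for the rock data: 4 features, 3 classes
X, y = make_classification(n_samples=300, n_features=4, n_informative=3,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3,
                                                    stratify=y, random_state=1)

# Scale first so no single feature dominates the distance
scaler = StandardScaler().fit(X_train)
Xtr, Xte = scaler.transform(X_train), scaler.transform(X_test)
manual = np.array([knn_predict_one(Xtr, y_train, x, k=5) for x in Xte])

# Same model in scikit-learn (default metric: Minkowski with p=2, i.e. Euclidean)
knn = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
knn.fit(X_train, y_train)
pred = knn.predict(X_test)

print("manual accuracy: ", (manual == y_test).mean())
print("sklearn accuracy:", knn.score(X_test, y_test))
print("agreement:       ", (manual == pred).mean())
```

In practice you would only keep the scikit-learn half and tune `n_neighbors` with cross-validation; the manual version is there to show that the multiclass case is just a vote over more than two labels.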

For deeper modeling strategies, see Frank Harrell's book Regression Modeling Strategies.
