We have ~30 audio snippets. Around 50% of them come from the same speaker, our target speaker, and the rest come from various other speakers. We want to extract all snippets from the target speaker: in other words, figure out which voice occurs most frequently and then select every snippet with that voice.
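Once every snippet carries a speaker label, the "most frequent voice" step is a majority vote over those labels. A minimal sketch, assuming `labels` is a hypothetical per-snippet list of cluster ids (for example the output of a clustering step); the helper name `select_majority_speaker` is also hypothetical:

```python
from collections import Counter

def select_majority_speaker(labels):
    """Return the indices of snippets assigned to the most frequent label.

    `labels` is a hypothetical input: one speaker/cluster id per snippet.
    On a tie, Counter.most_common returns the label that was seen first.
    """
    majority_label, _ = Counter(labels).most_common(1)[0]
    return [i for i, label in enumerate(labels) if label == majority_label]

# Made-up labels for 8 snippets
print(select_majority_speaker([0, 2, 0, 1, 0, 3, 0, 2]))  # [0, 2, 4, 6]
```

This only works as well as the labels it is given, so the quality of the clustering is what actually decides the result.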

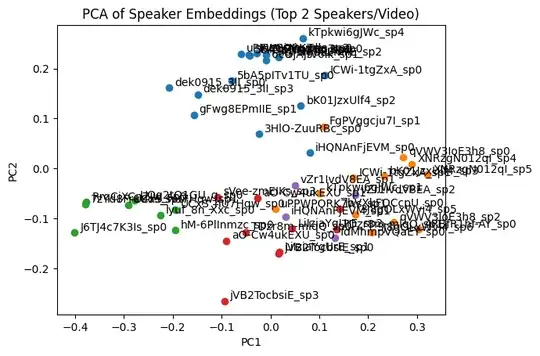

For this purpose, we used the resemblyzer library to generate speaker-level embeddings for our audio snippets, clustered them with agglomerative clustering, and projected them with PCA to see whether any clusters emerge:

```python
import numpy as np
import matplotlib.pyplot as plt
from resemblyzer import VoiceEncoder
from sklearn.cluster import AgglomerativeClustering
from sklearn.decomposition import PCA

encoder = VoiceEncoder()

# audio_snippets is a list of NumPy arrays, one per recording.
# embed_utterance expects a 16 kHz float waveform, e.g. the output of resemblyzer.preprocess_wav.
embeddings = np.vstack([
    encoder.embed_utterance(snippet, return_partials=False)
    for snippet in audio_snippets
])

# Cluster the speaker embeddings without fixing the number of clusters in advance
clustering = AgglomerativeClustering(n_clusters=None, distance_threshold=0.8, linkage='ward')
labels = clustering.fit_predict(embeddings)

# Project the embeddings to 2D for visualization only
pca = PCA(n_components=2)
X_pca = pca.fit_transform(embeddings)

plt.figure()
for i, (x_val, y_val) in enumerate(X_pca):
    # Color each point by its cluster label
    plt.scatter(x_val, y_val, color=f"C{labels[i]}")
    # Label each point with its snippet index
    plt.annotate(str(i), (x_val, y_val), xytext=(5, 2), textcoords="offset points")

plt.xlabel("PC1")
plt.ylabel("PC2")
plt.title("PCA of Speaker Embeddings (Top 2 Speakers/Video)")
plt.show()
```

We expected this to produce one tight cluster of about 15 snippets (the target speaker), with the remaining ~15 scattered loosely around it. Instead, the snippets are not grouped by speaker: most clusters still contain recordings from other speakers, and overall the separation is poor.
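The expected structure (one dense group, everything else scattered) can also be checked directly on the pairwise cosine similarities instead of through a full clustering. A minimal NumPy sketch, assuming `embeddings` is the `(n_snippets, dim)` array of utterance embeddings; the function name `select_dominant_speaker` and the `0.75` threshold are hypothetical, and the threshold would have to be tuned on real recordings:

```python
import numpy as np

def select_dominant_speaker(embeddings, threshold=0.75):
    """Return indices of the snippets in the densest group of similar voices.

    `threshold` is a hypothetical cosine-similarity cut-off, not a known-good value.
    """
    X = np.asarray(embeddings, dtype=float)
    # L2-normalize each row so that dot products are cosine similarities
    X = X / np.linalg.norm(X, axis=1, keepdims=True)
    sim = X @ X.T
    # For every snippet, count how many *other* snippets are above the threshold
    neighbour_counts = (sim >= threshold).sum(axis=1) - 1
    # The snippet with the most close neighbours serves as a prototype of the dominant voice
    anchor = int(np.argmax(neighbour_counts))
    # Select the anchor itself plus every snippet similar to it
    return np.flatnonzero(sim[anchor] >= threshold)

# Synthetic data: 15 noisy copies of one "voice" vector plus 15 unrelated random vectors
rng = np.random.default_rng(0)
target = rng.normal(size=256) + 0.1 * rng.normal(size=(15, 256))
others = rng.normal(size=(15, 256))
print(select_dominant_speaker(np.vstack([target, others])))
```

Unlike a global clustering, this only searches for a single dense neighbourhood, which matches the assumption that one voice dominates and the rest are spread out. It still depends entirely on the embeddings separating the target speaker from the others, so printing the neighbour counts on the real data is a quick way to see whether such a dense group exists at all.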

Is there a more effective way to accomplish this?